Engineers say the best way to make vehicles fully autonomous is to analyze all sensor signals and driving situations with a centralized processor.

AMIN KASHI, MATTHIAS POLLACH, NIZAR SALLEM, Mentor Graphics, a Siemens Business

It’s no secret that elbow room may be growing scarce among the companies working on self-driving tech. A Comet Labs tally published by Wired magazine identified 263 firms “racing toward autonomous cars,” though surely only a fraction will reach the finish line.

Meanwhile, the tech utopian ideal — full Level-Five autonomy (meaning the vehicle can safely drive itself with no human input in any/all conditions) — remains years away. There are numerous thorny engineering problems yet to solve. Among them are nitty-gritty details relating to power consumption, signal latency, system integration, manufacturability, and cost.

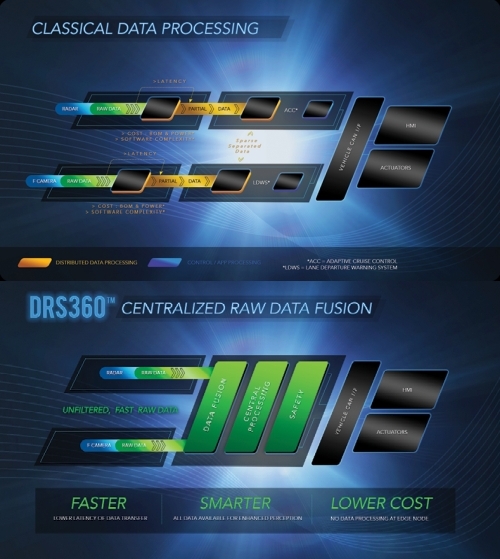

The media sometimes erroneously suggests that the basic approaches to solving these problems are essentially interchangeable. In fact, there are major differences in how to best solve self-driving car challenges. One example is Mentor’s 2017 introduction of its DRS360 Autonomous Driving Platform. Many in the industry appear to be working on scaling-up existing ADAS systems, but Mentor’s approach takes a different and more direct path to Level Five, one that leans heavily on the centralized fusion of raw sensor data.

DRS360 applies advanced machine learning technology to narrow slices of fused data which leads to efficient and high-confidence object recognition. Accurate object recognition is key to hands-off driving in all conditions. The approach taken is to broadly acquire data from multiple sensor modalities deployed all around the car, then identify, classify and act upon only those objects and events of relevance to autonomous driving policy. Not incidentally, this approach mimics the sensory perception and decision-making of human drivers. And the production-intent nature of the DRS360 platform is relatively open and agnostic to other hardware and software used to implement self-driving. Consequently, developers avoid the black-box, vendor-lock-in problem inherent in other solutions.

In short, as autonomous driving moves from hype to the hard work of getting past level-two autonomy, the centralized, raw-data platform approach may well represent the surest means to get there.

SCALING UP DISTRIBUTED ADAS DOESN’T WORK

In recent years ADAS (advanced driver assistance systems) have proliferated, even in new, economy class vehicles. For example, for just $1,000, buyers of modest Honda Civic sedans can add the Honda Sensing system, which includes adaptive cruise control, lane-keeping-assist, forward collision warning, and more.

The cameras and sensors that make ADAS possible all come with onboard hardware and software for processing data captured as the car moves down the road. OEMs and Tier Ones implementing complex ADAS systems must integrate the output of all these devices. Camera and sensor systems typically use microcontrollers to filter and process data before sending it via CAN bus to a central processor. But the precise way this filtering takes place is opaque to carmakers. In addition, the processing of data within the sensor nodes boosts system software complexity and adds cost, especially with the extensive verification processes necessary to ensure the code meets strict automotive standards.

Power and consequent thermal challenges pose related problems. It’s not practical to actively solve thermal issues in computing systems (by deploying fans or water cooling) because the additional equipment adds more risk of component failure, maintenance complexity, and noise.

Obviously, power consumption will be a major factor in electric autonomous vehicles because of range limitations and other issues. Less obvious is how power is a gating factor for internal combustion engines, too. Power-hungry ADAS may force a re-architecting of cabling for the vehicle electrical distribution system (EDS), exceptionally expensive in both time and cost.

Today the amount of data sensors generate is a choke point for decision-making algorithms. Indeed it seems inevitable that the eyes and ears of Level-Five vehicles, whenever they arrive, will carry an array of simplified, microcontroller-less “raw data” sensor modules that leave most of the processing and decision making to a central processing engine, much as the central nervous system works in humans.

Unlike traditional, distributed computing approaches, a platform based on raw data uses the entirety of all captured data to generate the most complete view of the vehicle’s environment. Driving decisions are made based on the full availability and comprehension of all data at hand—data that’s accurate, complete and unbiased/unfiltered. In object recognition, targeting the most relevant data produces better, higher-confidence results. And this can only happen if you preserve all the data until it’s time to use it.

Utilizing unfiltered data is the ideal way to build a rich, spatially and temporally synced view of the car’s surrounding environment – a goal made feasible by combining FPGA and CPU technologies on the same platform.

Autonomous vehicles based on the centralized, raw-data platform approach are fundamentally agnostic in terms of sensor vendor. Nearly any camera, lidar, radar or other sensor types can be adapted to the platform, which is open and transparent. Mentor’s sensor fusion and object recognition algorithms are advanced, but carmakers and Tier Ones are free to apply their own algorithms and even chose their own chips and SoCs for the processing. Several major sensor systems only work with particular chipsets and GPUs, another form of vendor lock-in. And the major sensor vendors often require contracts for bulk purchases over relatively long periods, a potential risk given the fast-changing market landscape. The new platform is also a boon to sensor vendors whose core competencies are usually in hardware rather than software.

Centralized, raw-data platforms provide an efficient way to identify events and regions of interest around a vehicle, and then combine the raw data from various sensor modalities to classify objects and ultimately make decisions about these events. This is important because each sensor comes with specific limitations. Lidar systems aren’t useful for detecting objects close to the car, may have difficulties in fog and rain, and tend to be large and expensive, though prices are dropping. Radar does better in inclement weather and is less expensive than lidar, but generally has lower resolution and is still unreliable at very short distances.

Cameras are among the most commonly deployed sensors today. However, vision sensors capture both useful and non-useful information without discrimination (the sky is of a little interest to a car), and optimal image sensing depends on lighting conditions.

Today most of these sensor types use onboard microcontrollers to preprocess and filter data before sending it downstream. The problem is that independently handling tasks like object tracking or scene flow segmentation boosts power consumption, processing requirements and most important, latency. Object tracking, for example, requires analyzing a sequence of frame data over time.

A better approach is to temporally and spatially sync all data from the car’s sensors in a single frame, narrow in on an object in a region of interest, and then examine the slice of fused data to perceive what’s going on just in that region. By dynamically processing only the data relevant to the decision at hand, centralized raw data platforms can make good driving decisions while costing less while using less power and space.

From a latency perspective, centralized, raw data platforms also excel. The platform employs sensors which are unburdened by the power, cost and size penalties of microcontrollers at each sensor nodes. There is no pre-processing at the sensor node, thereby reducing latency.

The Mentor platform is based on a streamlined data transport architecture which further lowers system latency by minimizing physical bus structures, hardware interfaces and complex, time-triggered Ethernet backbones. The FPGA-centric approach provides the highest possible signal processing performance. And fourth, all processing is handled in centralized fashion, avoiding latency resulting from data swapping, which is inherent in traditional, non-centralized approaches.

Neural networks and machine learning, of course, loom increasingly large in autonomous driving. It’s problematic and ultimately unrealistic to dump all sensor data to one deterministic system that must see every situation at least once before learning. One issue is the incredible overhead spent processing utterly useless information. Another is the black-box challenge of never knowing precisely what such a system is learning from the data fed into it, and the impossibility of ever reproducing all the data that went into making a certain machine-generated rule. This sort of lack of transparency is forbidden within standards like ISO 26262.

Centralized, raw-data platforms represent the “anti-black box” approach, which means customers can keep and access the highly valuable sensor data and other data flowing through the platform. The systems are also designed to allow OEMs and Tier Ones to customize or extend the algorithms used within the platform.

Centralized, raw-data platforms imply that the much better method is to not worry about classifying all vehicle surroundings in detail. Just as human drivers do, an autonomous driving system should invest resources mostly in those tasks that are central and safety-critical to the driving mission (which, for lack of a better description is safely traveling the roadways from point A to point B). This means separating out general situation awareness (modest focus), event detection and perception (more serious attention), and object classification (most critical).

For example, suppose you’re traveling down the road and a car merges into your lane in front of you. What’s happening two lanes over or several vehicles behind you matters little compared to how fast the merging vehicle is traveling, whether its brake lights are on, and whether you’ll have to slow down. Human drivers generally focus on such a situation automatically. Level Five autonomous vehicles ultimately will need to do the same.

THE SIGNAL AND THE NOISE

As sensors and instrumentation proliferate on vehicles, much is made of the issue of so-called data exhaust. Intel CEO Brian Krzanich made news last year when he predicted that the average self-driving car in 2020 would generate 4,000 gigabytes of data per day, roughly the daily data generated by 3,000 internet users. Skeptics like to ask about the point of collecting raw sensor data because there is no way to process it in real time. The answer is that there’s no need to consume all the raw data in real time — the important point is that all the data is there when it’s needed most.

The iterative approach to scaling up ADAS won’t work in the end. Far better to build new, highly open and flexible systems from scratch designed for level Five (but that allow OEMs to work in parallel and field systems in Level 2-4 along the way). Indeed, automotive engineers mostly agree on the technical superiority of raw data sensor fusion, though to-date Mentor is the only firm that has gone to the trouble of building a platform that implements this approach.

So, by far the biggest technical hurdle for Level Five — one that Mentor’s DRS360 platform based on centralized, raw-data fusion clears by a mile — is the need for new architectures that emulate a human way of fusing disparate information and focusing attention where it matters. Of course, this is a matter of life and death for drivers and others on the road.

Leave a Reply