Imagimob announced that its tinyML platform, Imagimob AI, supports quantization of Long Short-Term Memory (LSTM) layers and a number of other TensorFlow layers. LSTM layers are well suited to classifying, processing, and making predictions based on time series data, and are therefore of great value when building tinyML applications. The Imagimob AI software with quantization was first shipped to a Fortune Global 500 customer in November and has been in production since then. Currently, few other machine learning frameworks or platforms support the quantization of LSTM layers.

Imagimob AI takes a TensorFlow/Keras .h5 file and converts it into a single quantized, self-contained C source file and its accompanying header file at the click of a button. No external runtime library is needed.

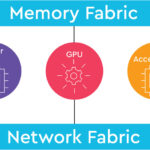

In tinyML applications, the main reason for quantization is that it reduces the memory footprint and lowers the performance requirements on the MCU. It also allows tinyML applications to run on MCUs without an FPU (floating-point unit), which means that customers can lower their device hardware costs.

Quantization refers to techniques for performing computations and storing tensors at lower bit widths than floating-point precision. A quantized model executes some or all of its tensor operations with integers rather than floating-point values. This allows for a more compact model representation and the use of high-performance vectorized operations on many hardware platforms. The technique is particularly useful at inference time, since it saves a lot of computation without sacrificing too much accuracy. In essence, it is the process of converting floating-point models into integer ones and reducing the numeric precision from 32 bits to 16 or 8 bits.
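To make the idea concrete, here is a minimal sketch of symmetric linear quantization to 16-bit integers in plain Python. It is an illustration only, not Imagimob AI's implementation: the function names, the single per-tensor scale, and the example weights are all assumptions made for this sketch.

```python
def quantize_int16(values):
    """Map floats to signed 16-bit integers using one scale per tensor (assumed scheme)."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor, where any scale would do.
    scale = max_abs / 32767 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int16 range.
    quantized = [max(-32768, min(32767, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.5, -1.25, 0.003, 0.9]
q, scale = quantize_int16(weights)
restored = dequantize(q, scale)

# The rounding error per value is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

The integers and the scale are all that need to be stored; the small rounding error is the accuracy cost that quantization trades for a smaller, integer-only model.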

Initial benchmarking of an AI model with LSTM layers, comparing a non-quantized and a quantized version running on an MCU without an FPU, shows that inference with the quantized model is around 6 times faster and that its RAM requirement is reduced by 50% when using a 16-bit integer representation.
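The 50% figure is consistent with simple arithmetic: a 16-bit integer takes half the space of a 32-bit float. The sketch below shows that calculation for a buffer of values; the buffer size is a hypothetical number chosen for illustration, not a measurement from the benchmark.

```python
def buffer_bytes(num_values, bits_per_value):
    """Bytes needed to store num_values at the given bit width."""
    return num_values * bits_per_value // 8


# Hypothetical number of values held in RAM during inference.
num_values = 10_000

float32_bytes = buffer_bytes(num_values, 32)
int16_bytes = buffer_bytes(num_values, 16)

# Fraction of memory saved by moving from 32-bit floats to 16-bit integers.
reduction = 1 - int16_bytes / float32_bytes
print(float32_bytes, int16_bytes, reduction)
```

The same calculation with 8-bit integers would give a 75% reduction, at the price of coarser precision.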
