Flex Logix Technologies, Inc. announced the availability and roadmap of PCIe boards powered by the Flex Logix InferX X1 accelerator – the industry’s fastest and most efficient AI inference chip for edge systems.

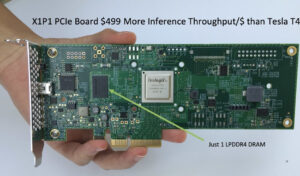

The InferX X1P1 pairs a single InferX X1 chip with a single LPDDR4x DRAM on a half-height, half-length PCIe board. Samples are available now to lead customers. General sampling will follow in Q1 2021, with production availability in Q2 2021. The InferX X1P4 will fit four InferX X1 chips onto a board of the same half-height, half-length size. It will sample in mid-2021, with production by the end of 2021. An InferX M.2 board will follow on the same schedule as the X1P4.

On YOLOv3, a model for object detection and recognition, the InferX X1P4 will deliver throughput similar to the Nvidia Tesla T4 at a volume price of $649 to $999. Some other real customer models run faster on the X1P4 than on the T4. The InferX X1P1 delivers about one third of the Tesla T4's YOLOv3 performance but sells for $399 to $499, and on some customer models it outperforms the T4. The InferX M.2 board will be priced similarly to the X1P1 and will offer the same performance.
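To make the price/performance claims concrete, here is a minimal sketch of the throughput-per-dollar arithmetic. It assumes YOLOv3 throughput normalized to the Tesla T4 (T4 = 1.0), taking "similar to the T4" as 1.0 for the X1P4 and "about 1/3" as exactly 1/3 for the X1P1. The `Board` class and the helper function names are hypothetical and used only for illustration. No T4 price is given here, so the sketch compares the two InferX boards only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Board:
    """Hypothetical record for one board's approximate YOLOv3 price/performance."""
    name: str
    rel_throughput: float  # YOLOv3 throughput relative to a Tesla T4 (T4 = 1.0), approximate
    price_low: float       # USD, low end of the stated volume price range
    price_high: float      # USD, high end of the stated volume price range

    def per_1000_usd(self, price: float) -> float:
        """T4-normalized YOLOv3 throughput per $1,000 of board price."""
        return self.rel_throughput / price * 1000.0


# Approximate figures taken from the announcement (assumption: "about 1/3" -> 1/3).
BOARDS = [
    Board("InferX X1P1", 1 / 3, 399.0, 499.0),
    Board("InferX X1P4", 1.0, 649.0, 999.0),
]


def throughput_per_dollar_range(board: Board) -> tuple[float, float]:
    """Return (worst, best) throughput per $1,000: the high price gives the worst case."""
    return board.per_1000_usd(board.price_high), board.per_1000_usd(board.price_low)


if __name__ == "__main__":
    for b in BOARDS:
        worst, best = throughput_per_dollar_range(b)
        print(f"{b.name}: {worst:.2f} to {best:.2f} T4-equivalents per $1,000")
```

Under these assumptions, the X1P4 delivers more YOLOv3 throughput per dollar than the X1P1 across both price ranges. The X1P1's appeal is its lower absolute price and power.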

The new InferX PCIe boards deliver higher throughput per dollar for lower-priced servers. The announced boards are:

- InferX X1P1 PCIe board: x4 PCIe Gen3/Gen4, 19W TDP
- InferX X1P4 PCIe board: x8 PCIe Gen3/Gen4, under 75W TDP
- InferX X1M M.2 board: x4 PCIe Gen3/Gen4, M.2 22x80mm form factor, 19W TDP

Flex Logix is also unveiling a suite of software tools to accompany the boards. It includes a compiler flow for TensorFlow Lite and ONNX models and an nnMAX Runtime Application. The suite also includes an InferX X1 driver. Its external APIs let applications easily configure and deploy models, and its internal APIs handle low-level functions that control and monitor the X1 board.

933MHz will be available in the second half of 2021.
