Best practices for protecting vehicles from hackers now include advanced verification techniques.

John Hallman, David Landoll, Siemens EDA

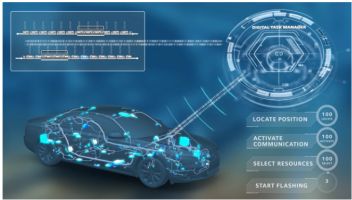

It’s no secret that much of the control for modern vehicles takes place via ICs, and any access to them may give an outsider uninvited control of the car. It is a challenge to identify the security threats associated with the modern automobile and ensure the vehicle and occupants are protected. Because of the numerous capabilities incorporated into vehicle electronics, the number of security threats are quite high, especially when low-level IC implementation vulnerabilities are included in the analysis.

It may be helpful for engineers working in this field to state the end goals or consequences of a security exploit in simple terms. The most common results of a security breach in a vehicle includes (1) risk to personal safety, (2) financial loss, (3) exposure of personal information, and (4) operational failure of a function in the vehicle itself. Developers who simply address all known threats may not have enough resources available to meet what are typically aggressive time-to-market objectives. Therefore, the use of security breach consequences will help prioritize and mitigate those threats which are most relevant.

It may be helpful for engineers working in this field to state the end goals or consequences of a security exploit in simple terms. The most common results of a security breach in a vehicle includes (1) risk to personal safety, (2) financial loss, (3) exposure of personal information, and (4) operational failure of a function in the vehicle itself. Developers who simply address all known threats may not have enough resources available to meet what are typically aggressive time-to-market objectives. Therefore, the use of security breach consequences will help prioritize and mitigate those threats which are most relevant.

Commonly implemented system engineering practices, security requirements and consequent mitigations flow down to the IC level. Those mitigations may take on many different forms. An important reference for automotive security engineering is the standard “ISO/SAE DIS 21434 Road vehicles – Cybersecurity engineering.” Implementation of secure design structures such as trusted platform modules (TPM), data encryption blocks, or redundant data processing paths are just a few examples of designed-in security.

There are many best practices available to help mitigate the threats to a vehicle, but here we focus on security mitigation verification and the need for more advanced verification methods.

For decades the primary verification methods for systems and digital IC functions have been simulation-based techniques. Simulation relies on a testbench (basically a container where the design is placed and driven with different input stimulus) and input stimulus. The stimulus interacts with a digital model of the design, attempting to emulate the behavior of the real-world environment where the IC will operate. Simpler testbench methods, known as directed tests, simply rely on humans to manually define a specific sequence of test vectors to stimulate the design with a stream of inputs, meanwhile checking that the design responds correctly to that specific sequence.

The obvious limitation of this method is that it only tests for specific behaviors in the specific order and timing as defined by the human-created test vectors. More sophisticated simulation-based techniques attempt to get around this limitation by incorporating constrained-random stimulus, typically written in a more advanced testbench language such as SystemVerilog or System C. Constrained-random stimulus allows the testbench to vary the exact timing, sequence, and content of various types of transactions and input signals. Correct design behavior is typically checked against a reference model that predicts how the design should respond to the constrained random stimulus.

This procedure raises the question, “How do I know what this test bench verified?”. The answer is typically addressed through various types of coverage analysis that measure how many times different transactions were tried with what types of content. Various types of “cross coverage” can also be employed (measuring, for example, how many times a particular transaction happened while an interrupt was simultaneously received, or even just toggle coverage of a register or signal). This type of procedure is a widely used for modern IC verification.

But this methodology still falls short. It only checks the IC behaviors the testbench was written to stimulate. And it only varies the stimulus within the range it was programmed to check (and typically comes well short of exhaustive function verification). Moreover, it only measures whatever coverage aspects were manually specified. Quite simply, simulation-based verification techniques can only stimulate a finite amount of behavior. Consequently, a particular IC may be well verified against its requirements but it may misbehave when stimulated beyond the operational expectations incorporated into the test bench stimulus.

That’s a problem because many modern security breaches take advantage of an IC’s unexpected response to unexpected stimulus. An attacker’s goal is to identify stimulus behaviors that may be used to get the design to do something unexpected. In many cases, a bug or weakness in the IC design that was not caught during the verification process offers a prime opportunity for exploit. In the simplest case, the attacker can activate a bug or unwanted function in the design that launches the hardware into a vulnerable state. Examples of such vulnerabilities include a FIFO overflow or a state machine deadlock.

The attacker may also activate design circuitry that was not intended to be available for general use, such as activating early prototype logic or test-mode logic that allows access to otherwise secure areas of a device. In other cases, various weaknesses can be created simply leaving in place code needed for development but then abandoned without removal – for example leaving in extra unused states for a state machine later revised to utilize fewer states. Finally, a project engineer with nefarious intent could insert functions for future hacks, such as adding a “back door” with an address and un-lock key only that individual knows.

The only way to guard against these vulnerabilities is to verify all possible behavior across all time. Unfortunately, in modern ICs this extensive verification is inherently intractable via simulation-based techniques. It would be analogous to painting all the buildings in New York City with a single toothbrush – theoretically possible, but impossible in any real-world sense. Thus additional methods to complement simulation are much needed to address threats and vulnerability.

Benefits of formal methods

Thankfully there is a verification approach that complements simulation and is well suited to ferreting out unpredictable problems. Formal verification makes use of exhaustive mathematical algorithms to verify the functionality of the design against its requirements and has been around for decades. Because the process is exhaustive, the answer to the question, “Does this design always respond correctly?” is guaranteed to be complete – for all possible stimulus across all time. That is, if a digital hardware design can exhibit any undesirable behavior, formal methods will point them out.

On the other hand, if no such undesirable behavior exists, then formal methods will say so. When used during the design phase of a project, a hardware engineer can start verifying the design before any simulation-based test bench is available. Formal verification will create exhaustive stimulus automatically. The engineer simply must ask some common behavior questions about the design, such as, “Does my FIFO always behave properly without ever overflowing?” or, “Will this state machine always transition properly without EVER get dead-locked?” or, “Will my design ALWAYS elegantly detect and respond to ALL possible legal AND illegal instructions?”, and so forth. These types of behavior are easily verified using formal verification.

Imagine a situation where an automotive system’s memory element is protected by an ECC (Error Correction Code). The point of the ECC is to detect, and possibly correct, memory bits corrupted by electromagnetic interference. But what if an error in the memory went undetected? This condition could lead to the execution of an illegal instruction, or erroneous or erratic behavior by an autonomous system. This type of error recovery logic is difficult to thoroughly verify through traditional non-formal verification techniques because of the sheer number of possible failures combined with the possible timing of these failures. Simulation would require an intractable amount of time to check even a small minority of possible situations, so it’s easy for problems in this logic to go undetected through the hardware design and verification process. Formal verification can, however, exhaustively verify all possible functional behaviors across all time to ensure the resulting error detection and recovery logic will always respond correctly.

Imagine another situation involving the car’s braking system. Suppose an IC aggregates brake control from an autonomous on-board self-driving system, from a driver-initiated manual override, and from a system-failure fail-safe mechanism in the event of a problem. If these higher-level systems all start vying for control of the brakes, it’s imperative that the IC handles the braking system in a predictable way. However, the possible interactions of these systems are complex – Add in possible nefarious system hacking by a bad actor, or any other possible hidden or unspecified functions, and the verification task simply becomes intractable through traditional mechanisms. Simulation test vectors would not be able to realize a high degree of coverage in a tractable amount of time.

However, formal verification can exhaustively verify all the possible control interactions through properties and functional descriptions, ensuring the system will always behave predictably and reliably. During the verification process, verification engineers can use formal methods to ensure the design requirements are FULLY met, that security functions cannot be accessed through non-secure channels, that the design will always handle any unexpected input behavior, and that there are no extra functions or back doors that the probabilistic simulation-based verification might miss.

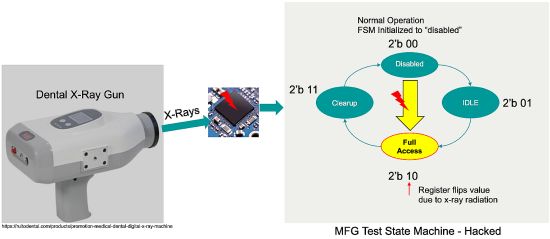

In addition, formal verification can also be used to verify the design against other types of intrusive attacks such as EMF pulses trying to force the design into an unknown state. For example, imagine a braking system contains a block of manufacture-test logic that allows full internal access, but should be flipped off and inaccessible during normal design activity. Can this logic EVER be accessed in an unexpected or inappropriate way?

A hacker could easily purchase and use a localized source of low energy x-rays, such as a small hand-held dental x-ray machine (available for as little as $500). Aimed directly at the IC, its radiation could be sufficient to cause an isolated register within the IC to flip values. Now consider an attack scenario where an IC handling test-access might be initialized to a “no access” mode at system start-up. However, a random flip of a single bit in the state-machine register could easily place the chip into an active mode (e.g. flipping a two-bit register from 00 to 10), activating the test logic – thus allowing a nefarious actor access to other restricted functions. Hardware designs can and should be built to withstand attacks like this. Formal methods can exercise all possible register upsets through fault injection techniques to ensure the design will never end up in a vulnerable state.

A hacker could easily purchase and use a localized source of low energy x-rays, such as a small hand-held dental x-ray machine (available for as little as $500). Aimed directly at the IC, its radiation could be sufficient to cause an isolated register within the IC to flip values. Now consider an attack scenario where an IC handling test-access might be initialized to a “no access” mode at system start-up. However, a random flip of a single bit in the state-machine register could easily place the chip into an active mode (e.g. flipping a two-bit register from 00 to 10), activating the test logic – thus allowing a nefarious actor access to other restricted functions. Hardware designs can and should be built to withstand attacks like this. Formal methods can exercise all possible register upsets through fault injection techniques to ensure the design will never end up in a vulnerable state.

Of course, radiation-induced bit flips such as this are not necessarily due to an attacker. They can also be faults caused by natural sources of radiation, other static discharge situations, or even electromigration within the chip itself. (For a deeper understanding of these risks, see J. Grosse, S. Marchese, “Fault Injection Made Easy,” DVCon India 2017.) The detection and ultimately correction of these faults are extremely important to both the safety and security of the automobile.

With the help of emerging standards, such as the ISO/SAE 21434, we can all better understand the challenges of cybersecurity threats. These standards and practices also help guide automotive developers to create safer and more secure cars by using secure design practices and better security verification methods. Advanced verification techniques help extend traditional practices and provide the valuable tools necessary to address the growing complexities implemented in todays ICs. The time has come to make security a top priority and embrace formal methods and other security mitigating verification techniques as a part of the vehicle development process.