Automated vehicles, also called self-driving cars or autonomous vehicles, are slowly emerging. It’s not an “all or nothing” situation. Features and technologies needed for automated driving are being developed incrementally, with some already in use. This FAQ looks at some of the technologies needed for automated driving, including various levels of “driver support features” versus “automated driving features,” sensor fusion, artificial intelligence (AI) and machine learning (ML), intra- and inter-vehicle communications, regulations, and so on.

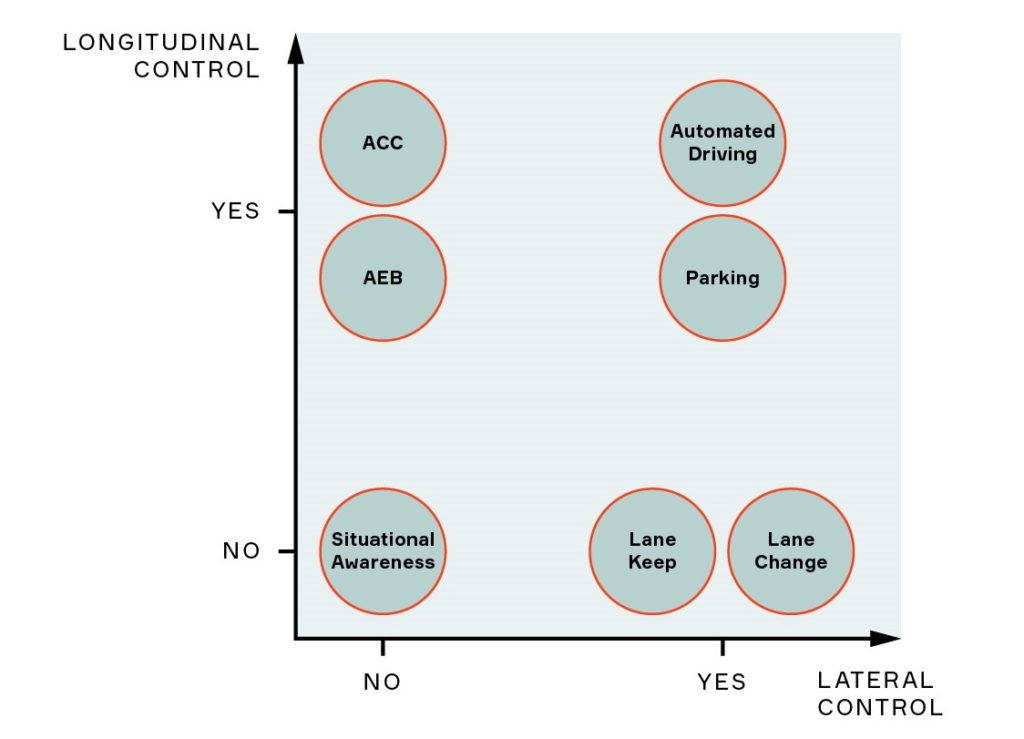

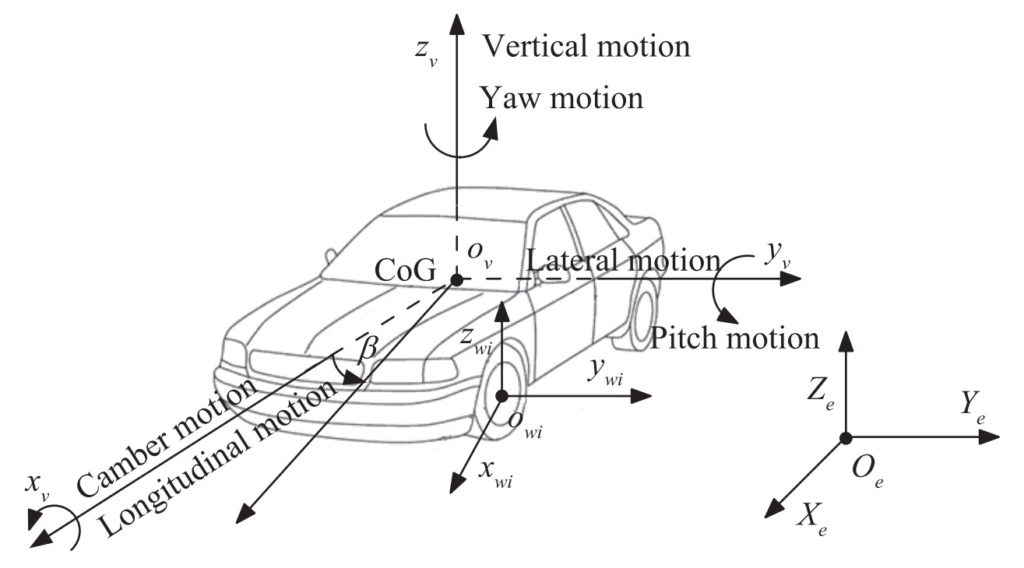

The features being developed for automated driving can be categorized by how they combine acceleration and braking (called longitudinal control) with steering (called lateral control). In addition, some features have the same or very similar functionality but divide control differently between the driver and the vehicle, so they are assigned to different levels of driving automation.

Rudimentary vehicle automation systems have been used for many years, such as anti-lock braking and traction control. These early systems are evolving into advanced driver assistance systems (ADAS) with more capabilities such as adaptive cruise control (ACC), automatic emergency braking (AEB), and emerging electronic stability program (ESP) with active front steering (AFS).

Successful implementation of ADAS and emerging automated driving systems requires accurate and timely information about the dynamic state of the vehicle and the environment. Currently, onboard sensors lack the accuracy and speed needed to support automated vehicle operation. In addition, sensors such as inertial navigation sensors (INS), multi-axis inertial motion sensors (IMS), global positioning systems (GPS), and global navigation satellite systems (GNSS) are often used together. The high cost of these sensors, the large number of them needed, and their low precision under some extreme running conditions currently preclude the development of cost-effective systems that can obtain precise and complete vehicle dynamic state information.

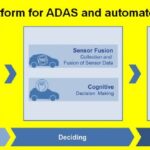

The limited availability of vehicle dynamic state information is a factor in slowing the development of more advanced ADAS features and the roll-out of automated vehicles. Work is underway to develop algorithms that can use combinations of relatively inexpensive and lower-performance sensors to provide useful levels of accuracy when estimating the vehicle’s dynamic state. Combining the inputs from multiple sensors to provide enhanced levels of information is referred to as “sensor fusion” and will be an important element in future automated vehicles.
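As a rough illustration of the idea, the sketch below fuses two noisy estimates of the same quantity with an inverse-variance weighted average. This is only the simplest static case and is not the method of any particular vehicle or of the cited survey; production systems typically use time-varying estimators such as Kalman filters. The `Estimate` class, the `fuse` function, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    """A scalar measurement and its noise variance (hypothetical type)."""
    value: float
    variance: float


def fuse(estimates: list[Estimate]) -> Estimate:
    """Inverse-variance weighted average of independent estimates.

    Assumes the measurement errors are independent and unbiased.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(e.variance <= 0 for e in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    value = sum(w * e.value for w, e in zip(weights, estimates)) / total
    # The fused variance is never larger than the smallest input variance.
    return Estimate(value=value, variance=1.0 / total)


# Illustrative numbers only: vehicle speed in m/s from two low-cost sources.
wheel_speed = Estimate(value=27.0, variance=4.0)  # noisier source
gnss_speed = Estimate(value=25.0, variance=1.0)   # more precise source
fused = fuse([wheel_speed, gnss_speed])
print(f"fused speed: {fused.value:.2f} m/s, variance: {fused.variance:.2f}")
```

The fused value lands closer to the more precise source, and the fused variance is lower than either input, which is the sense in which inexpensive sensors can be combined to give a more useful estimate.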

What are the levels of automated driving?

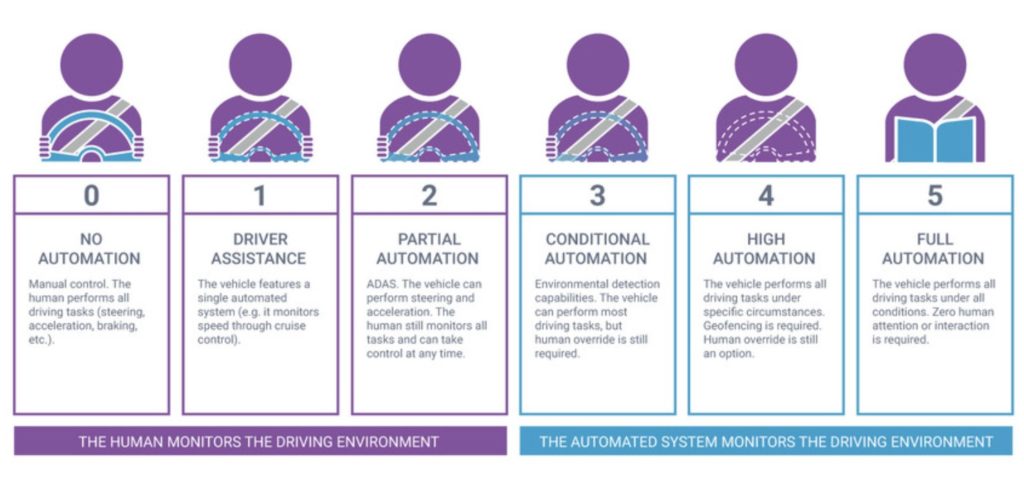

The Society of Automotive Engineers (SAE) uses a scale from zero to five to describe the various levels of automated driving. However, there are many gray areas where features might overlap. Levels 0-2 are called “driver support features” because the driver is still heavily involved with the vehicle operation, while Levels 3-5 include varying amounts of “automated driving features.”

Level 0: No Automation. The driver is solely responsible for vehicle control. There may be safety features such as backup cameras and blind-spot warnings, but the driver is in control. AEB (automatic emergency braking, which brakes hard when a collision is imminent) is classified as a Level 0 function because it does not act over a sustained period.

Level 1: Driver Assistance. The driver is still responsible for vehicle control but can take advantage of some level of automation such as ACC, which controls acceleration and braking, typically in highway driving. Functions in Level 1 do not generally combine longitudinal and lateral controls.

Level 2: Partial Automation. Level 2 automation functions are more complex and combine lateral control (such as steering) with longitudinal control (such as acceleration and braking). Level 2 vehicles have much more complex sensor systems, but the driver is still ultimately responsible for vehicle operation.

Level 3: Conditional Automation. The driver can disengage from driving the vehicle, but only under defined circumstances such as limited ranges of vehicle speed and specific road and weather conditions. It is called conditional automation because the vehicle can drive without the driver only while those specific conditions hold. This is the first real step toward fully automated vehicles. However, if the conditions that enable Level 3 change, the driver is expected to be alert enough to take control of the vehicle. In addition, the vehicle is expected to be aware of whether or not the driver has resumed control. If the driver does not assume control, Level 3 automation is expected to bring the vehicle to a safe stop.

Level 4: High Automation. Level 4 automation requires the definition of a specific operational design domain (ODD). As long as conditions remain in the ODD, the autonomous systems are fully capable of controlling all driving functions. The vehicle is expected to alert the driver if conditions are approaching the limits of the ODD. As in the case of Level 3, if the driver does not respond and take control of the vehicle, Level 4 automation is expected to bring the vehicle into a safe condition, such as a complete stop.

Level 5: Full Automation. Level 5 automation eliminates the need for a driver, and the vehicle may not have any driver controls (steering wheel, gas pedal, or brake pedal).
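The level descriptions above can be read as a small decision procedure. The sketch below is one simplified reading, not an official SAE classification tool: the `Feature` fields and the `sae_level` function are hypothetical, and the split between Levels 3 and 4 assumes that a Level 3 feature relies on the driver to respond to a takeover request while a Level 4 feature does not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    """Hypothetical description of a driving feature."""
    sustained_longitudinal: bool  # sustained acceleration/braking control
    sustained_lateral: bool       # sustained steering control
    driver_must_supervise: bool   # driver monitors the road at all times
    driver_is_fallback: bool      # feature relies on a driver takeover
    limited_odd: bool             # works only inside a defined ODD


def sae_level(f: Feature) -> int:
    """Map a feature description to a level from 0 to 5 (simplified)."""
    both = f.sustained_longitudinal and f.sustained_lateral
    either = f.sustained_longitudinal or f.sustained_lateral
    if not either:
        return 0  # warnings or momentary interventions only
    if not both:
        return 1  # one axis of sustained control
    if f.driver_must_supervise:
        return 2  # both axes, driver still supervising
    if f.driver_is_fallback:
        return 3  # driver may disengage but must respond to requests
    return 4 if f.limited_odd else 5


# Illustrative examples based on the features named in the text.
aeb = Feature(False, False, True, True, False)
acc = Feature(True, False, True, True, False)
acc_with_lane_centering = Feature(True, True, True, True, True)

for name, feature in [("AEB", aeb), ("ACC", acc),
                      ("ACC + lane centering", acc_with_lane_centering)]:
    print(f"{name}: Level {sae_level(feature)}")
```

Note that AEB maps to Level 0 here because it is modeled as having no sustained control on either axis, matching the reasoning given for Level 0.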

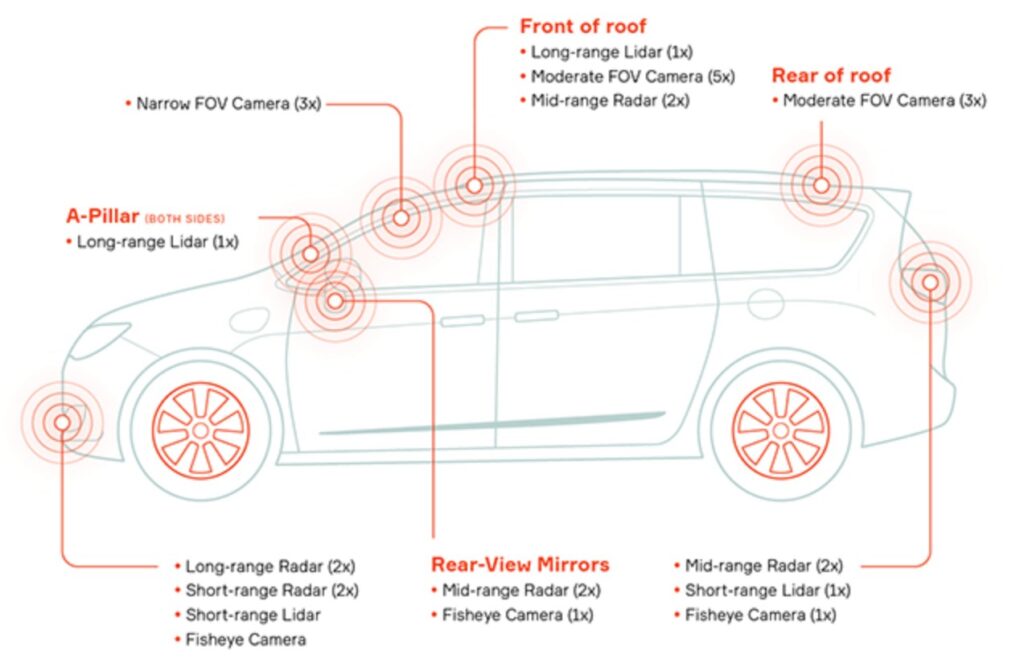

Increasing levels of automation require more and more sensors and more layers of sensors. A Level 1 vehicle may have a single camera, while a Level 5 vehicle will include a comprehensive and multi-layered sensor system to enable it to travel safely through any environment.

These SAE levels are occasionally supplemented as the technologies roll out in an uneven and dynamic manner based on marketing considerations. For example:

Level 2+: Advanced Partial Automation. This tends to be more of a marketing distinction. With Level 2+, the driver can take their hands off the wheel and glance away from the road for a moment, but not fully disengage from the responsibility for driving the vehicle. Level 2+ functions tend to have focused and very limited ODDs. Traffic jam assist (TJA) is sometimes referred to as a Level 2+ system. TJA combines adaptive cruise control and lane-centering capabilities to ease the burden of getting through clogged highways and traffic congestion. There is strong consumer interest in opportunities for drivers to take their hands off the wheel and glance away from the road ahead for a few moments, even if under very specific and limited conditions.

Level 2+ can integrate lane centering control, adaptive cruise control, and other features to allow drivers to briefly take their hands off the wheel and feet off the pedals in certain situations. Automakers see Level 2+ as an important stepping stone toward higher levels of automation. These semi-autonomous systems help drivers gain experience and confidence in automated vehicle control. The vehicle temporarily assumes control, but the driver can intervene and always remains responsible for monitoring the surroundings.
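To make the idea of a focused and very limited ODD concrete, the sketch below gates a traffic-jam-assist style feature on a handful of conditions. Every field name and the 60 km/h threshold are assumptions chosen for illustration; they do not describe any automaker's actual system.

```python
from dataclasses import dataclass

# Assumed upper speed limit for the feature, for illustration only.
TJA_MAX_SPEED_KMH = 60.0


@dataclass(frozen=True)
class VehicleState:
    """Hypothetical snapshot of the inputs a TJA-style gate might check."""
    speed_kmh: float
    on_divided_highway: bool
    lane_markings_detected: bool
    lead_vehicle_detected: bool
    driver_attentive: bool


def tja_available(s: VehicleState) -> bool:
    """Return True only while every ODD condition holds."""
    return (
        0.0 <= s.speed_kmh <= TJA_MAX_SPEED_KMH
        and s.on_divided_highway
        and s.lane_markings_detected
        and s.lead_vehicle_detected
        and s.driver_attentive
    )


congested = VehicleState(25.0, True, True, True, True)
free_flowing = VehicleState(110.0, True, True, True, True)
print(tja_available(congested))     # inside the assumed ODD
print(tja_available(free_flowing))  # speed is outside the assumed ODD
```

The driver-attentiveness condition reflects the point above that the driver remains responsible for monitoring the surroundings even while the vehicle temporarily assumes control.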

Sensors and data dominate

Development of automated vehicles is still waiting for cost-effective and accurate sensors and sensor systems. Sensor fusion will provide a partial answer to this need, but it will take more. Vehicle-to-vehicle communication will let vehicles share sensor and dynamic state information and extend a vehicle’s “awareness” of its surroundings beyond today’s limit of about 250 meters. Vehicle-to-infrastructure communication will add information about traffic congestion, traffic lights and signals, and changing speed limits. Vehicle-to-everything (V2X) communication will add data streams, including access to machine learning from beyond the immediate area, and will be needed to support full automation.
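A quick calculation shows why a range of about 250 meters is limiting. The sketch below converts that range into the time available before reaching an object at a given closing speed. The 250 m figure comes from the text; the function name and the two speeds are assumptions for illustration.

```python
def lookahead_seconds(range_m: float, closing_speed_kmh: float) -> float:
    """Time until an object at range_m is reached at a constant closing speed."""
    if closing_speed_kmh <= 0:
        raise ValueError("closing speed must be positive")
    closing_speed_ms = closing_speed_kmh / 3.6  # km/h to m/s
    return range_m / closing_speed_ms


SENSOR_RANGE_M = 250.0  # approximate awareness limit quoted in the text

# Assumed scenarios: a stationary obstacle approached at 120 km/h, and an
# oncoming vehicle where both vehicles travel at 100 km/h.
stationary = lookahead_seconds(SENSOR_RANGE_M, 120.0)
oncoming = lookahead_seconds(SENSOR_RANGE_M, 200.0)
print(f"stationary obstacle: about {stationary:.1f} s")
print(f"oncoming vehicle: about {oncoming:.1f} s")
```

Under these assumptions the vehicle has roughly 7.5 seconds for the stationary obstacle and roughly 4.5 seconds for the oncoming vehicle, which helps explain the interest in sharing information between vehicles and infrastructure.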

AI and ML will be used in an automated vehicle to process the very large and changing volumes of sensor data and make decisions in real time. These algorithms will identify and classify the objects detected by the sensors. ML will be important in this process, which will combine limited ML processing on the vehicle with more comprehensive ML processing in the cloud. There are various efforts underway to validate the safety of AI and ML algorithms and the sensor systems that will be used in automated vehicles. Finally, regulations need to be developed to create an environment that allows the use of automated vehicles. The following is a very limited sample of those activities:

The mission of the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems is “To ensure every stakeholder involved in the design and development of autonomous and intelligent systems is educated, trained, and empowered to prioritize ethical considerations so that these technologies are advanced for the benefit of humanity.”

ISO/TR 9241-810:2020, “Ergonomics of human-system interaction – Part 810: Robotic, intelligent and autonomous systems,” addresses, among other considerations, physically embodied robotic, intelligent and autonomous (RIA) systems, such as robots and autonomous vehicles with which users will physically interact.

UL 4600, “Standard for Safety for the Evaluation of Autonomous Products,” is focused on setting up a safety case for autonomous systems and providing a framework for its analysis.

ISO/PAS 21448, “Road vehicles – Safety of the intended functionality,” was developed for the automotive sector and addresses the limits of meaningful usability of algorithms and sensor systems. It considers new classes of errors and functional deficiencies.

Summary

It’s still early days for the development of automated vehicles. The industry has traveled through the first three levels (Levels 0 through 2), up to partial automation, and is expanding capabilities into Level 3 systems and conditional automation. A lot of work is still needed to improve the cost/performance of various sensor technologies. AI and ML algorithms need development and proving for safe and reliable performance. Vehicle awareness of the surroundings needs to extend well beyond today’s limit of about 250 meters. A wide variety of international standardization initiatives and technology development efforts are currently underway to make automated vehicles a reality.

References

IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

ISO/PAS 21448:2019 Road vehicles — Safety of the intended functionality

ISO/TR 9241-810:2020, Ergonomics of human-system interaction — Part 810: Robotic, intelligent and autonomous systems

UL 4600: Standard for Safety for the Evaluation of Autonomous Products

Vehicle Dynamic State Estimation: State of the Art Schemes and Perspectives, IEEE/CAA Journal of Automatica Sinica

What Are the Levels of Automated Driving?, Aptiv

What is an Autonomous Car?, Synopsys
