One of the hottest tech trends is artificial intelligence (AI) and its applications. Many sectors are using AI to solve problems and improve productivity. For instance, a school recently deployed a virtual telephone assistant, also known as a conversational agent, to help students with questions about which classes to take. Students were happy with the answers, and some were completely unaware that they were talking to a machine.

Beyond education, AI is being applied to supply chain, transportation, natural language processing, healthcare, factories, security, object detection, image processing, farming, entertainment, and much more. AI has also made a marked difference in the audio/video field. A California-based start-up, BabbleLabs, has applied AI running on graphics processing unit (GPU) hardware to improve noise cancellation. The results are remarkable: you can hear the difference before and after AI is deployed.

Designing an AI product

An AI product integrates AI algorithms with hardware capable of running them. AI workloads are computation-intensive and require fast processing and fast access to memory.

“AI can demand a great deal of computational power from a chip. It is one matter to process one to two frames per second for a facial recognition application, something which can easily be done on an MCU. It’s a totally different matter to process HD video streaming at 1024×1024, where the processor must process 50 frames a second,” commented Markus Levy, Director of AI and Machine Learning Technologies, NXP Semiconductors.
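To put rough numbers on the quote, the following Python sketch works out the raw pixel throughput of a 1024×1024 stream at 50 frames per second and the time available to process each frame. The resolution and frame rates come from the quote; the 3 bytes per pixel (uncompressed 8-bit RGB) is an assumption added for illustration, and the comparison assumes the low-rate case uses the same frame size, which the quote does not state.

```python
# Back-of-the-envelope workload estimate for the streaming case in the quote.
WIDTH, HEIGHT = 1024, 1024   # resolution from the quote
STREAM_FPS = 50              # frame rate from the quote
BYTES_PER_PIXEL = 3          # assumption: uncompressed 8-bit RGB


def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Raw pixel throughput for a given frame size and frame rate."""
    return width * height * fps


stream_pixels = pixels_per_second(WIDTH, HEIGHT, STREAM_FPS)
stream_bytes = stream_pixels * BYTES_PER_PIXEL

# Time the processor has to finish one frame before the next one arrives.
frame_budget_ms = 1000 / STREAM_FPS

# Throughput ratio versus the 1 to 2 frames-per-second case,
# assuming (hypothetically) the same frame size in both cases.
ratio_vs_2fps = STREAM_FPS / 2
ratio_vs_1fps = STREAM_FPS / 1

print(f"Pixel throughput: {stream_pixels:,.0f} pixels/s")
print(f"Raw data rate:    {stream_bytes / 1e6:.1f} MB/s")
print(f"Per-frame budget: {frame_budget_ms:.1f} ms")
print(f"Load vs. 1-2 fps: {ratio_vs_2fps:.0f}x to {ratio_vs_1fps:.0f}x")
```

Under these assumptions the stream carries about 52 million pixels per second and leaves 20 ms per frame, which is 25 to 50 times the load of the one-to-two-frames-per-second case.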

There are three approaches to designing an AI product:

- Using an all-in-one GPU module

- Taking the AI chipset approach

- Just add the AI accelerators to your own host processor

Using an all-in-one GPU module

The NVIDIA CUDA-X AI computer measures 70mm x 45mm and delivers 472 GFLOPS of compute performance, enough to handle many AI and deep learning workloads. Built around a 128-core NVIDIA Maxwell GPU and a quad-core Arm Cortex-A57 CPU, the module consumes 5 watts of power.

Additionally, it provides 16 GB of eMMC 5.1 flash storage, Gigabit Ethernet, and video, camera, and HDMI display interfaces. Perhaps its most attractive feature is the library of software acceleration code that makes development work easier. Developers using this approach can integrate the module into their own systems running Linux for Tegra.
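As a rough illustration of what the quoted module figures imply, this Python sketch derives compute per watt and a per-frame compute budget from the 472 GFLOPS and 5 watt numbers above. The 50 frames-per-second target is borrowed from the streaming example quoted earlier and is an assumption, not a module specification. These are peak figures, so sustained throughput on a real model will be lower.

```python
# Derived figures from the module numbers quoted in the article.
PEAK_GFLOPS = 472.0   # peak compute, from the article
POWER_WATTS = 5.0     # power draw, from the article
TARGET_FPS = 50       # assumption: frame rate from the earlier streaming example

# Peak compute delivered per watt of power.
gflops_per_watt = PEAK_GFLOPS / POWER_WATTS

# Upper bound on the floating-point work available for each frame
# if the whole peak budget were spent on a single video stream.
gflop_per_frame = PEAK_GFLOPS / TARGET_FPS

print(f"Peak efficiency:  {gflops_per_watt:.1f} GFLOPS/W")
print(f"Per-frame budget: {gflop_per_frame:.2f} GFLOP at {TARGET_FPS} fps")
```

A model that needs more than this per-frame budget cannot keep up with the stream at that frame rate, even in the best case.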

Taking the AI chipset approach

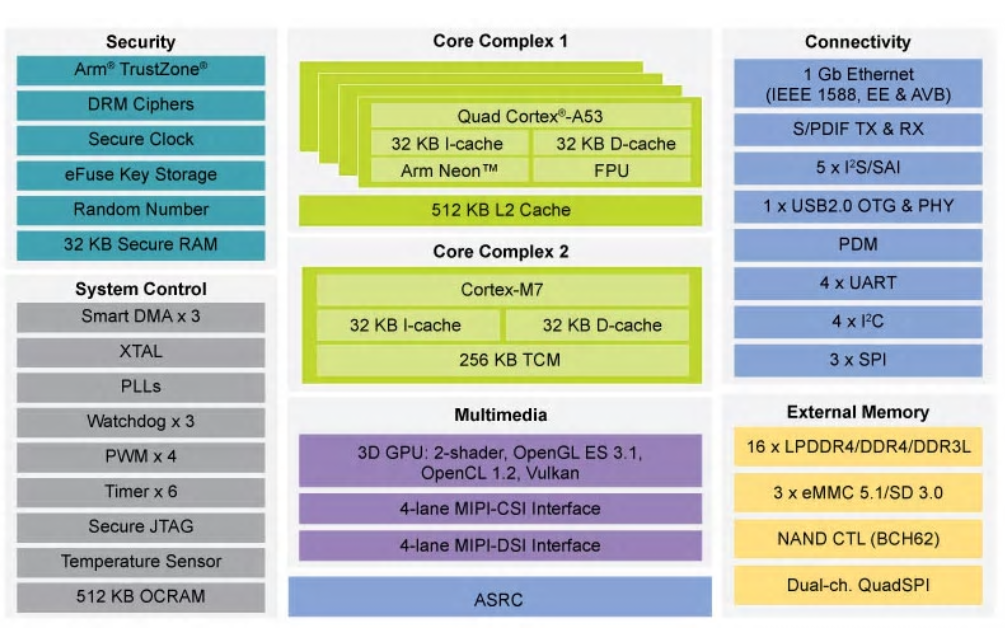

Not everyone needs a muscle car when it comes to driving. Similarly, in AI design, plenty of applications need a low-cost, scalable AI chip. That is where NXP’s i.MX 8M Nano, which combines Arm Cortex-A53 and Cortex-M7 cores, would be a good fit. Built on a 14LPC FinFET process, this chip brings AI functions to many industrial and IoT applications. Uses include smart home and building automation, machine learning (e.g., face recognition and anomaly detection), test and measurement, human-machine interface (HMI), health care diagnostics, health care monitoring, and other medical devices.

For machine vision applications, the 3D GPU, which supports OpenGL ES 3.1 and Vulkan, can drive a graphical UI, or it can be used with OpenCL alongside all four Cortex-A53 cores to accelerate machine learning. The 14mm x 14mm package with 0.5mm pitch supports a 6-8 layer board design. Operating systems available are Android, Linux, and FreeRTOS. The unit operates from -40 °C to 105 °C. Additionally, a software library is available for AI-specific applications.

Just add the AI accelerators to your own host processor

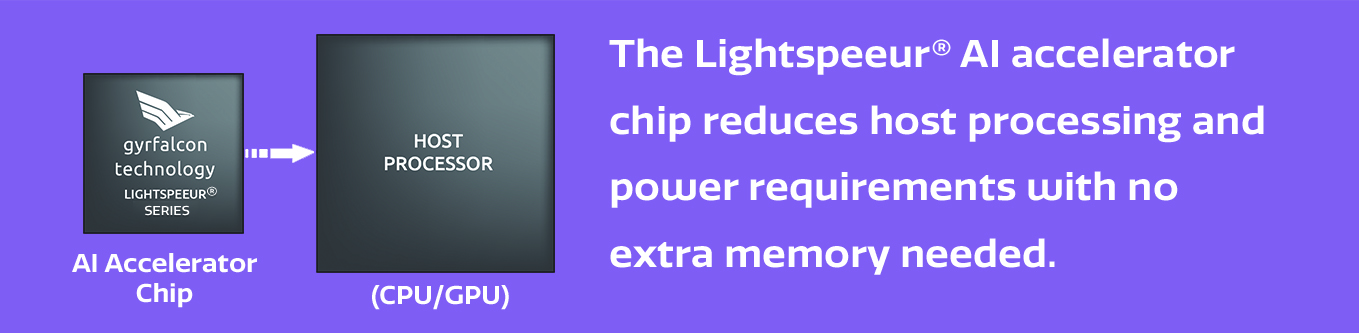

Taking a different approach, Gyrfalcon Technology, founded in 2017 in California, offers three production-level AI accelerator chips built on the company’s proprietary matrix processing engine. The engine combines processing with memory and augments the host processor for audio and video processing from edge to cloud. The Lightspeeur 2801S measures 7mm x 7mm, delivers 2.8 TOPS at 0.3 watts, and is ideal for edge AI applications.

There is also the Lightspeeur 2803S, which measures 9mm x 9mm and has been optimized for advanced edge or data center applications. It can be used in single-chip designs or combined in multi-chip configurations for data center applications. For example, a GAINBOARD 2803 PCIe card carries 16 of these chips and delivers up to 270 TOPS while consuming 28 watts in server applications. Additionally, the Lightspeeur 2802M is the first AI accelerator to combine magnetoresistive random access memory (MRAM) technology with the company’s matrix processing design to form an MRAM AI engine. This can be used in video security monitoring, autonomous vehicles, robotics, and other AI applications. Gyrfalcon’s approach helps developers increase AI computation power without replacing host processors (CPU, GPU, etc.).
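The accelerator figures quoted in this section can be compared on a performance-per-watt basis. The Python sketch below does that arithmetic using only the TOPS and wattage numbers from the text; the `Accelerator` class and its field names are hypothetical helpers introduced for illustration. The per-chip values for the GAINBOARD are simply the card totals divided by 16, not datasheet figures for an individual chip.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Hypothetical record holding the figures quoted in the article."""
    name: str
    tops: float      # peak tera-operations per second
    watts: float     # power consumption
    chips: int = 1   # number of accelerator chips in the product

    @property
    def tops_per_watt(self) -> float:
        return self.tops / self.watts

    @property
    def tops_per_chip(self) -> float:
        return self.tops / self.chips

    @property
    def watts_per_chip(self) -> float:
        return self.watts / self.chips


parts = [
    Accelerator("Lightspeeur 2801S", tops=2.8, watts=0.3),
    Accelerator("GAINBOARD 2803 (16 chips)", tops=270.0, watts=28.0, chips=16),
]

for part in parts:
    print(
        f"{part.name}: {part.tops_per_watt:.1f} TOPS/W, "
        f"{part.tops_per_chip:.2f} TOPS and {part.watts_per_chip:.2f} W per chip"
    )
```

On these numbers both products land between 9 and 10 TOPS per watt. TOPS counts operations that are typically low-precision integer, so the result is not directly comparable with a GFLOPS rating.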

Conclusion

Each of the three approaches described here offers a different way to design for AI. With the NVIDIA module, priced at less than $100, developers can integrate a ready-made unit into an OEM system. With the NXP AI chip, priced at under $10, developers have more flexibility but also more PCB development and layout work. Finally, the Gyrfalcon accelerator approach means adding another chip alongside the existing processor, which comes in handy for developers with legacy designs already in place. Now you can take your pick.
