Special test programs hope to help robotic systems make better decisions in short order.

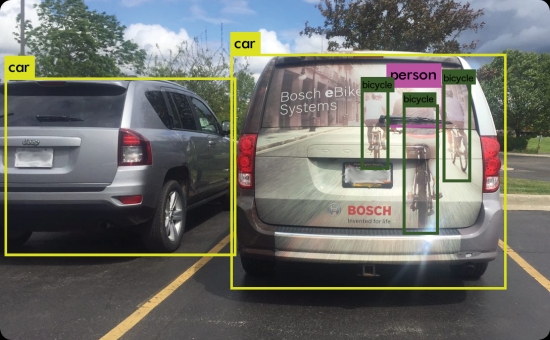

Here’s a riddle: When is an SUV a bicycle? Answer: When it is a picture of a bicycle that is painted on the back of an SUV, and the thing looking at it is an autonomous vehicle.

Edge cases like this one are the reason the Rand Corp. reported in 2016 that autonomous cars would need to be tested over 11 billion miles to prove that they’re better at driving than humans. With a fleet of 100 cars running 24 hours a day, that would take 500 years, Rand researchers say.
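The 500-year figure is easy to sanity-check with a little arithmetic. The sketch below assumes the fleet averages 25 mph, a number that does not appear in the text above; the function name is purely illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # cars run around the clock, every day


def years_to_drive(total_miles, fleet_size, avg_speed_mph):
    """Years a fleet needs to log total_miles when driving nonstop."""
    miles_per_year = fleet_size * HOURS_PER_YEAR * avg_speed_mph
    return total_miles / miles_per_year


# ASSUMPTION: 25 mph average speed (not stated in the article text).
years = years_to_drive(total_miles=11e9, fleet_size=100, avg_speed_mph=25)
print(f"about {years:.0f} years")
```

Under that assumption the result lands close to the 500 years quoted; a faster average speed or a bigger fleet shrinks the number proportionally.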

It’s not just scenes painted on the back of vehicles that throw autonomous vehicles for a loop. “There are a lot of edge cases,” says Danny Atsmon, the CEO of autonomous vehicle simulation firm Cognata Ltd. “The classic example is that of driving at night after a rain. The pavement can be like a mirror, so you see a car and its reflection. Autonomous systems can interpret the scene as two different cars.”

Cognata, based in Israel, has a lot of experience with edge cases because it builds software simulators in which automakers can test autonomous-driving algorithms. The simulators allow developers to inject edge cases into driving simulations until the software can work out how to deal with them. This all happens in the lab without risking an accident.

“It can take months to hit an edge-case scenario in real road tests. In a simulation that’s not a problem,” says Atsmon.

Simulations like those that Cognata devises are also helpful because of the way autonomous systems recognize situations unfolding around them. Traditional object recognition techniques such as edge detection may be used to classify features such as lane dividers or road signs. But machine learning is the approach used to make decisions about what the vehicle sees.

To help developers of automated vehicle systems, Cognata recreates real cities such as San Francisco in 3D. Then it layers in data such as traffic models from different cities to help gauge how vehicles drive and react. The simulations are detailed enough to factor in differences in driving habits of people in different cities, Atsmon says. The third layer of Cognata’s simulation is the emulation of the 40 or so sensors typically found on autonomous vehicles, including cameras, lidar and GPS. Cognata simulations run on computers that the auto manufacturer or Tier One supplier provides.

Sensor emulation is particularly important because autonomous cars overcome issues such as baffling images by fusing together information gathered from different types of sensing. Just as cameras can be fooled by images, lidar can’t sense glass and radar senses mainly metal, explains Atsmon. Autonomous systems learn to deal with complex situations by gradually figuring out which data can be used to correctly deal with particular edge cases.
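One way to picture the idea of cross-checking sensors is a simple voting rule: an object counts as real only if more than one type of sensor reports something at roughly the same spot. The sketch below is a deliberately crude illustration, not how Cognata or any automaker does it. The `Detection` type, the shared coordinate frame, and the distance and vote thresholds are all assumptions, and production systems rely on probabilistic tracking and learned models rather than a fixed rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    sensor: str   # "camera", "lidar" or "radar" (hypothetical labels)
    label: str    # what the sensor pipeline thinks it saw
    x_m: float    # lateral position in a shared vehicle frame (assumed)
    y_m: float    # distance ahead in the same frame (assumed)


def confirmed_objects(detections, max_gap_m=1.5, min_sensors=2):
    """Group nearby detections; keep groups seen by enough sensor types."""
    groups = []
    for det in detections:
        for group in groups:
            anchor = group[0]
            close_x = abs(det.x_m - anchor.x_m) <= max_gap_m
            close_y = abs(det.y_m - anchor.y_m) <= max_gap_m
            if close_x and close_y:
                group.append(det)
                break
        else:
            # No existing group is close enough, so start a new one.
            groups.append([det])
    return [g for g in groups if len({d.sensor for d in g}) >= min_sensors]


# The camera reports two cars (a real one plus a mirror-like reflection),
# while radar and lidar each report only one object.
scene = [
    Detection("camera", "car", 0.0, 20.0),
    Detection("camera", "car", 0.0, 26.0),
    Detection("radar", "car", 0.2, 20.3),
    Detection("lidar", "car", -0.1, 19.8),
]
real = confirmed_objects(scene)
print(len(real), "confirmed object(s)")
```

In this toy scene the camera-only "car" is dropped because no other sensor backs it up. The same rule would wrongly discard a glass object that only the camera can see, which is one reason fixed rules give way to learned behavior.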

The “obvious” answer is to have the image recognition software consider the “speed” of the object it “thinks” it is seeing. For example, the RV with the “building” is actually travelling at the speed of the rest of the traffic. A building moving at traffic speed is NOT a building. The same can be said for the two bikes pictured on the back of the other van. Another check could be to evaluate the position of the “building” relative to the edges of the truck. Again, a moving building is not a “real” building.
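The speed check described above could look something like the following sketch. The set of static labels, the tolerance, and the function name are all made up for illustration, and the sketch assumes some other part of the system already supplies the object’s speed over the ground.

```python
# Hypothetical labels for things that should never move over the ground.
STATIC_LABELS = {"building", "tree", "road sign"}


def plausible_label(label, ground_speed_mps, tolerance_mps=0.5):
    """Return False when a 'static' label is attached to a moving object."""
    if label in STATIC_LABELS and abs(ground_speed_mps) > tolerance_mps:
        return False
    return True


# A "building" keeping pace with traffic at 25 m/s fails the check.
print(plausible_label("building", 25.0))
# A parked "building" and a moving car both pass.
print(plausible_label("building", 0.0))
print(plausible_label("car", 25.0))
```

A rule like this only catches labels that can never move. It says nothing about a pictured bicycle, which is a legitimate moving object.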

OK, so now let’s talk about the reflection on wet pavement of the car in front of you. The reflection moves at the same speed as the real object. So much for considering the speed of the object the software thinks it is seeing. Situations like this show why everybody in the autonomous vehicle community is preaching sensor fusion and machine learning. Otherwise there are about a million special cases you have to think about in advance in order to avoid confusion.