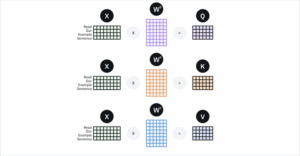

The Transformer is a neural network (NN) architecture that excels at processing sequential data by weighing the importance of different parts of the input sequence. This allows it to capture long-range dependencies and context more effectively than previous architectures, leading to superior performance in natural language processing (NLP) tasks like translation and in computer […]
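To make "weighing the importance of different parts of the input sequence" concrete, here is a minimal sketch of scaled dot-product attention, the weighting step usually associated with Transformers. The text above does not name this mechanism, so treat that choice as an assumption. The function names (`softmax`, `attention`) and the toy token vectors are hypothetical and chosen only for illustration, and the sketch leaves out the learned projections, multiple heads, and positional information that a real model would use.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy sequence of three 2-dimensional token vectors (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` shows how strongly one position attends to every position in the sequence, including distant ones. Because every position is compared with every other position directly, the distance between two tokens does not limit how much they can influence each other, which is one way to read the claim about long-range dependencies.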
