by John A. Carbone

Real-time embedded systems are expected to perform with predictable, real-time responsiveness. But in some cases, the functionality that enables that responsiveness can actually bloat the system and challenge its ability to perform as needed. With preemption-threshold scheduling, developers can reduce preemption overhead, while still enabling applications to meet real-time deadlines.

The importance of threads

Before addressing preemption-threshold scheduling, it helps to understand some basic RTOS features. An RTOS is system software that provides services to applications and manages processor resources such as processor cycles, memory, peripherals, and interrupts. The RTOS allocates processing time among the various duties the application must perform by dividing the software into pieces, commonly called tasks or threads, and creating a run-time environment that provides each thread with its own virtual microprocessor (“multithreading”). Each virtual microprocessor consists of a virtual set of microprocessor resources, such as a register set, a program counter, a stack memory area, and a stack pointer. Only the executing thread uses the physical microprocessor resources, but each thread operates as if it were manipulating its own private resources (the thread’s “context”).

To gain real-time responsiveness, the RTOS controls thread execution. Each thread is given a priority by the designer, to control which thread should run if more than one is ready and not blocked. When a higher-priority thread (compared to the running thread) needs to execute, the RTOS saves the currently running thread’s context to memory and restores the context of the new high-priority thread. The process of swapping the execution of threads is commonly called context switching.

This transfer of control to another thread is a fundamental benefit of an RTOS. Instead of embedding processor-allocation logic inside the application software, it’s done externally by the RTOS. This arrangement isolates the processor-allocation logic and makes it much easier to predict and adjust the run-time behavior of the embedded device.

In order to provide real-time responsiveness, an RTOS must offer preemption, which lets the application switch to a higher-priority thread immediately and transparently, without waiting for a lower-priority thread to complete. (An operating environment that doesn’t support preemption is effectively just another variation on the legacy polling-loop technique found in simple, unconnected devices.) Preemptive scheduling guarantees that critical threads get immediate attention so they can meet their real-time deadlines. But when preemptions are frequent, preemptive scheduling can incur significant context-switch overhead, which wastes processor cycles and undermines real-time responsiveness.

The impact of context switching

Threads can have a number of states:

- READY – the thread is ready to run, but is not currently executing instructions

- RUNNING – the thread is executing instructions

- SUSPENDED – the thread is waiting for something such as a message in a queue, a semaphore, a timer to expire, etc.

- TERMINATED – the thread has completed its processing and is not eligible to run

Threads are assigned priorities, which indicate their relative importance and the order in which they will get access to the CPU if they were all READY to run. Priorities are generally integer values from 0 to N; depending on the RTOS, 0 is either the highest or the lowest priority. Each thread is assigned a priority, and the priority can be changed dynamically. Multiple threads can share the same priority, or each can be assigned a unique priority.
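The states and priority rules above can be modeled with a small data structure. The following is a minimal sketch, not the data structure of any particular RTOS: the `thread_state` enum, the `tcb` struct, and `pick_next()` are hypothetical names, and the code assumes the 0-high convention (0 is the highest priority). It scans a thread table and returns the highest-priority thread that is eligible to run.

```c
#include <stddef.h>

/* Hypothetical thread states, mirroring the list above. */
typedef enum {
    THREAD_READY,       /* able to run, waiting for the CPU */
    THREAD_RUNNING,     /* currently executing */
    THREAD_SUSPENDED,   /* waiting on a queue, semaphore, timer, ... */
    THREAD_TERMINATED   /* finished; never scheduled again */
} thread_state;

/* Hypothetical thread control block, for illustration only. */
typedef struct {
    const char  *name;
    unsigned     priority;  /* assumption: 0 is the highest priority */
    thread_state state;
} tcb;

/* Return the index of the highest-priority thread that is READY or RUNNING,
   or -1 if no thread can run. On a tie, the lower index wins. */
int pick_next(const tcb *threads, size_t count)
{
    int best = -1;
    for (size_t i = 0; i < count; i++) {
        thread_state s = threads[i].state;
        if (s != THREAD_READY && s != THREAD_RUNNING)
            continue;   /* suspended and terminated threads are not eligible */
        if (best < 0 || threads[i].priority < threads[best].priority)
            best = (int)i;
    }
    return best;
}
```

A SUSPENDED thread is skipped no matter how high its priority is; once whatever it is waiting for arrives and its state becomes READY, the same scan selects it.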

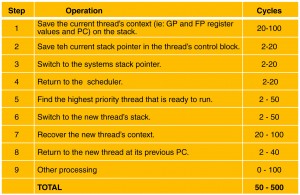

When one thread is executing and a different thread of higher priority becomes READY to run, the RTOS preempts the running thread and replaces it with the higher-priority thread using a context switch. In a context switch, the RTOS saves the context of the executing thread on that thread’s stack, then retrieves the context of the new thread and loads it into the CPU registers and program counter, so the CPU continues executing the new thread where it last left off. (See Table 1.)
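The save-then-restore order of a context switch can be simulated in portable C. This is a hedged sketch only: real RTOSes implement the switch in processor-specific assembly and typically push registers onto the thread's stack, whereas here a hypothetical `cpu_context` struct and a global `cpu` variable stand in for the physical register file, and `context_switch()` is an illustrative name.

```c
#include <stdint.h>
#include <string.h>

#define NUM_REGS 8   /* hypothetical general-purpose register count */

/* One thread's "virtual microprocessor": the registers it owns. */
typedef struct {
    uint32_t regs[NUM_REGS];
    uint32_t pc;   /* program counter */
    uint32_t sp;   /* stack pointer */
} cpu_context;

/* Stands in for the physical CPU's registers in this simulation. */
static cpu_context cpu;

/* Save the CPU state into the outgoing thread's context, then load the
   incoming thread's saved context into the CPU. */
void context_switch(cpu_context *outgoing, const cpu_context *incoming)
{
    memcpy(outgoing, &cpu, sizeof cpu);   /* save the preempted thread */
    memcpy(&cpu, incoming, sizeof cpu);   /* restore the new thread */
}
```

Because the outgoing state is saved in full, a later `context_switch()` in the other direction resumes the first thread with exactly the register values it had when it was preempted.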

The context-switch operation is reasonably complex and can take from 50 to 500 cycles, depending on the RTOS and the processor. Care must therefore be taken both to optimize context-switch operations and to minimize how often they are needed. Reducing the number of context switches is the goal of preemption-threshold scheduling.
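To see why the per-switch cycle count matters, the fraction of CPU time lost to switching is (cycles per switch × switches per second) / clock rate. The helper below is a hypothetical illustration of that arithmetic; the switch rate and clock speed in the usage note are assumed values, not measurements of any RTOS.

```c
/* Fraction of CPU time (0.0 to 1.0) spent context switching, given the
   cost of one switch in cycles, the switch rate, and the clock rate in Hz. */
double switch_overhead(double cycles_per_switch, double switches_per_sec, double clock_hz)
{
    return cycles_per_switch * switches_per_sec / clock_hz;
}
```

For example, at an assumed 10,000 context switches per second on a 100 MHz processor, 500-cycle switches consume 5% of all cycles (`switch_overhead(500, 10000, 100e6)` returns 0.05), while 50-cycle switches consume 0.5%. Every switch that is avoided saves its full per-switch cost.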

Scheduling and loops

Applications that don’t use an RTOS but consist of more than one operation or function (essentially a task or thread) must provide a mechanism for running whichever function needs to run. A simple sequential loop might be used, or a more sophisticated loop that checks status to determine whether each function has work to do, skipping those that don’t and running those that do. These loops are forms of schedulers, but they tend to be inefficient and unresponsive, especially as the number of threads or functions grows. In contrast, an RTOS scheduler keeps track of which activity to run at any point in time.
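A status-checking loop of the kind described above might look like the following minimal sketch. The `loop_task` struct, `big_loop_pass()`, and the two example tasks are hypothetical. Each `run()` executes to completion, so a task with urgent work must wait until every task ahead of it in the loop has been checked and, if it has work, run.

```c
#include <stdbool.h>
#include <stddef.h>

/* One unit of work in a hypothetical polling-loop ("Big Loop") application. */
typedef struct {
    bool (*has_work)(void);  /* status check: is there anything to do? */
    void (*run)(void);       /* runs to completion; nothing can preempt it */
} loop_task;

/* One pass of the loop: check each task in fixed order and run those with
   work. Returns how many tasks ran. A real Big Loop wraps this in for (;;). */
size_t big_loop_pass(const loop_task *tasks, size_t count)
{
    size_t ran = 0;
    for (size_t i = 0; i < count; i++) {
        if (tasks[i].has_work()) {
            tasks[i].run();
            ran++;
        }
    }
    return ran;
}

/* Hypothetical example tasks: a UART handler with two pending bytes
   and an LED task that currently has nothing to do. */
static int uart_pending = 2;
static int led_runs = 0;
static bool uart_has_work(void) { return uart_pending > 0; }
static void uart_run(void)      { uart_pending--; }
static bool led_has_work(void)  { return false; }
static void led_run(void)       { led_runs++; }
```

The loop's response time grows with the number of tasks and the length of each `run()`, which is the inefficiency the paragraph above describes.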

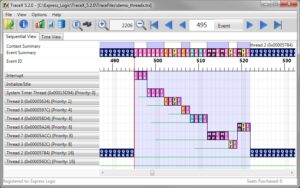

Generally, real-time schedulers are preemptive: they ensure that the highest-priority thread that is ready to run is the one that runs, while the others wait. RTOS schedulers can also perform round-robin scheduling, which is similar to the simple sequential loop (the “Big Loop”) described above, and time-slicing, a more sophisticated form of round-robin in which individual threads are granted a certain percentage of CPU time rather than being allowed to run to completion or voluntary suspension. The RTOS scheduler performs context switches when required, and enables threads to sleep, to relinquish the CPU, or to terminate and leave the pool of threads awaiting the CPU. See Figure 1 for an example of thread preemption in Express Logic’s ThreadX RTOS, as shown in the TraceX analysis tool.
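Time-slicing among threads of equal priority can be sketched as a simple simulation. This is an illustrative model under stated assumptions, not how any particular RTOS implements time slices: `round_robin()` is a hypothetical name, time is measured in abstract ticks, and every thread in the rotation needs at least one tick of work.

```c
#include <stddef.h>

/* Simulate time-sliced round-robin among threads of equal priority.
   work[i]:  ticks thread i still needs (must be > 0 for every thread).
   slice:    maximum ticks per turn (must be > 0).
   finished: receives thread indices in completion order.
   Returns how many times a thread used its whole slice without finishing. */
size_t round_robin(unsigned *work, size_t count, unsigned slice, size_t *finished)
{
    size_t done = 0, expirations = 0, i = 0;
    while (done < count) {
        if (work[i] > 0) {
            unsigned run = work[i] < slice ? work[i] : slice;
            work[i] -= run;              /* thread i runs for its turn */
            if (work[i] == 0)
                finished[done++] = i;    /* finished: leaves the rotation */
            else
                expirations++;           /* slice expired: back of the line */
        }
        i = (i + 1) % count;             /* next thread in the rotation */
    }
    return expirations;
}
```

With `work = {3, 1, 2}` and a 2-tick slice, thread 0 uses its full slice without finishing (one expiration), threads 1 and 2 finish in their first turns, and thread 0 finishes on its second turn, so the completion order is 1, 2, 0.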

Multithreading means that the CPU is shared by more than one thread. In a multithreaded system, when a thread reaches a roadblock, it yields the CPU to threads that are not waiting for anything, rather than repeatedly checking whether it can continue. This makes efficient use of CPU cycles that would otherwise be wasted. Consider a simple system with two threads, thread_a and thread_b. If thread_a is running and initiates an I/O operation that may take hundreds of cycles to complete, then rather than waiting in a polling loop, thread_a can be suspended until the I/O is complete, and thread_b can use the CPU in the meantime. Handing the CPU to thread_b requires a context switch, and resuming thread_a once the I/O is complete requires another. Multithreading thus uses CPU resources more efficiently than Big-Loop and other non-preemptive scheduling approaches.
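The benefit of suspending thread_a depends on how long the I/O takes relative to the context-switch cost. The hypothetical cost model below (an illustration, not a measurement of any RTOS) counts the cycles thread_b can use while thread_a waits: polling gives thread_b nothing, while blocking gives it the wait time minus two context switches, one to thread_b and one back to thread_a. `cycles_for_thread_b()` is an illustrative name.

```c
#include <stdbool.h>

/* Hypothetical cost model: cycles of useful work thread_b gets while
   thread_a waits io_cycles for an I/O operation to complete.
   switch_cycles is the assumed cost of one context switch. */
unsigned long cycles_for_thread_b(unsigned long io_cycles,
                                  unsigned long switch_cycles,
                                  bool thread_a_blocks)
{
    if (!thread_a_blocks)
        return 0;   /* thread_a polls, keeping the CPU busy doing nothing */
    unsigned long overhead = 2 * switch_cycles;   /* to thread_b, then back */
    return io_cycles > overhead ? io_cycles - overhead : 0;
}
```

With an assumed 400-cycle I/O wait and 100-cycle switches, thread_b gains 200 cycles; with 500-cycle switches, the same wait gains nothing, which is one reason the cost and frequency of context switches matter.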

Preemption challenges

Preemption is the process in which a running thread is stopped so that another thread can run. Preemption can be triggered by an interrupt or by an action of the running thread itself. In preemptive scheduling, the RTOS always runs the highest-priority thread that is READY to run: the running thread’s context is saved, and the context of the other thread is loaded in its place so the new thread can run. Preemptive scheduling is common in real-time systems and RTOSes because it provides the fastest response to external events, where a thread must run immediately when an event occurs or before a particular deadline. Responsiveness is maximized, but so is overhead, since every preemption requires a context switch.

Preemption carries potential challenges, which the developer must avoid or address:

- Thread starvation. This occurs when a thread never gets to execute because a higher-priority thread never finishes. Developers should avoid any condition in which a high-priority thread might end up in an endless loop, or where the thread consumes an undesirable amount of CPU time, preventing other threads from accessing the processor.

- Overhead. In situations with frequent context switching, the overhead adds up and can significantly degrade performance.

- Priority inversion. This occurs when a high-priority thread is waiting for a shared resource, but the resource is held by a low-priority thread which cannot finish its use of the resource due to preemption by an intermediate-priority thread.
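The priority-inversion scenario can be reproduced with a tiny simulation. Everything here is hypothetical (`inv_thread`, `inv_mutex`, and the helper functions are illustrative names, not an RTOS API), and it assumes 0 is the highest priority. A low-priority thread takes a mutex, a high-priority thread blocks on it, and then a medium-priority thread becomes ready and runs ahead of both.

```c
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    const char *name;
    unsigned    priority;  /* assumption: 0 is the highest priority */
    bool        ready;     /* false while suspended or blocked */
} inv_thread;

typedef struct {
    int owner;    /* index of the owning thread, or -1 if free */
    int waiter;   /* index of one blocked thread, or -1 (one waiter, for brevity) */
} inv_mutex;

/* Index of the highest-priority ready thread, or -1 if none. */
int highest_ready(const inv_thread *t, size_t n)
{
    int best = -1;
    for (size_t i = 0; i < n; i++)
        if (t[i].ready && (best < 0 || t[i].priority < t[best].priority))
            best = (int)i;
    return best;
}

/* Thread `me` requests the mutex; it blocks if another thread owns it. */
bool inv_mutex_get(inv_mutex *m, inv_thread *t, int me)
{
    if (m->owner < 0) {
        m->owner = me;
        return true;
    }
    t[me].ready = false;   /* blocked until the owner releases the mutex */
    m->waiter = me;
    return false;
}

/* The owner releases the mutex, handing it to the waiter if there is one. */
void inv_mutex_put(inv_mutex *m, inv_thread *t)
{
    m->owner = m->waiter;  /* new owner, or -1 (free) if nobody waits */
    if (m->waiter >= 0) {
        t[m->waiter].ready = true;
        m->waiter = -1;
    }
}
```

While the high-priority thread is blocked, `highest_ready()` picks the medium-priority thread even though it never touches the mutex; the high-priority thread can continue only after the medium thread stops and the low-priority thread runs again and releases the mutex.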

Preemption-Threshold Scheduling (PTS)

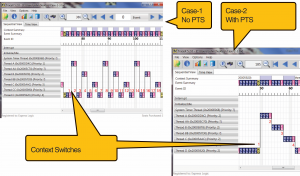

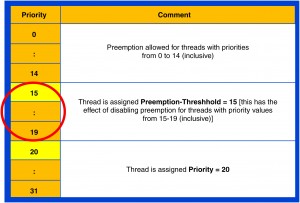

With preemption-threshold scheduling, a thread can be preempted only by a thread whose priority exceeds the running thread’s preemption threshold (see Figure 2). Preemption-threshold scheduling prevents some preemptions, which eliminates some context switches and reduces overhead.

Normally, any thread with a priority higher than the running thread’s can preempt it. With preemption-threshold scheduling, however, a running thread can be preempted only if the preempting thread has a priority higher than the running thread’s preemption threshold. In a fully preemptive system, the preemption threshold is equal to the thread’s priority. Setting the preemption threshold higher than the thread’s priority prevents preemption by threads whose priorities fall between those two values.

In the example in Table 2, a thread of priority 20 would normally be preempted by a thread of priority 19, 18, 17, 16, and so on. But if its preemption threshold is set to 15, only threads with a priority higher than 15 (a lower number) can preempt it. Threads in between, at priorities 19, 18, 17, 16, and 15, cannot preempt it, but threads at priority 14 and higher (lower numbers) can. Preemption threshold is optional and can be specified for any thread, all threads, or no threads. If no threshold is specified, it effectively equals the thread’s priority, so any higher-priority thread can preempt. With a preemption threshold, a thread can block preemption by higher-priority threads up to a chosen limit, above which preemption is permitted.
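The preemption-threshold rule reduces to a single comparison. The sketch below is hypothetical: `sched_attr` and `can_preempt()` are illustrative names, not any RTOS's API (a real RTOS such as ThreadX sets the threshold through its own thread services). It follows the convention of the Table 2 example, where a lower number means a higher priority.

```c
#include <stdbool.h>

/* Hypothetical scheduling attributes of the running thread.
   Lower number = higher priority, as in the Table 2 example. */
typedef struct {
    unsigned priority;
    unsigned preempt_threshold;  /* <= priority; equal means fully preemptive */
} sched_attr;

/* A READY thread may preempt the running thread only if its priority is
   strictly higher (numerically lower) than the running thread's threshold. */
bool can_preempt(const sched_attr *running, unsigned candidate_priority)
{
    return candidate_priority < running->preempt_threshold;
}
```

With priority 20 and threshold 15, `can_preempt()` returns false for candidates at priorities 19 through 15 and true for 14, matching Table 2. Setting `preempt_threshold` equal to `priority` gives ordinary full preemption.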

A fully preemptive scheduler can introduce significant overhead that reduces system efficiency. Preemption-threshold scheduling, on the other hand, can reduce context switches, and enable increased performance.
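To illustrate how a threshold reduces context switches, the following tick-based simulation counts switches for a hypothetical workload: one thread of priority 20 needing 10 ticks, plus three one-tick threads at priorities 17, 18, and 16 that become ready at ticks 2, 4, and 6. The model (`pts_thread`, `count_switches()`, `MAX_THREADS`) is an assumption-laden sketch, not ThreadX's scheduler: one CPU, no interrupt cost, ties broken by table order, and a switch counted whenever the CPU starts running a different thread.

```c
#include <stddef.h>

#define MAX_THREADS 8   /* hypothetical table limit for this simulation */

/* Hypothetical thread description; lower number = higher priority. */
typedef struct {
    unsigned priority;
    unsigned threshold;   /* preemption threshold; equal to priority = fully preemptive */
    unsigned arrival;     /* tick at which the thread becomes READY */
    unsigned work;        /* ticks of CPU time the thread needs */
} pts_thread;

/* Simulate one CPU tick by tick; return the number of context switches. */
size_t count_switches(const pts_thread *th, size_t n)
{
    if (n > MAX_THREADS)
        return 0;   /* unsupported in this sketch */
    unsigned remaining[MAX_THREADS];
    unsigned long left = 0;
    for (size_t i = 0; i < n; i++) {
        remaining[i] = th[i].work;
        left += th[i].work;
    }

    int running = -1, last = -1;
    size_t switches = 0;
    for (unsigned tick = 0; left > 0; tick++) {
        /* Highest-priority READY thread other than the running one. */
        int best = -1;
        for (size_t i = 0; i < n; i++) {
            if ((int)i == running || remaining[i] == 0 || th[i].arrival > tick)
                continue;
            if (best < 0 || th[i].priority < th[best].priority)
                best = (int)i;
        }

        int next = running;
        if (running < 0)
            next = best;   /* CPU is free: dispatch the best READY thread */
        else if (best >= 0 && th[best].priority < th[running].threshold)
            next = best;   /* candidate beats the running thread's threshold */
        if (next < 0)
            continue;      /* nothing ready yet: idle tick */

        if (last >= 0 && next != last)
            switches++;
        last = next;
        if (--remaining[next] == 0)
            running = -1;  /* finished; the CPU is free next tick */
        else
            running = next;
        left--;
    }
    return switches;
}
```

Fully preemptive (threshold 20), each short thread preempts and then returns the CPU, for 6 switches; with a threshold of 15, the priority-20 thread runs to completion and the short threads then run back to back, for 3 switches. The trade-off is latency: the priority-16 thread, ready at tick 6, waits until tick 10 instead of running immediately.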

Express Logic, Inc.

www.expresslogic.com
