An AI agent combined with the Model Context Protocol (MCP) can form a more context-aware AI system that performs a wide range of tasks, from automating processes to providing insights and interacting with users. MCP connects AI agents to data sources, enabling them to access information, collaborate with other systems, and make decisions based on real-time data.

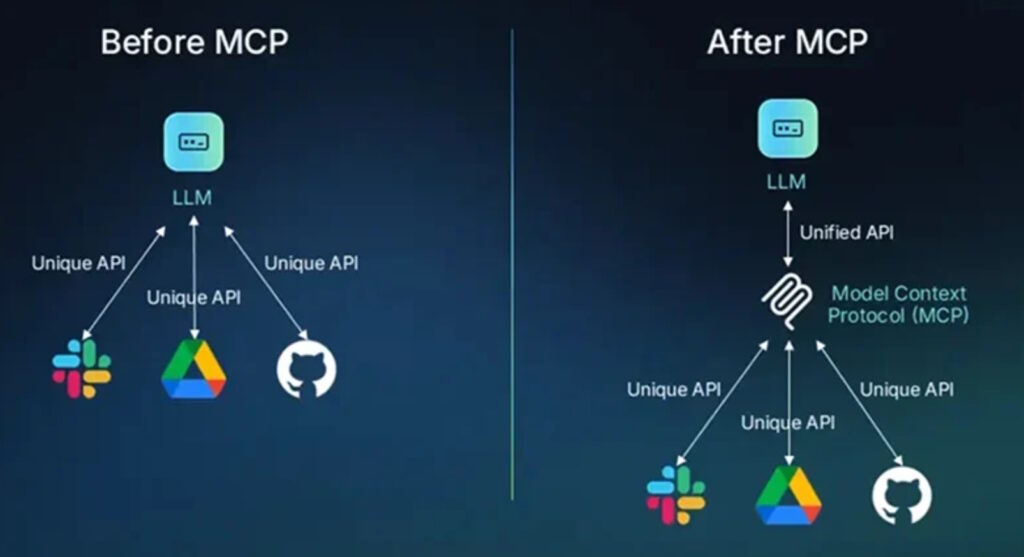

MCP is an open-source protocol that AI models, such as Claude, Llama, and GPT, can use to interact with external data sources and tools. MCP is often compared with conventional application programming interfaces (APIs), but there are significant differences (Figure 1).

- APIs are general-purpose tools that allow different software applications to interact with and exchange data.

- MCP was designed to provide a standard interface for agentic AI systems and large language models (LLMs) to interact independently with external tools, data sources, and multiple APIs.

MCP benefits

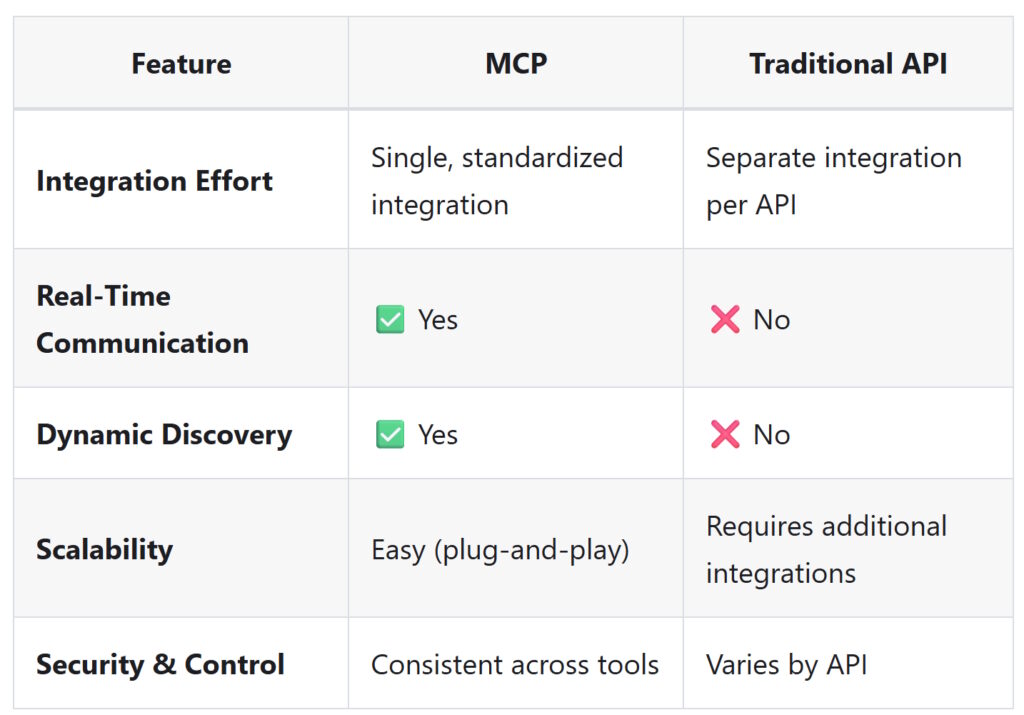

Instead of integrating a separate API for each service, an AI application can implement MCP once and use it to access multiple tools and services. MCP supports dynamic discovery of, and interaction with, available resources without prior knowledge of them. MCP also enables predictable, consistent, real-time two-way communication that can be initiated and controlled dynamically.
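Dynamic discovery can be pictured at the message level. The Python sketch below builds a `tools/list` request, reads a server reply, and then invokes a discovered tool through the generic `tools/call` method. The method names and the `inputSchema` and `arguments` fields follow the published MCP specification; the `send_message` tool, its schema, and the `make_request` helper are hypothetical, and the server reply is hard-coded rather than received over a real connection.

```python
import json


def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict (hypothetical helper)."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# 1. Ask the server what it offers; the client needs no prior knowledge of it.
list_request = make_request(1, "tools/list")

# 2. A hard-coded stand-in for the server's reply. The "send_message" tool
#    and its schema are invented for this example.
list_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "send_message",
                "description": "Post a chat message to a channel",
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        "channel": {"type": "string"},
                        "text": {"type": "string"},
                    },
                    "required": ["channel", "text"],
                },
            }
        ]
    },
}

# 3. Index the discovered tools by name, then call one through the same
#    generic method that every MCP tool uses.
discovered = {tool["name"]: tool for tool in list_response["result"]["tools"]}
chosen = discovered["send_message"]
call_request = make_request(
    2,
    "tools/call",
    {"name": chosen["name"], "arguments": {"channel": "#ops", "text": "Build finished"}},
)

print(json.dumps(list_request))
print(json.dumps(call_request))
```

Adding a second tool to the server changes only the `tools/list` reply; the client code that issues `tools/call` stays the same, which is the contrast with wiring up one API client per service.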

Using MCP, AI agents can draw on external data sources for added context and generate responses that are more relevant to specific situations. MCP also enables AI agents to perform actions such as updating a database, running a script, or sending a message. Finally, MCP is scalable, and because it is a single interface, it provides one place to implement enhanced security and control (Table 1).

MCP architecture

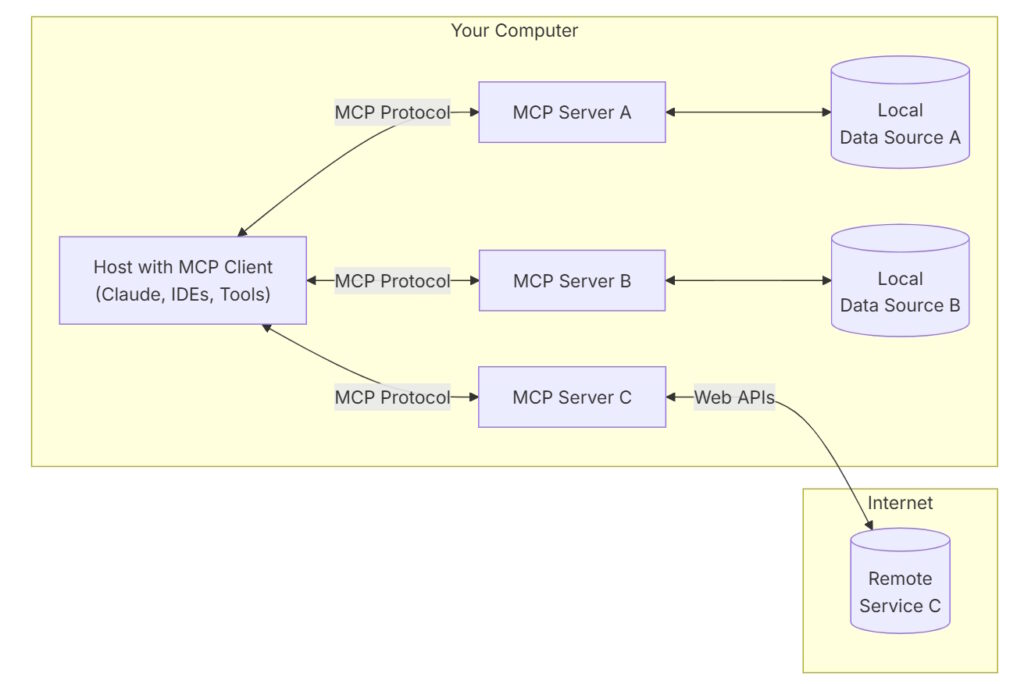

MCP uses a client-server architecture. An AI application acts as the host, and the MCP clients it runs let it connect to multiple MCP servers. MCP provides a universal interface to tools and external resources that hosts and their MCP clients can access, whether the underlying model is Claude, Llama, GPT, or another LLM.
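The fan-out from one host to several servers can be sketched in a few lines. In this Python illustration (not SDK code), each hypothetical `ClientConnection` is bound to exactly one server, and a hypothetical `Host` builds a routing table from the tool names each server reported, so a `tools/call` request reaches the server that owns the tool. The server names and tool names are invented, and messages are recorded in a list instead of being sent over a transport.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ClientConnection:
    """Hypothetical MCP client with a one-to-one link to a single server."""
    server_name: str
    tools: List[str]  # names this server reported in its tools/list reply
    sent: List[dict] = field(default_factory=list)

    def send(self, message: dict) -> None:
        # A real client would write to a transport; here we only record it.
        self.sent.append(message)


class Host:
    """Hypothetical host that owns several client connections."""

    def __init__(self, connections: List[ClientConnection]):
        self.routes: Dict[str, ClientConnection] = {}
        for conn in connections:
            for tool in conn.tools:
                # Refuse ambiguous names rather than guess which server is meant.
                if tool in self.routes:
                    raise ValueError(f"tool {tool!r} is offered by more than one server")
                self.routes[tool] = conn
        self.next_id = 1

    def call_tool(self, name: str, arguments: dict) -> str:
        conn = self.routes[name]  # KeyError if no connected server offers the tool
        conn.send({
            "jsonrpc": "2.0",
            "id": self.next_id,
            "method": "tools/call",
            "params": {"name": name, "arguments": arguments},
        })
        self.next_id += 1
        return conn.server_name


database = ClientConnection("database-server", ["run_query"])
mail = ClientConnection("email-server", ["send_email"])
host = Host([database, mail])
routed_to = host.call_tool("send_email", {"to": "ops@example.com", "body": "Done"})
print(routed_to)  # email-server
```

Rejecting duplicate tool names is one design choice; a host could instead prefix each tool with the name of the server that provides it.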

The host coordinates overall system operation and AI interactions. MCP clients maintain one-to-one stateful connections with the servers for context retention. MCP clients are also responsible for message routing, monitoring, and managing the tools and resources available on connected servers. During initialization, clients negotiate protocol versions to ensure compatibility between the host and server.
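Version negotiation comes down to two small decisions. As described in the MCP lifecycle specification, the server echoes the client's requested version if it supports it and otherwise offers a version of its own, and the client disconnects if it cannot use the server's choice. The Python sketch below is illustrative only: the version lists, client name, and function names are assumptions, and the date-style strings are examples of the format the specification uses for versions.

```python
# Versions this hypothetical client understands, newest first.
CLIENT_VERSIONS = ["2025-06-18", "2025-03-26", "2024-11-05"]


def build_initialize(request_id: int = 1) -> dict:
    """Client's opening request: proposes its newest version and declares capabilities."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": CLIENT_VERSIONS[0],
            "capabilities": {"roots": {"listChanged": True}},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }


def server_choose_version(requested: str, server_versions: list) -> str:
    """Server side: keep the requested version if supported, else offer its newest."""
    return requested if requested in server_versions else server_versions[0]


def client_accept(initialize_result: dict) -> dict:
    """Client side: continue only with a version it supports, then confirm readiness."""
    version = initialize_result["protocolVersion"]
    if version not in CLIENT_VERSIONS:
        raise RuntimeError(f"server offered unsupported version {version}; disconnecting")
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}


# An older server that only speaks one version.
request = build_initialize()
chosen = server_choose_version(request["params"]["protocolVersion"], ["2024-11-05"])
print(chosen)  # 2024-11-05
print(client_accept({"protocolVersion": chosen})["method"])  # notifications/initialized
```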

The servers are lightweight programs that consume few resources and provide secure connectivity to external systems, such as databases. Server elements include tools, resources, and prompts, which can reside locally or remotely.

Tools are executable functions that hosts can use to interact with external resources, and a tool can also wrap an API call to an external service such as email. Tools are model-controlled: the AI model can discover them automatically and invoke them in real time based on context. Prompts, by contrast, are user-controlled, and resources are application-controlled, meaning the host application decides which context to attach.
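On the server side, a tool is a named function plus a JSON Schema describing its arguments. The Python sketch below shows how a server might answer `tools/list` and `tools/call`; the result shape (a `content` list of text items and an `isError` flag) follows the MCP specification, while the `send_email` tool, the in-memory `OUTBOX`, and the handler names are hypothetical. No email is actually sent.

```python
OUTBOX = []  # stands in for a real mail service in this sketch


def send_email(to: str, subject: str) -> str:
    """Hypothetical tool body; a real server would call an email API here."""
    OUTBOX.append({"to": to, "subject": subject})
    return f"queued message to {to}"


# What the server advertises for each tool, plus the local function behind it.
TOOLS = {
    "send_email": {
        "description": "Queue an email to a recipient",
        "inputSchema": {
            "type": "object",
            "properties": {"to": {"type": "string"}, "subject": {"type": "string"}},
            "required": ["to", "subject"],
        },
        "handler": send_email,
    }
}


def handle_tools_list(request: dict) -> dict:
    tools = [
        {"name": name, "description": spec["description"], "inputSchema": spec["inputSchema"]}
        for name, spec in TOOLS.items()
    ]
    return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": tools}}


def handle_tools_call(request: dict) -> dict:
    params = request["params"]
    spec = TOOLS.get(params["name"])
    if spec is None:
        # Unknown tool: a protocol-level error (-32602 is JSON-RPC "Invalid params").
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    try:
        text, is_error = spec["handler"](**params.get("arguments", {})), False
    except Exception as exc:  # report tool failures to the model instead of crashing
        text, is_error = f"tool failed: {exc}", True
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": is_error}}


reply = handle_tools_call({
    "jsonrpc": "2.0", "id": 7, "method": "tools/call",
    "params": {"name": "send_email", "arguments": {"to": "ops@example.com", "subject": "Build done"}},
})
print(reply["result"]["content"][0]["text"])  # queued message to ops@example.com
```

Reporting a failed tool run through `isError` keeps the failure visible to the model so it can retry or explain, while an unknown tool name is treated as a protocol error.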

Resources include any data sources or files that can provide additional context. Prompts are pre-defined templates that guide AI model interactions.
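Resources and prompts are served through their own methods, `resources/read` and `prompts/get` in the specification. The Python sketch below shows the shape of the data a server might return for each; the file URI, its contents, the `summarize` prompt, and the function names are all hypothetical, and the lookups are plain dictionaries rather than a real data source.

```python
# A hypothetical resource, keyed by URI: (MIME type, text content).
RESOURCES = {
    "file:///docs/release-notes.md": ("text/markdown", "# v1.2\n- Fixed login timeout\n"),
}

# A hypothetical prompt template with one required argument.
PROMPTS = {
    "summarize": {
        "description": "Summarize release notes for a given audience",
        "arguments": [{"name": "audience", "required": True}],
        "template": "Summarize the attached release notes for {audience}.",
    }
}


def read_resource(uri: str) -> dict:
    """Result body for resources/read: a list of content items tagged with their URI."""
    mime_type, text = RESOURCES[uri]
    return {"contents": [{"uri": uri, "mimeType": mime_type, "text": text}]}


def get_prompt(name: str, arguments: dict) -> dict:
    """Result body for prompts/get: the template filled in as a user message."""
    prompt = PROMPTS[name]
    missing = [a["name"] for a in prompt["arguments"]
               if a.get("required") and a["name"] not in arguments]
    if missing:
        raise ValueError(f"missing prompt arguments: {missing}")
    text = prompt["template"].format(**arguments)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


notes = read_resource("file:///docs/release-notes.md")
prompt = get_prompt("summarize", {"audience": "support staff"})
print(prompt["messages"][0]["content"]["text"])  # Summarize the attached release notes for support staff.
```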

Under the hood

The protocol itself is composed of several elements. Its core consists of JavaScript Object Notation remote procedure call (JSON-RPC) message types (requests, responses, and notifications) that are simple and easy for developers to use.
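JSON-RPC 2.0 distinguishes its message types by which fields are present: a request carries an `id` and a `method`, a notification carries a `method` but no `id`, and a response carries the request's `id` plus exactly one of `result` or `error`. The Python sketch below classifies raw messages on that basis and builds a standard error reply; the function names are illustrative, while `ping` and `notifications/initialized` are method names from the MCP specification.

```python
import json


def classify(raw: str) -> str:
    """Return 'request', 'notification', or 'response' for one JSON-RPC 2.0 message."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in msg:
        # Requests expect a reply (they carry an id); notifications do not.
        return "request" if "id" in msg else "notification"
    if "id" in msg and ("result" in msg) != ("error" in msg):
        return "response"
    raise ValueError("malformed message")


def error_response(request_id, code: int, message: str) -> dict:
    """Standard JSON-RPC error object; for example, -32601 means 'Method not found'."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


for raw in [
    '{"jsonrpc": "2.0", "id": 1, "method": "ping"}',
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}',
    '{"jsonrpc": "2.0", "id": 1, "result": {}}',
]:
    print(classify(raw))  # request, then notification, then response
print(json.dumps(error_response(2, -32601, "Method not found")))
```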

A second foundational element is lifecycle management, which consists of client-server connection initialization, capability negotiation, session control during the operational phase, and the graceful termination of connections.
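The lifecycle phases map naturally onto a small state machine. The Python sketch below is a hypothetical client-side view, not SDK code: it allows only `ping` while initialization is in progress (the specification advises against other requests before the server answers `initialize`), records the server's declared capabilities so later calls can be checked against them, and models termination simply as closing the session, which for a local STDIO server the specification describes as closing the server's input stream.

```python
class Session:
    """Hypothetical client-side tracker for the MCP connection lifecycle."""

    def __init__(self):
        self.state = "new"
        self.server_capabilities = {}

    def send_initialize(self) -> None:
        if self.state != "new":
            raise RuntimeError("initialize may only be sent once")
        self.state = "initializing"

    def on_initialize_result(self, capabilities: dict) -> dict:
        if self.state != "initializing":
            raise RuntimeError("unexpected initialize result")
        self.server_capabilities = capabilities  # what this server agreed to offer
        self.state = "operating"
        # Tell the server the client is ready for normal operation.
        return {"jsonrpc": "2.0", "method": "notifications/initialized"}

    def supports(self, feature: str) -> bool:
        return feature in self.server_capabilities

    def can_send(self, method: str) -> bool:
        if self.state == "operating":
            return True
        return self.state == "initializing" and method == "ping"

    def close(self) -> None:
        # Graceful termination; a STDIO client would close the server's stdin here.
        self.state = "closed"


session = Session()
session.send_initialize()
early_ok = session.can_send("tools/list")  # False: still initializing
session.on_initialize_result({"tools": {"listChanged": True}})
print(session.state, session.supports("tools"), session.supports("prompts"))  # operating True False
session.close()
```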

Three additional elements are implemented as needed by specific applications:

- Transport mechanisms describe how clients and servers exchange messages. They typically use standard input/output (STDIO) for local servers and server-sent events (SSE) for hosted servers.

- Server features are the resources, prompts, and tools that a server exposes.

- Client features include lists of directories (called roots) that clients expose to servers.
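For the STDIO transport, the MCP specification frames each JSON-RPC message as one line of UTF-8 text, so a message must not contain a literal newline; the server reads from standard input, writes only protocol messages to standard output, and may log to standard error. The Python sketch below shows that framing with an in-memory `io.StringIO` buffer standing in for a real pipe; the helper names are assumptions.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    """Write one JSON-RPC message as a single line."""
    # json.dumps without indent emits no raw newlines; newlines inside string
    # values are escaped as \n, so one line always holds exactly one message.
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")
    stream.flush()


def read_messages(stream):
    """Yield one decoded message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


pipe = io.StringIO()  # stands in for the server's stdin or stdout
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "result": {
    "content": [{"type": "text", "text": "line one\nline two"}], "isError": False}})
pipe.seek(0)
received = list(read_messages(pipe))
print(len(received))  # 2
```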

MCP security – user beware

MCP only defines how AI applications and external systems can interact; it does not require specific security measures. It’s up to developers to identify and implement the needed security.
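Because MCP leaves enforcement to the implementer, checks like the following have to be written by the developer. This Python sketch (Python 3.9 or later) shows one hypothetical policy for a file-reading tool: an allowlist of tool names, a check that the arguments the tool's schema marks as required are present, and confinement of a path argument to one directory. The tool name, root directory, and function name are assumptions, and the path check is purely lexical; a production server would also need to resolve symbolic links before trusting a path.

```python
from pathlib import PurePosixPath

# Hypothetical policy chosen by the developer; MCP itself mandates none of this.
ALLOWED_TOOLS = {"read_file"}
ALLOWED_ROOT = PurePosixPath("/srv/shared")


def check_tool_call(name: str, arguments: dict, schema: dict) -> None:
    """Raise PermissionError or ValueError if a tools/call request should be refused."""
    if name not in ALLOWED_TOOLS:
        raise PermissionError(f"tool {name!r} is not allowlisted")
    missing = [key for key in schema.get("required", []) if key not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    path = PurePosixPath(arguments["path"])
    # Lexical checks only: reject relative paths and '..' segments, then require
    # the path to sit under the allowed root. Symlinks are not resolved here.
    if not path.is_absolute() or ".." in path.parts:
        raise PermissionError("path must be absolute and contain no '..' segments")
    if not path.is_relative_to(ALLOWED_ROOT):
        raise PermissionError(f"{path} is outside {ALLOWED_ROOT}")


READ_FILE_SCHEMA = {"type": "object", "properties": {"path": {"type": "string"}},
                    "required": ["path"]}

check_tool_call("read_file", {"path": "/srv/shared/report.txt"}, READ_FILE_SCHEMA)  # allowed
for bad_name, bad_args in [("delete_file", {"path": "/srv/shared/report.txt"}),
                           ("read_file", {"path": "/srv/shared/../../etc/passwd"}),
                           ("read_file", {"path": "/etc/passwd"}),
                           ("read_file", {})]:
    try:
        check_tool_call(bad_name, bad_args, READ_FILE_SCHEMA)
    except (PermissionError, ValueError) as exc:
        print("refused:", exc)
```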

The latest release of the MCP specification addresses some security concerns by standardizing authorization on OAuth 2.1 and making Proof Key for Code Exchange (PKCE) mandatory for all clients, not just public ones, to prevent authorization code interception attacks.
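PKCE itself is defined in RFC 7636 and is small enough to show in full. With the S256 method, the client generates a random `code_verifier`, sends only its SHA-256 hash (base64url-encoded without padding) as the `code_challenge` in the authorization request, and later proves possession by sending the verifier with the token request, so an attacker who intercepts the authorization code cannot redeem it. The Python sketch below uses only the standard library; the function names are illustrative, and the rest of the OAuth 2.1 flow is omitted.

```python
import base64
import hashlib
import secrets


def make_code_verifier() -> str:
    """Random verifier: 32 bytes -> 43 base64url characters (RFC 7636 allows 43 to 128)."""
    return secrets.token_urlsafe(32)


def s256_challenge(verifier: str) -> str:
    """code_challenge = BASE64URL(SHA256(ASCII(code_verifier))) with '=' padding removed."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def server_verifies(stored_challenge: str, presented_verifier: str) -> bool:
    """Authorization-server check at token time, compared in constant time."""
    return secrets.compare_digest(s256_challenge(presented_verifier), stored_challenge)


# Client: keep the verifier secret; send the challenge with code_challenge_method=S256.
verifier = make_code_verifier()
challenge = s256_challenge(verifier)

# Later, at the token endpoint, only the holder of the original verifier succeeds.
print(server_verifies(challenge, verifier))              # True
print(server_verifies(challenge, make_code_verifier()))  # False
```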

Summary

MCP provides a single interface that AI agents and LLMs can use to connect with multiple external resources, creating more contextually aware applications and enhancing user interactions. It utilizes a scalable client-server architecture that can connect to both local and remote resources. Security is evolving and improving, but developers must still take the lead in ensuring safe and secure operations.

