IMUs are just as important as cameras and radar when it comes to operating autonomous vehicles reliably.

Mike Horton, Aceinna Inc.

An inertial measurement unit (IMU) is a device that directly measures a vehicle’s three linear acceleration components and three rotational rate components (and thus its six degrees of freedom). An IMU is unique among the sensors typically found in an autonomous vehicle (AV) because an IMU needs no connection to or knowledge of the external world. This environment independence makes the IMU a core technology for both safety and sensor fusion.

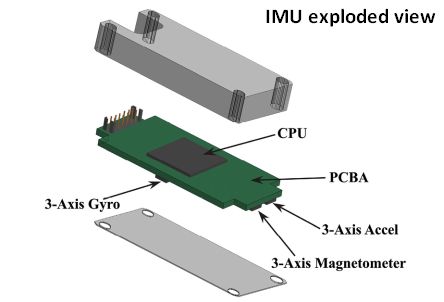

The typical IMU for autonomous vehicle use includes a three-axis accelerometer and a three-axis rate sensor (gyroscope). Units described as 9-DOF (degrees of freedom) add a three-axis magnetometer, but a true IMU is just 6-DOF. A magnetometer is not particularly useful in automotive applications because of the vehicle’s own local magnetic field and the fields of nearby cars and trucks.

A self-driving car requires many different sensing technologies. For example, it typically needs Lidar to create a precise 3D image of the local surroundings, radar in a different part of the spectrum for ranging targets, cameras to read signs and detect colors, high-definition maps for localization, and more. Unlike the IMU, each of these technologies interacts with the external environment to send data back to the software stack for localization, perception, and control.

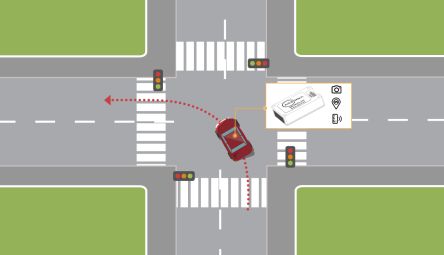

The IMU helps provide “localization” data, i.e., information about where the car is. Software implementing driving functions then combines this information with map data and with “perception stack” data that tell the car about objects and features around it.

The perception stack is part of what’s called the AV stack. The AV stack is essentially the brains behind an autonomous vehicle: a collection of hardware and software components consolidated into a platform that can handle end-to-end vehicle automation. The AV stack includes perception, data fusion, cloud/OTA, localization, behavior (a.k.a. driving policy), control, and safety.

The system engineer must consider every scenario and always have a backup plan. Failure Mode and Effects Analysis (FMEA) formalizes this into design requirements for risk mitigation. FMEA asks questions such as: what happens if the Lidar, radar, and cameras all fail simultaneously? An IMU can dead-reckon for a short period of time, meaning it can briefly determine full position and attitude independently. IMUs used for AV applications typically have uncertainties of much less than 1 mg (10⁻³ g) for their accelerometers and much less than 10°/hr for their rate sensors. In terms of position, this means the IMU can track the vehicle to about 30 cm or better over 10 seconds.
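The 30-cm figure can be sanity-checked with a simplified error model. The sketch below is an illustration under stated assumptions, not a vendor specification: it treats each sensor error as a constant bias, ignores noise, scale-factor error, and initial velocity error, and uses a small-angle model in which a gyro bias tilts the estimated gravity vector. The function names are hypothetical.

```python
import math

G = 9.80665  # standard gravity, m/s^2


def accel_bias_position_error(bias_mg: float, t_s: float) -> float:
    """Position error (m) from a constant accelerometer bias integrated twice."""
    bias = bias_mg * 1e-3 * G          # convert milli-g to m/s^2
    return 0.5 * bias * t_s ** 2


def gyro_bias_position_error(bias_deg_per_hr: float, t_s: float) -> float:
    """Position error (m) from a constant gyro bias (small-angle assumption).

    Tilt error grows as w*t, which leaks gravity into the horizontal axis as
    an acceleration error g*w*t; integrating twice gives g*w*t^3/6.
    """
    w = math.radians(bias_deg_per_hr) / 3600.0   # deg/hr -> rad/s
    return G * w * t_s ** 3 / 6.0


if __name__ == "__main__":
    t = 10.0  # seconds of dead reckoning
    for bias_mg in (1.0, 0.5, 0.1):
        err = accel_bias_position_error(bias_mg, t)
        print(f"accel bias {bias_mg:4.1f} mg  -> {err * 100:5.1f} cm after {t:.0f} s")
    err = gyro_bias_position_error(10.0, t)
    print(f"gyro bias 10.0 deg/hr -> {err * 100:5.1f} cm after {t:.0f} s")
```

Under this model a full 1 mg bias alone would give roughly 49 cm after 10 seconds, so staying within 30 cm is consistent with the accelerometer bias being well below 1 mg, as the text states.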

With data from the IMU alone, the vehicle can slow in a controlled way and come to a stop, providing the best practical outcome in a bad situation. While this may seem like a contrived requirement, it turns out to be fundamental to a mature safety approach.

An accurate IMU can also determine and track attitude precisely. When driving, the direction or heading of the vehicle is as crucial as its position. Driving in a slightly wrong direction even briefly may put the vehicle in the wrong lane. Dynamic control of the vehicle requires sensors with dynamic response. An IMU does a nice job of tracking dynamic attitude and position changes accurately. Its fully environment-independent nature lets an IMU track position even in tricky scenarios such as slipping and skidding where tires lose traction.

A precise attitude measurement is often useful as an input for other algorithms. While Lidar and cameras can be useful in determining attitude, GPS is often almost useless for that purpose. Finally, a stable, independent attitude reference has value in calibration and alignment.

It turns out humans who are not distracted or drunk are typically not bad at driving. A typical driver can hold position in a lane to better than 10 cm. This is actually quite tight. If an autonomous vehicle wanders in its lane, it will appear to be driven by a bad driver. And wandering out of a lane during a turn could easily result in an accident.

The IMU is a key sensor for steering the vehicle dynamically, maintaining better than 30-cm accuracy for short periods when other sensors go offline.

The IMU is also used in algorithms that can cross-compare position/location and then assign a certainty to the overall localization estimate. Without the IMU, it may be impossible to know when the location error from a Lidar has degraded.
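One common way to cross-compare two position sources is a statistical consistency check on their difference. The sketch below is a minimal illustration, not the method of any particular AV stack: it computes the normalized innovation squared between an IMU-propagated position and a Lidar fix, assuming 2-D positions with diagonal covariances, and compares it with the 99% chi-square threshold for two degrees of freedom (9.21). All names and the example variances are assumptions.

```python
CHI2_2DOF_99 = 9.21  # 99% chi-square threshold, 2 degrees of freedom


def nis_2d(predicted, measured, var_pred, var_meas):
    """Normalized innovation squared for 2-D positions, diagonal covariances.

    predicted, measured: (x, y) in meters
    var_pred, var_meas:  (var_x, var_y) in m^2
    """
    dx = measured[0] - predicted[0]
    dy = measured[1] - predicted[1]
    sx = var_pred[0] + var_meas[0]   # innovation variance, x axis
    sy = var_pred[1] + var_meas[1]   # innovation variance, y axis
    return dx * dx / sx + dy * dy / sy


def fix_is_consistent(predicted, measured, var_pred, var_meas,
                      threshold=CHI2_2DOF_99):
    """True if the measured fix agrees with the IMU-propagated position."""
    return nis_2d(predicted, measured, var_pred, var_meas) <= threshold


if __name__ == "__main__":
    imu_pos = (100.0, 50.0)
    imu_var = (0.01, 0.01)        # assumed 10 cm sigma per axis
    lidar_var = (0.0025, 0.0025)  # assumed 5 cm sigma per axis

    good_fix = (100.05, 49.98)
    bad_fix = (100.80, 50.00)     # 80 cm jump, e.g. a mismatched scan
    print(fix_is_consistent(imu_pos, good_fix, imu_var, lidar_var))
    print(fix_is_consistent(imu_pos, bad_fix, imu_var, lidar_var))
```

A fix that fails the check can be down-weighted or rejected, and the rate of failures gives a running indication of how much to trust the overall localization estimate.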

Tesla is famous for its “No Lidar Required” autopilot technology. In systems lacking Lidar, a good IMU is even more critical because camera-based localization of the vehicle will experience more frequent periods of low accuracy, depending on the external lighting and what is in the camera scene.

Camera-based localization uses SIFT (scale-invariant feature transform) tracking in the captured images to compute attitude. (Briefly, SIFT recognizes an object in a new image by individually comparing each of its features to reference images in a database and matching features based on the Euclidean distance, i.e., the ordinary straight-line distance, between their feature vectors.) If the camera is not stereo (often the case), inertial data from the IMU itself is also a core part of the math to compute the position and attitude.
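The matching step described in the parenthetical can be sketched in a few lines. This is a toy illustration using only the Python standard library: real SIFT descriptors are 128-dimensional and are normally extracted and matched with a vision library, whereas the 4-dimensional vectors here are made up. The ratio test (accept a match only if the nearest neighbor is clearly closer than the second nearest) follows the usual SIFT practice; the function name and the 0.8 threshold are assumptions for the example.

```python
import math


def match_descriptors(query, reference, ratio=0.8):
    """Match feature vectors by Euclidean distance with a ratio test.

    Returns a list of (query_index, reference_index) pairs. A query feature
    is matched only if its nearest reference feature is closer than
    `ratio` times the distance to the second-nearest one.
    Requires at least two reference descriptors.
    """
    matches = []
    for qi, q in enumerate(query):
        # Distance from this query feature to every reference feature.
        dists = sorted((math.dist(q, r), ri) for ri, r in enumerate(reference))
        (best_d, best_i), (second_d, _) = dists[0], dists[1]
        if best_d < ratio * second_d:
            matches.append((qi, best_i))
    return matches


if __name__ == "__main__":
    reference = [(0, 0, 0, 0), (1, 1, 1, 1), (5, 5, 5, 5)]
    query = [
        (0.1, 0, 0, 0),          # close to reference 0
        (4.9, 5, 5, 5.1),        # close to reference 2
        (0.5, 0.5, 0.5, 0.5),    # ambiguous: equally far from 0 and 1
    ]
    print(match_descriptors(query, reference))
```

The ambiguous third feature is dropped by the ratio test, which is the kind of filtering that keeps repetitive scene texture from producing false matches.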

The combination of high-accuracy Lidar and high-definition maps is at the core of the most advanced Level 4 self-driving approaches such as those tested by Cruise and Waymo. In these systems, Lidar scans are matched to the HD map in real time using convolutional signal processing techniques. Based on the match, the system estimates the precise location of the vehicle and its attitude. This process is computationally expensive. While we all like to believe the cost of computing is vanishingly small, it simply is not that cheap on a vehicle. The more accurately the algorithm knows its initial position and attitude, the less computation it needs to find the best match. In addition, use of IMU data minimizes the risk of the algorithm getting stuck in a local minimum of HD map data.

In today’s production vehicles, GPS systems use low-cost single-frequency receivers. This makes the GPS accuracy essentially useless for vehicle automation. However, low-cost multi-frequency GPS is on the way from several silicon suppliers. Additionally, network-based correction schemes such as RTK and PPP can provide GPS fixes to centimeter-level accuracy under ideal conditions. But bridges, trees, and buildings can degrade the accuracy of these techniques. GPS reliability improves when high-accuracy IMU data is used at a low level in the positioning scheme.

In that regard, navigation systems similar to those long used in aircraft and ships will likely find use in AVs. GPS/INS (inertial navigation system) techniques use GPS satellite signals to correct or calibrate data from an inertial navigation system. The INS uses a computer, accelerometers, gyroscopes, and occasionally magnetometers to continuously calculate by dead reckoning the position, orientation, and velocity of the vehicle. An INS tends to drift with time: it must calculate angular position by integrating the angular rate from the gyros, and the error this integration accumulates is what the GPS data corrects.
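A Kalman filter is one common way to implement this kind of GPS/INS correction. The sketch below is a deliberately reduced illustration rather than a production design: it is one-dimensional, tracks only position and velocity (no attitude), and uses noise-free GPS fixes so the result is deterministic. The accelerometer bias, update rates, and the noise parameters `q` and `r` are all assumed values chosen for the example.

```python
def simulate(duration_s=60.0, imu_hz=100, gps_every=100,
             v_true=10.0, accel_bias=0.05, q=0.01, r=1.0):
    """Compare pure dead reckoning with GPS-corrected dead reckoning (1-D).

    The vehicle moves at constant speed v_true, so true acceleration is 0
    and the accelerometer reports only its bias (m/s^2).
    q: assumed acceleration noise density, r: assumed GPS variance (m^2).
    Returns (dead_reckoning_error_m, fused_error_m) at the end of the run.
    """
    dt = 1.0 / imu_hz
    steps = int(round(duration_s * imu_hz))
    accel_meas = 0.0 + accel_bias

    p_dr, v_dr = 0.0, v_true           # INS-only state
    p, v = 0.0, v_true                 # fused state
    p00, p01, p11 = 1.0, 0.0, 1.0      # symmetric 2x2 covariance

    for k in range(1, steps + 1):
        # Dead reckoning: integrate measured acceleration (both tracks).
        p_dr += v_dr * dt + 0.5 * accel_meas * dt * dt
        v_dr += accel_meas * dt
        p += v * dt + 0.5 * accel_meas * dt * dt
        v += accel_meas * dt

        # Covariance prediction: P = F P F^T + Q with F = [[1, dt], [0, 1]].
        p00, p01, p11 = (
            p00 + 2 * dt * p01 + dt * dt * p11 + q * dt ** 3 / 3,
            p01 + dt * p11 + q * dt * dt / 2,
            p11 + q * dt,
        )

        # GPS position fix (here once per second) corrects the drift.
        if k % gps_every == 0:
            z = v_true * k * dt        # noise-free fix at the true position
            s = p00 + r                # innovation variance
            k0, k1 = p00 / s, p01 / s  # Kalman gain for [position, velocity]
            y = z - p                  # innovation
            p += k0 * y
            v += k1 * y
            p00, p01, p11 = (1 - k0) * p00, (1 - k0) * p01, p11 - k1 * p01

    p_true = v_true * steps * dt
    return abs(p_dr - p_true), abs(p - p_true)


if __name__ == "__main__":
    dr_err, fused_err = simulate()
    print(f"INS only : {dr_err:.2f} m error after 60 s")
    print(f"GPS/INS  : {fused_err:.2f} m error after 60 s")
```

With these assumed values the uncorrected track drifts by about 90 m in a minute, while the corrected track stays bounded. A fuller design would also estimate the sensor biases and the attitude states that the text describes.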

It turns out that production automobiles already have anywhere from one-third of an IMU to a full IMU on board. Vehicle stability systems rely heavily on a Z-axis gyro and lateral X-Y accelerometers. Roll-over detection relies on a gyro mounted with its sensitive axis in the direction of travel. These sensors have been part of vehicle safety systems for over a decade. The only problem is that the sensor accuracy is typically too low to be of use for AV purposes.

So there is an argument for upgrading the vehicle to a high-accuracy IMU that can help it drive autonomously. The main barrier to this idea has been cost. At Aceinna, we are using proprietary manufacturing techniques to get the cost down. This has let us go into high-volume production of IMUs for self-driving tractors. Thus it is natural to think AVs could be the next area to benefit from these versatile sensors.
