LiDAR systems and time-of-flight (ToF) techniques are critical for giving self-driving cars a detailed picture of their surroundings, and they are used in many research applications as well.

Many of the major automobile vendors, along with well-known non-auto companies such as Google, are devoting major resources to developing autonomous vehicles (often called “self-driving cars”). These vehicles obviously need to see where they are going. Doing so requires more than just a photo-like, flat “snapshot” of the surroundings, and even two cameras used in a “stereo” mode would fall far short of the image detail, resolution, and precision needed. Instead, these vehicles need a detailed, quantifiable three-dimensional (3D) picture of their surroundings.

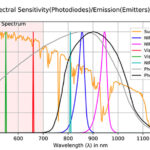

The solution is not to use conventional radar, optical, or ultrasound detection and ranging with a basic transmit/receive echo arrangement (recall that radar is an acronym for radio detection and ranging). Instead of RF or acoustic wavelengths, with their limited resolution, LiDAR (sometimes written as lidar, or even LIDAR) uses laser- or LED-generated pulses of light. By measuring very precisely how long it takes for reflections to return, a technique called “time of flight” (ToF) ranging, the system can determine what’s in front of and around the vehicle.
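The arithmetic behind ToF ranging is simple: because the light pulse travels to the target and back, the one-way distance is d = c × t / 2, where c is the speed of light and t is the measured round-trip time. The same relationship shows why the timing must be so precise: every nanosecond of timing uncertainty corresponds to roughly 15 cm of range uncertainty. The Python sketch below is illustrative only; the function names are hypothetical, it assumes the round-trip time has already been measured, and it approximates the speed of light in air by its vacuum value.

```python
# Speed of light in vacuum, in m/s (exact by SI definition).
# Assumption: the vacuum value is used for air; the real value in air
# is lower by only about 0.03%.
C = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """One-way distance to a target from a pulse's measured round-trip time.

    The pulse travels out and back, so the distance is half the total path.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return C * round_trip_s / 2.0


def range_resolution_m(timing_resolution_s: float) -> float:
    """Smallest range step that a given timing resolution can distinguish."""
    return C * timing_resolution_s / 2.0


def round_trip_s(distance_m: float) -> float:
    """Round-trip time for a target at the given distance (inverse of tof_distance_m)."""
    return 2.0 * distance_m / C


if __name__ == "__main__":
    # An echo arriving 1 microsecond after the pulse left: target is about 150 m away.
    print(f"{tof_distance_m(1e-6):.2f} m")
    # 1 ns of timing uncertainty maps to about 15 cm of range uncertainty.
    print(f"{range_resolution_m(1e-9) * 100:.1f} cm")
    # A target 200 m away returns its echo after roughly 1.33 microseconds.
    print(f"{round_trip_s(200.0) * 1e9:.0f} ns")
```

Running the script prints `149.90 m`, `15.0 cm`, and `1334 ns`, which illustrates why automotive LiDAR timing circuits must resolve intervals well below a nanosecond to achieve centimeter-level precision.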

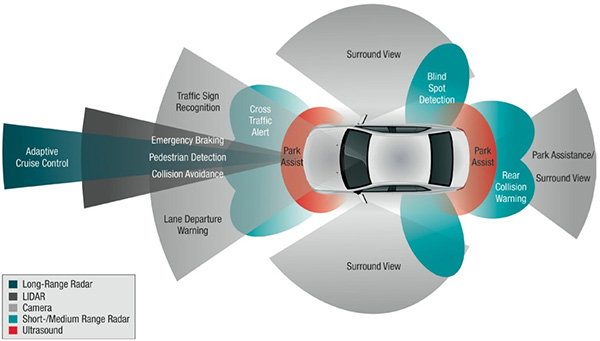

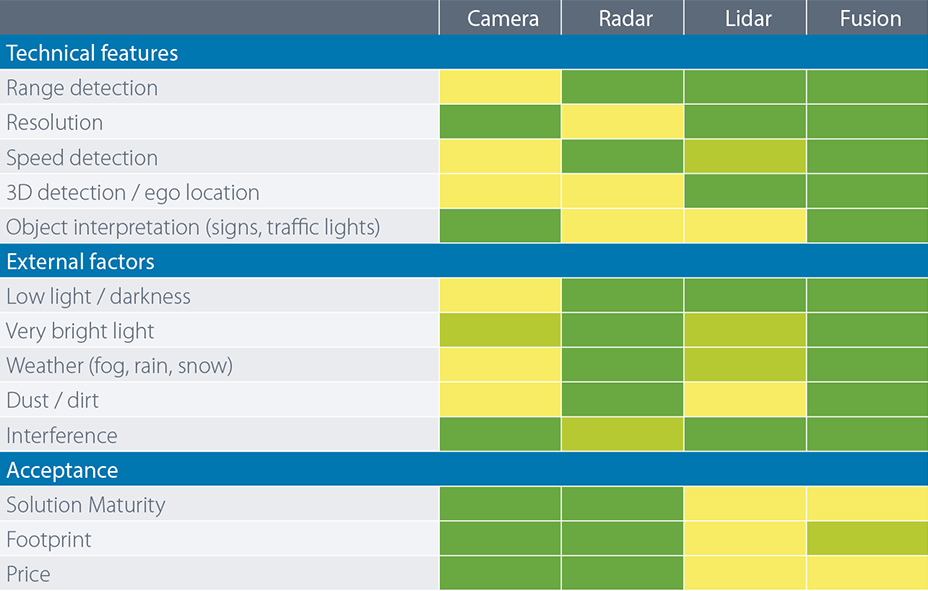

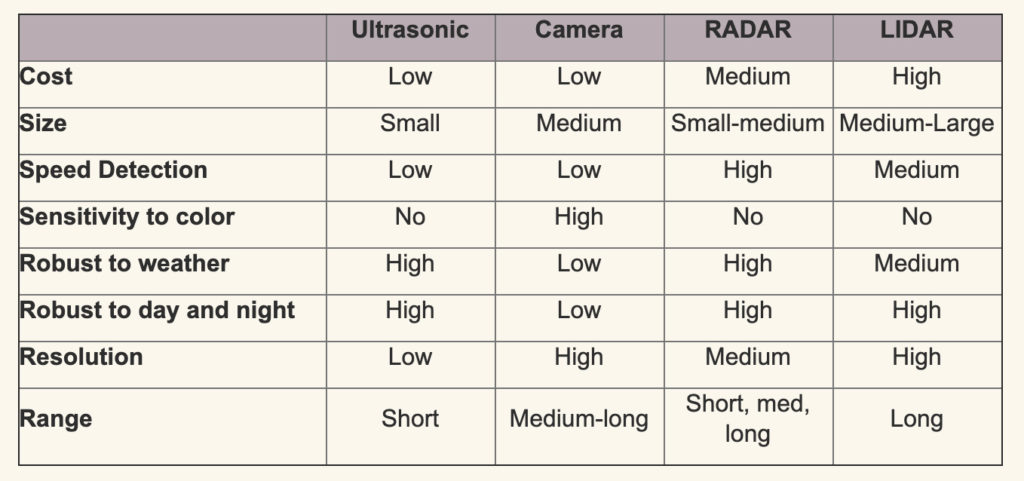

Using LiDAR in cars is not the complete solution to seeing what’s around the vehicle. Autonomous and even semi-autonomous vehicles need to combine multiple sensing technologies to do what a human driver can do (Figure 1), and the various technologies overlap and complement each other (Figure 2 and Figure 3). The technical reality is that a vehicle needs LiDAR to reach Level 3 of the six-level autonomous-driving scale (previously a five-level scale) defined by the SAE J3016 standard, “Levels of Driving Automation”.

Note that LiDAR is not just for autonomous vehicles, with their relatively short-distance imaging needs and modest speeds. It was originally developed several decades ago for applications such as undersea mapping and aircraft/satellite-based geophysical mapping systems. In these cases, it is used to create high-resolution images of the Earth’s surface and structures, to measure ocean levels and waves, and even for guidance in spacecraft docking. It is also used in robotics applications, safety zone monitoring in industrial machinery, traffic monitoring, and security applications.

This article focuses on the basics of a LiDAR system, with an emphasis on automotive designs. It will also look at photon emitters and sensors for LiDAR. It will not discuss the image-processing algorithms that are so critical to making sense of LiDAR data and to creating an electronic analog of the human process of seeing, interpreting what is seen, and making decisions.

In addition, LiDAR’s adoption in mass-market designs such as cars is producing the often-seen back-and-forth benefit for older, existing applications. On one side, automotive LiDAR is benefiting from the huge base of non-auto experience and understanding developed over the decades. At the same time, the mass market is driving improvements in basic components, ranging from optical/electronic devices to image processors and their algorithms, and many of these advances are working their way back into scientific and instrumentation applications.

Part 2 of this article will look at the basic operation of a LiDAR system.
