This three-part FAQ series began by considering “Memory Basics – Volatile, Non-volatile and Persistent.” Part two looked at “Memory Technologies and Packaging Options.” Recently developed persistent memory technologies, three-dimensional packaging for memories, advanced multicore processors, the demand to process ever-larger datasets and databases, and artificial intelligence are driving the emergence of a new category of data center architectures referred to as memory-centric computing. Memory-centric computing is a broad concept; this FAQ considers several examples of emerging memory-centric architectures developed by various vendors.

In-memory computing

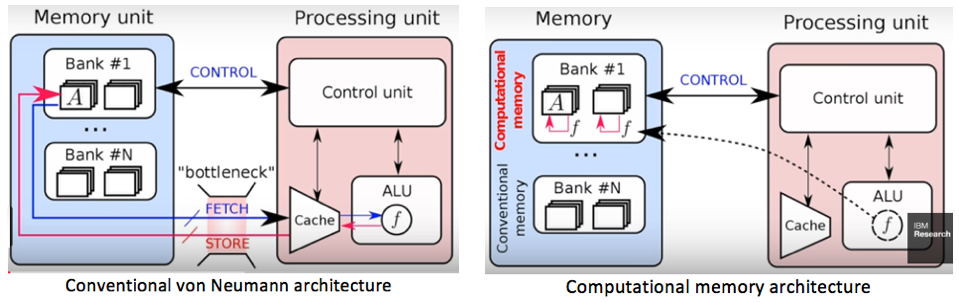

In-memory computing has been used in large-scale database applications for several years. The earlier forms of in-memory computing were based on conventional von Neumann computer architectures. While they improved on earlier systems, they did not realize the full benefit of in-memory computing. More recently, new “computational memory” architectures and so-called “big memory computing” software have been introduced.

A team from IBM Research developed a computational memory architecture (pictured above) that is expected to process data 200 times faster, with greater energy efficiency. IBM’s computational memory architecture eliminates most of the data transfers between memory and CPU. It is aimed at artificial intelligence applications and enables ultra-dense, massively parallel operation.
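To make "computing in memory" concrete, here is a generic illustration of one widely described form of it, an analog resistive crossbar; it is not necessarily the mechanism in IBM's design. Each memory cell stores a conductance, voltages applied to the rows produce per-cell currents (Ohm's law), and the currents on each column add up (Kirchhoff's current law), so the array computes a matrix-vector product where the data is stored instead of moving it to a CPU. The function name and values below are hypothetical, and the model is ideal: real devices add noise, nonlinearity, and analog-to-digital conversion that are ignored here.

```python
from typing import List


def crossbar_column_currents(G: List[List[float]], V: List[float]) -> List[float]:
    """Hypothetical helper: current (amperes) collected on each column of an
    ideal crossbar whose cell (i, j) has conductance G[i][j] (siemens) and
    whose row i is driven at V[i] volts. Equivalent to computing G^T V."""
    if len(G) != len(V):
        raise ValueError("need one input voltage per row")
    n_cols = len(G[0])
    currents = [0.0] * n_cols
    for i, row in enumerate(G):
        if len(row) != n_cols:
            raise ValueError("all rows must have the same number of columns")
        for j, g in enumerate(row):
            # Ohm's law in each cell; the column wire sums the currents.
            currents[j] += g * V[i]
    return currents


# Illustrative values only: conductances in the microsiemens range.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.2, 0.1]
currents = crossbar_column_currents(G, V)
print(currents)
```

The point of the sketch is the data flow: the matrix never leaves the array, and only the input voltages and output currents cross the memory boundary.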

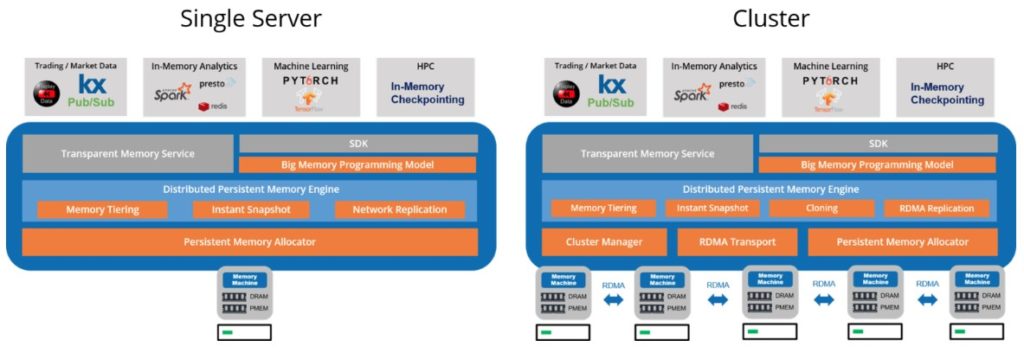

Earlier this year, MemVerge announced its Memory Machine™ software to support big memory computing. Big memory computing is the combination of DRAM, persistent memory, and MemVerge’s Memory Machine software technologies, where the memory is abundant, persistent, and highly available.

Memory Machine is Linux-based subscription software deployed on a single server or in a cluster. It virtualizes DRAM and persistent memory; once they are virtualized, Memory Machine can set up DRAM as a “fast” tier for persistent memory and provide enterprise-class data services, including snapshots, replication, cloning, and recovery.
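The tiering idea described above can be sketched in a few lines of Python. This is a minimal, hypothetical model, not MemVerge's software or API: the class name `TieredMemory`, the write-through policy, and least-recently-used (LRU) eviction are assumptions chosen for illustration. Dictionaries stand in for the DRAM and persistent-memory tiers, and `snapshot()` takes a full copy, the simplest possible form of a snapshot service rather than how any particular product implements it.

```python
import copy
from collections import OrderedDict


class TieredMemory:
    """Hypothetical two-tier store: a small DRAM-like LRU cache in front of a
    larger persistent-memory-like tier that holds every value."""

    def __init__(self, dram_capacity: int):
        if dram_capacity < 1:
            raise ValueError("dram_capacity must be at least 1")
        self.dram_capacity = dram_capacity
        self.dram = OrderedDict()  # fast tier, least to most recently used
        self.pmem = {}             # capacity tier with the authoritative copy
        self.dram_hits = 0
        self.dram_misses = 0

    def write(self, key, value):
        # Write-through: the persistent tier always holds the latest value.
        self.pmem[key] = value
        self._cache(key, value)

    def read(self, key):
        if key in self.dram:
            self.dram_hits += 1
            self.dram.move_to_end(key)  # mark as most recently used
            return self.dram[key]
        self.dram_misses += 1
        value = self.pmem[key]          # KeyError if the key was never written
        self._cache(key, value)         # promote into the fast tier
        return value

    def _cache(self, key, value):
        self.dram[key] = value
        self.dram.move_to_end(key)
        if len(self.dram) > self.dram_capacity:
            self.dram.popitem(last=False)  # evict the least recently used key

    def snapshot(self):
        # Point-in-time copy of the persistent tier (full copy for simplicity).
        return copy.deepcopy(self.pmem)


mem = TieredMemory(dram_capacity=2)
for key, value in [("a", 1), ("b", 2), ("c", 3)]:
    mem.write(key, value)  # "a" leaves the DRAM tier but stays in pmem
saved = mem.snapshot()
mem.write("a", 10)
print(mem.read("a"), saved["a"])  # prints: 10 1
```

Write-through keeps the persistent tier authoritative, so evicting from DRAM never loses data; a write-back design would be faster for writes but would need its own flush and recovery logic.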

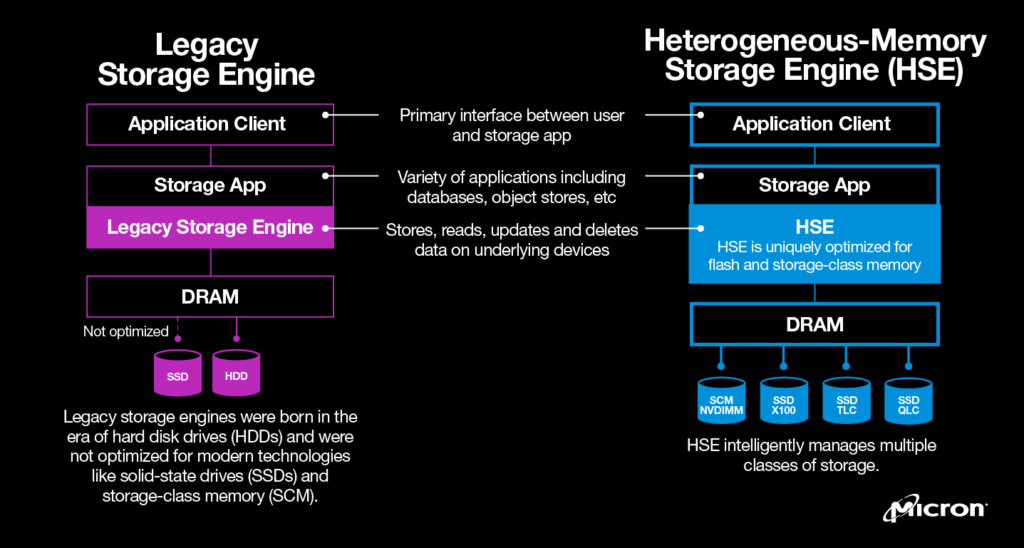

Heterogeneous-memory storage engine

Micron has developed an open-source heterogeneous-memory storage engine (HSE) designed to maximize the capabilities of new storage technologies by intelligently and seamlessly managing data among multiple storage classes. HSE is designed to overcome the limitations of HDD-based architectures and, compared with alternative solutions, to improve throughput by up to six times, latency by up to 11 times, and SSD endurance by seven times. Most storage engines, and therefore most storage applications, were written for hard drives and never optimized for SSDs, flash-based technologies, or storage class memory. HSE offers a way to maximize the performance of those legacy applications.

HSE is designed with massive-scale databases in mind, with key counts in the billions, terabytes of data, and thousands of concurrent operations. HSE transparently exploits multiple classes of media, from NAND flash to Micron’s 3D XPoint persistent memory technology.
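HSE's internal design is not described here, so the following is only a generic illustration of managing data across two storage classes, using a write-buffer-and-flush scheme similar in spirit to log-structured storage engines. The class `TwoClassKVStore`, the "fast" and "capacity" tier names, and the flush policy are hypothetical and are not HSE's API. New writes land in a small fast tier (think persistent memory); when it overflows, the oldest entries move to the larger capacity tier (think NAND flash) together, as one batch. Turning many small writes into fewer large ones can reduce write amplification on flash, which is one general way a storage engine can help SSD endurance.

```python
class TwoClassKVStore:
    """Hypothetical key-value store spanning two media classes."""

    def __init__(self, fast_capacity: int, batch_size: int):
        if not 1 <= batch_size <= fast_capacity:
            raise ValueError("need 1 <= batch_size <= fast_capacity")
        self.fast_capacity = fast_capacity
        self.batch_size = batch_size
        self.fast = {}       # dicts keep insertion order: oldest entry first
        self.capacity = {}   # larger, slower tier
        self.batches_flushed = 0

    def put(self, key, value):
        self.fast.pop(key, None)  # re-insert so an updated key counts as newest
        self.fast[key] = value
        if len(self.fast) > self.fast_capacity:
            self._flush_batch()

    def get(self, key, default=None):
        # The fast tier holds the newest version of a key, so check it first.
        if key in self.fast:
            return self.fast[key]
        return self.capacity.get(key, default)

    def _flush_batch(self):
        oldest = list(self.fast)[: self.batch_size]
        batch = {k: self.fast.pop(k) for k in oldest}
        self.capacity.update(batch)  # one large write instead of many small ones
        self.batches_flushed += 1


store = TwoClassKVStore(fast_capacity=3, batch_size=2)
for i in range(4):
    store.put(f"k{i}", i)  # the fourth put overflows the fast tier
print(sorted(store.capacity), store.batches_flushed)  # prints: ['k0', 'k1'] 1
```

Reads stay correct regardless of where a key lives, which mirrors the "transparent" placement the paragraph describes: the caller never chooses a media class.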

In-memory database

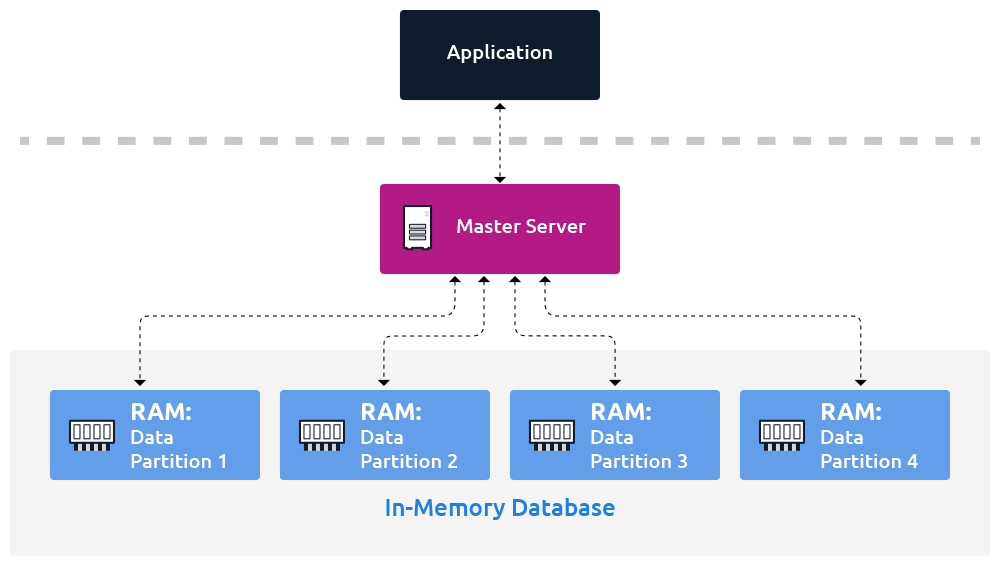

An in-memory database (IMDB) is a computer system that stores and retrieves data records that reside in a computer’s main memory, e.g., random-access memory (RAM). With data in RAM, IMDBs have a speed advantage over traditional disk-based databases, which incur access delays because storage media such as hard disk drives and solid-state drives (SSDs) have significantly slower access times than RAM. This makes IMDBs useful when fast reads and writes of data are crucial.

The term IMDB is also used loosely to refer to any general-purpose technology that stores data in memory. For example, an in-memory data grid, a distributed system that stores and processes data in memory for building high-speed applications, has provisions for IMDB capabilities.
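A data grid spreads its in-memory data across several machines, and the basic mechanism is assigning each key to an owner node. The sketch below shows simple hash partitioning; the class `PartitionedGrid` and the node names are hypothetical, each "node" is just a local dictionary, and networking, replication, and failover are omitted. It uses `zlib.crc32` because Python's built-in `hash()` for strings is randomized per process, which would make key ownership differ between runs.

```python
import zlib


class PartitionedGrid:
    """Hypothetical in-memory data grid: every key is owned by exactly one
    node, chosen by hashing the key, and each node keeps its share in RAM."""

    def __init__(self, node_names):
        if not node_names:
            raise ValueError("need at least one node")
        self.node_names = list(node_names)
        self.nodes = {name: {} for name in self.node_names}

    def owner(self, key: str) -> str:
        # crc32 gives the same result in every process, unlike str hash().
        index = zlib.crc32(key.encode("utf-8")) % len(self.node_names)
        return self.node_names[index]

    def put(self, key: str, value) -> None:
        self.nodes[self.owner(key)][key] = value

    def get(self, key: str, default=None):
        return self.nodes[self.owner(key)].get(key, default)


grid = PartitionedGrid(["node-a", "node-b", "node-c"])
for i in range(9):
    grid.put(f"user:{i}", {"id": i})
print({name: len(data) for name, data in grid.nodes.items()})
```

Taking the hash modulo the node count is the simplest possible scheme, but it reassigns most keys whenever a node joins or leaves. Production grids typically hash keys into a fixed number of partitions and then map partitions to nodes (with backup copies), so that membership changes move only part of the data.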

In-memory caching systems, which are used to speed up access to slow (especially disk-based) databases, are another example of IMDB technology, essentially emulating the performance of IMDBs. While these caches are not true IMDBs, since they do not store data long term, they work in conjunction with disk-based databases as a (mostly) functional equivalent to IMDBs. The caches temporarily store frequently accessed data that reside in the underlying database to deliver that data faster to applications.
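A common way to put such a cache in front of a slow database is the cache-aside pattern: the application checks memory first, and only on a miss (or an expired entry) reads the database and keeps a copy for a fixed time-to-live (TTL). The sketch below is a minimal illustration; `SlowDatabase`, `CacheAside`, the 50 ms delay, and the 30-second TTL are hypothetical stand-ins, not any product's API. The clock is injectable so expiry can be tested without waiting.

```python
import time


class SlowDatabase:
    """Hypothetical stand-in for a disk-based database."""

    def __init__(self, rows, delay_s=0.05):
        self.rows = dict(rows)
        self.delay_s = delay_s  # arbitrary simulated access delay
        self.queries = 0

    def fetch(self, key):
        self.queries += 1
        time.sleep(self.delay_s)  # simulate slow storage access
        return self.rows.get(key)


class CacheAside:
    """Check RAM first; on a miss, read the database and keep the value for
    ttl_s seconds. The database remains the system of record."""

    def __init__(self, db, ttl_s=30.0, clock=time.monotonic):
        self.db = db
        self.ttl_s = ttl_s
        self.clock = clock
        self.entries = {}  # key -> (value, expiry time)

    def get(self, key):
        entry = self.entries.get(key)
        if entry is not None and entry[1] > self.clock():
            return entry[0]             # fresh copy served from memory
        value = self.db.fetch(key)      # miss or expired: go to the database
        self.entries[key] = (value, self.clock() + self.ttl_s)
        return value

    def invalidate(self, key):
        # Call after writing the key to the database so readers see new data.
        self.entries.pop(key, None)


db = SlowDatabase({"user:1": "Ada"})
cache = CacheAside(db, ttl_s=30.0)
cache.get("user:1")  # miss: reads the database
cache.get("user:1")  # hit: served from memory
print(db.queries)    # prints: 1
```

Because the cache only ever holds copies, losing it costs performance but not data, which is the sense in which such caches are not true IMDBs.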

Near-memory computing

Near-memory computing was one of the earliest architectures aimed at reducing latency and improving throughput in computing systems, and it is still widely used today. Near-memory computing is based on heterogeneous system-in-package (SiP) products: highly integrated semiconductor devices that combine FPGAs or processors with various advanced components, all within a single package. FPGA-based SiP products address next-generation platforms, which increasingly require higher bandwidth, greater flexibility, and more functionality, all while lowering power and footprint requirements.

An FPGA-based SiP approach provides many system-level advantages compared with conventional integration schemes. At the core of Intel’s SiP products is a monolithic FPGA, which lets users customize and differentiate their end systems to meet their requirements. Additional system-level benefits include:

- Higher Bandwidth: SiP integration using EMIB (Intel’s Embedded Multi-die Interconnect Bridge) enables the highest interconnect density between the FPGA and the companion die, resulting in high-bandwidth connectivity between the SiP components.

- Lower Power: Companion die (such as memory) are placed as close as possible to the FPGA. The interconnect traces between the FPGA and the companion die are therefore very short and need less power to drive, resulting in lower overall power and optimum performance per watt.

- Smaller Footprint: The ability to heterogeneously integrate components in a single package results in smaller form factors and fewer circuit board layers.

- Increased Functionality: SiP helps reduce routing complexity at the PCB level because the components are already integrated within the package.

- Mixed Process Nodes: SiP enhances the ability to incorporate different die geometries and silicon technologies.

- Faster Time to Market: SiP enables faster time to market by integrating already proven technology and reusing common devices or tiles across product variants.
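The “Lower Power” point follows from the standard first-order model of dynamic switching power in a digital interconnect, shown here as a general illustration rather than as Intel’s own analysis. Here $\alpha$ is the fraction of clock cycles in which the line toggles, $C$ the switched capacitance, $V$ the voltage swing, $f$ the clock frequency, $C_{\text{io}}$ the fixed driver and receiver capacitance, $c_{\ell}$ the wire capacitance per unit length, and $\ell$ the trace length:

$$
P_{\text{dyn}} \approx \alpha \, C \, V^{2} f, \qquad C \approx C_{\text{io}} + c_{\ell} \, \ell
$$

With $\alpha$, $V$, and $f$ held fixed, shortening the trace shrinks only the wire term $c_{\ell}\,\ell$. If that term dominates, a trace ten times shorter needs roughly one tenth of the drive power (an illustrative ratio, not a measured figure). Short in-package links can also use smaller voltage swings, and because $V$ enters squared, that compounds the saving.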

That concludes this three-part FAQ series on memory technologies and applications. You may also enjoy reading part one, “Memory Basics – Volatile, Non-volatile and Persistent,” and part two, “Memory Technologies and Packaging Options.”

References

Heterogeneous-Memory Storage Engine, Micron

In-Memory Database, Hazelcast

In-Memory Processing, Wikipedia

In-Memory Computing, Kurzweil

Near-Memory Computing, Intel
