By Dustin Seetoo, Director of Product Marketing, Premio Inc.

Deep learning for autonomous vehicle systems requires a blended software and hardware strategy

In-step deep learning training and inference

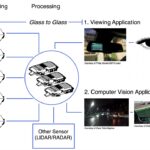

Deep learning training and deep learning inference are very different processes, each with a specific role in developing more advanced autonomous driving systems. Training uses data sets to teach a deep neural network to carry out an automated task, such as image or voice recognition. Inference feeds the same trained network new data and has it predict what that data means based on its training. These data-intensive compute operations need exceptionally rugged, high-performance solutions optimized for both edge and cloud processing and capable of low-latency, real-time inference analysis or detection. Systems must be ‘automotive capable,’ for example, incorporating an extensive range of high-speed solid-state data storage and hardened for performance in environments defined by constant movement, shock, and vibration.
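To make the split between training and inference concrete, here is a minimal Python sketch. It uses a single perceptron rather than a deep network, and the features (`obstacle_close`, `closing_fast`), the labels, and the function names are all hypothetical, chosen only to illustrate the two phases.

```python
# Toy illustration only: one perceptron, not a deep neural network.
# Feature names and data are hypothetical.

def train(samples, labels, epochs=20, learning_rate=1.0):
    """Training phase: adjust weights using labeled examples."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), label in zip(samples, labels):
            activation = weights[0] * x1 + weights[1] * x2 + bias
            predicted = 1 if activation > 0 else 0
            error = label - predicted
            # Perceptron update rule: nudge weights toward the correct answer.
            weights[0] += learning_rate * error * x1
            weights[1] += learning_rate * error * x2
            bias += learning_rate * error
    return weights, bias


def infer(model, point):
    """Inference phase: apply the frozen weights to data not seen in training."""
    weights, bias = model
    x1, x2 = point
    return 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0


# (obstacle_close, closing_fast) -> 1 means "brake", 0 means "continue".
training_samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
training_labels = [0, 0, 0, 1]

model = train(training_samples, training_labels)

# New sensor readings the model never saw during training.
print(infer(model, (0.9, 0.9)))
print(infer(model, (0.2, 0.1)))
```

In a deployed system the training step would typically run offline on large data sets, while only the equivalent of `infer` would run on the in-vehicle computer.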

Purpose-built edge computing devices execute inference analysis in real time for instantaneous decision making. Think of an autonomous vehicle moving at 60 mph: it covers 88 feet every second, so it travels more than 100 feet without guidance in little more than a second. These industrial-grade AI inference computers accept various power input types and are designed to withstand challenging in-vehicle deployments. Systems are ruggedized for unique environmental challenges, such as exposure to extreme temperatures, impact, and contaminants like dust and dirt. Paired with high performance, these characteristics eliminate many issues resulting from cloud processing of deep learning inference algorithms. Integrated GPUs and M.2 accelerators parallelize the required linear algebra computations and are better suited to AI inference processes than CPUs. Inference analysis is faster, and the CPU is freed to run the operating system and other applications.
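The 60 mph example can be worked through in a few lines of Python. This is a rough sketch: the two latency figures are assumed values for illustration, not measurements of any particular system.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def feet_traveled(speed_mph: float, latency_s: float) -> float:
    """Distance in feet covered at a constant speed during a given delay."""
    feet_per_second = speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR
    return feet_per_second * latency_s


# Assumed latencies, for illustration only (not measured values).
ASSUMED_LATENCIES_S = {
    "on-board inference": 0.02,
    "cloud round trip": 0.20,
}

for label, latency in ASSUMED_LATENCIES_S.items():
    print(f"{label}: {feet_traveled(60, latency):.1f} ft traveled at 60 mph")
```

Because distance scales linearly with delay, every tenfold reduction in latency cuts the distance traveled before a decision by the same factor.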

Rugged, connected performance enhances autonomous systems

Local inference processing also addresses the latency and network bandwidth issues of raw data transmission, specifically those resulting from large video feeds. Many wired and wireless connectivity options, including 10 Gigabit Ethernet, Gigabit Ethernet, Wi-Fi 6, and cellular 4G LTE, provide the reliable connectivity that over-the-air updates and cloud offload of mission-critical data depend on. 5G wireless is poised to increase these options further. Integrated CAN bus support enables logging of data from vehicle networks and buses. Rich information such as vehicle and wheel speed, engine RPM, steering angle, and more can be evaluated in real time to add tremendous value to AI-based systems.
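As an illustration of how logged vehicle-bus data might be turned into usable signals, the Python sketch below decodes one 8-byte frame payload. The byte layout, scale factors, and function name are hypothetical; in a real vehicle, the signal definitions come from the manufacturer's bus documentation (commonly a DBC file).

```python
import struct

# Hypothetical 8-byte payload layout (big-endian), assumed for this sketch:
#   bytes 0-1: wheel speed, unsigned, 0.01 km/h per bit
#   bytes 2-3: engine speed, unsigned, 0.25 rpm per bit
#   bytes 4-5: steering angle, signed, 0.1 degree per bit
#   bytes 6-7: unused padding
FRAME_FORMAT = ">HHhxx"


def decode_vehicle_frame(payload: bytes) -> dict:
    """Convert a raw payload into scaled engineering values."""
    if len(payload) != struct.calcsize(FRAME_FORMAT):
        raise ValueError("expected an 8-byte payload")
    raw_speed, raw_rpm, raw_angle = struct.unpack(FRAME_FORMAT, payload)
    return {
        "wheel_speed_kmh": raw_speed * 0.01,
        "engine_rpm": raw_rpm * 0.25,
        "steering_angle_deg": raw_angle * 0.1,
    }


# Build a sample payload with the same assumed layout, then decode it.
sample_payload = struct.pack(FRAME_FORMAT, 9656, 8000, -125)
signals = decode_vehicle_frame(sample_payload)
print(signals)
```

Decoded values like these can then be fed to an inference model alongside camera or other sensor data.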

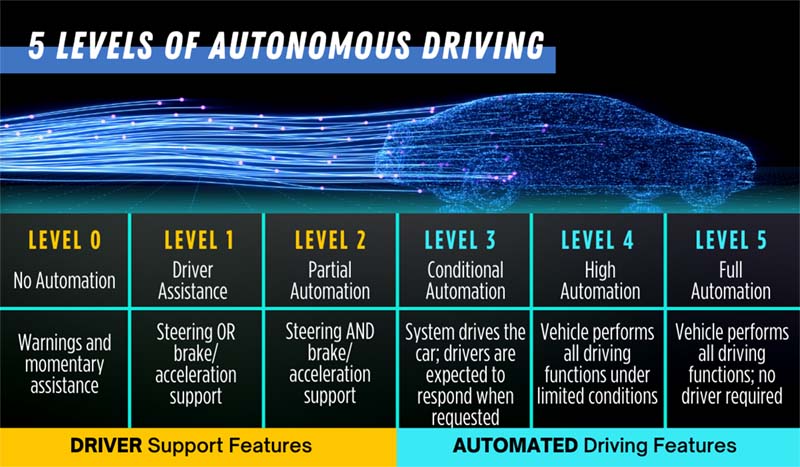

Autonomous driving and driver-assist systems are becoming more sophisticated, creating a competitive, fast-moving design landscape. For ADAS developers, improved AI algorithms are only one component of system design. Effective data handling is critical to deep learning and inference processes, ideally pairing software development and specialized hardware strategies for greater autonomous performance and smarter, safer designs.

About the author

Dustin Seetoo crafts Premio’s technical product marketing initiatives for industries focused on the hardware engineering, manufacturing, and deployment of industrial Internet of Things (IIoT) devices and x86 embedded and edge computing solutions. Connect with Dustin via LinkedIn or dustin.seetoo@premioinc.com.
