What has the tiny microcontroller got to do with the mighty artificial intelligence (AI) world and its technology offshoots like machine learning and deep learning? After all, AI designs have mostly been associated with powerful CPUs, GPUs, and FPGAs.

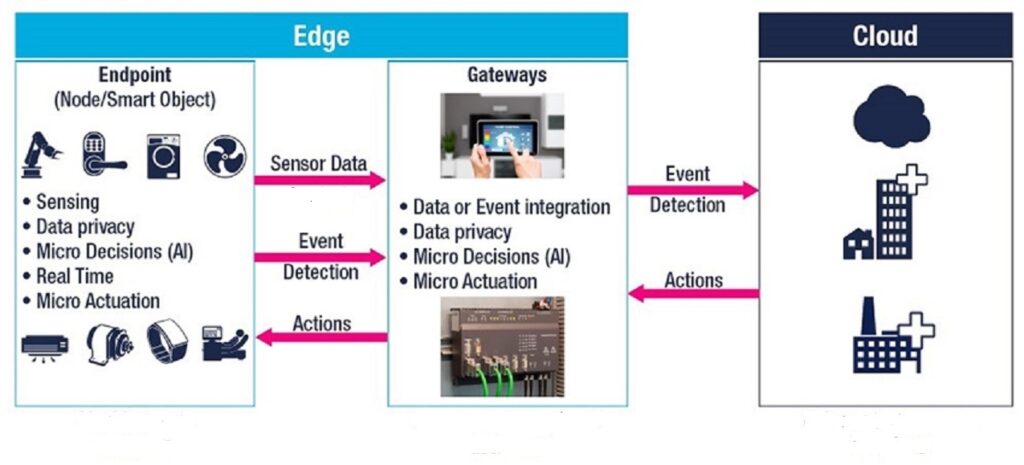

Right? While this notion is not far from reality, it’s quickly changing with AI’s journey from the cloud to the edge, where AI compute engines allow MCUs to push the boundaries of what’s possible for embedded applications. That, first and foremost, enables embedded designs to improve real-time responsiveness and harden device security against cyberattacks.

MCUs equipped with AI algorithms are taking on applications that encompass functions such as object recognition, voice-enabled services, and natural language processing. They also facilitate greater accuracy and data privacy for battery-operated devices in Internet of Things (IoT), wearable, and medical applications.

So, how do MCUs implement the AI functions in edge and node designs? Below is a sneak peek into three fundamental approaches that enable MCUs to perform AI acceleration at the IoT network edge.

Three MCU + AI venues

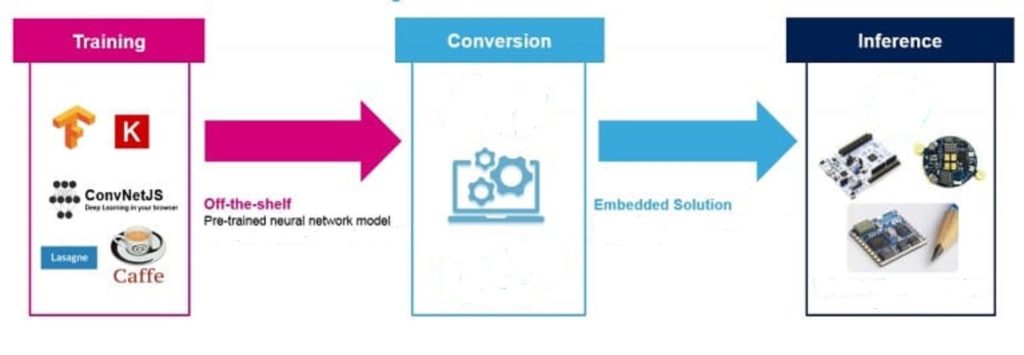

The first, and probably the most common, approach relies on model conversion for a range of neural network (NN) frameworks, such as Caffe2, TensorFlow Lite, and Arm NN, to deploy cloud-trained models and inference engines on MCUs. Software tools take pre-trained neural networks from the cloud and optimize them for MCUs by converting them into C code.

The optimized code running on MCUs can perform AI functions in voice, vision, and anomaly detection applications. Engineers can download these tools into their MCU configuration environment and run inference with the optimized neural networks. These AI toolsets also offer code examples for neural network-based AI applications.
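To make the idea of a network "converted into C code" more concrete, here is a minimal sketch of what such output could look like for a single, tiny fully connected layer. It is not the output of any particular tool: the names `kWeights`, `kBias`, `dense_relu`, and `argmax`, the layer sizes, and the weight values are all hypothetical, and a real converter would typically also handle requantization (scales and zero points), which is omitted here.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical converter output: one small layer whose trained parameters
   are frozen into constant arrays that can be placed in flash. */
#define N_IN  3
#define N_OUT 2

static const int8_t  kWeights[N_OUT][N_IN] = { { 2, -1, 3 }, { -4, 5, 1 } };
static const int32_t kBias[N_OUT]          = { 10, -20 };

/* Integer-only dense layer followed by ReLU: out = max(0, W * in + b). */
void dense_relu(const int8_t in[N_IN], int32_t out[N_OUT])
{
    assert(in != 0 && out != 0);
    for (int o = 0; o < N_OUT; ++o) {
        int32_t acc = kBias[o];
        for (int i = 0; i < N_IN; ++i) {
            /* Widen to 32 bits before multiplying to avoid overflow. */
            acc += (int32_t)kWeights[o][i] * (int32_t)in[i];
        }
        out[o] = acc > 0 ? acc : 0;
    }
}

/* Index of the largest output, used here as the predicted class. */
int argmax(const int32_t v[N_OUT])
{
    int best = 0;
    for (int o = 1; o < N_OUT; ++o) {
        if (v[o] > v[best]) {
            best = o;
        }
    }
    return best;
}
```

The application then calls `dense_relu()` on a buffer of quantized sensor samples and reads the class with `argmax()`. Because the arithmetic is integer-only and the parameters are constants, no floating-point unit or heap allocation is needed.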

The second approach bypasses the need for pre-trained neural-network models borrowed from the cloud. Designers can integrate AI libraries into microcontrollers and incorporate local AI training and analysis capabilities in their code.

Subsequently, developers can create data models based on signals acquired from sensors, microphones, and other embedded devices at the edge and run applications such as predictive maintenance and pattern recognition.
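As a rough illustration of learning a data model directly on the device, the sketch below keeps a running mean and variance of a sensor signal (Welford's online algorithm) and flags samples that fall far outside what has been observed so far. This is one simple technique chosen for illustration, not the method of any specific AI library; the `SignalModel` type and the `model_*` function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Running statistics of a sensor signal, learned sample by sample. */
typedef struct {
    uint32_t count; /* number of samples seen so far */
    float    mean;  /* running mean */
    float    m2;    /* running sum of squared deviations from the mean */
} SignalModel;

void model_init(SignalModel *m)
{
    assert(m != 0);
    m->count = 0;
    m->mean  = 0.0f;
    m->m2    = 0.0f;
}

/* Welford's update: constant memory, one pass, no sample buffer needed. */
void model_learn(SignalModel *m, float x)
{
    assert(m != 0);
    m->count++;
    float delta = x - m->mean;
    m->mean += delta / (float)m->count;
    m->m2   += delta * (x - m->mean);
}

/* True if x lies more than k standard deviations from the learned mean.
   Squared quantities are compared so that no sqrt() call is required. */
bool model_is_anomaly(const SignalModel *m, float x, float k)
{
    assert(m != 0);
    if (m->count < 2) {
        return false; /* not enough data to estimate a variance yet */
    }
    float var  = m->m2 / (float)(m->count - 1);
    float diff = x - m->mean;
    return diff * diff > k * k * var;
}
```

In a predictive-maintenance setting, `model_learn()` could be fed vibration or current readings while a machine is known to be healthy, after which `model_is_anomaly()` screens new readings. The state is three words of RAM, which suits even very small MCUs.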

Third, the availability of AI-dedicated co-processors is allowing MCU suppliers to accelerate the deployment of machine learning functions. Cores such as the Arm Cortex-M33, which supports DSP extensions and a co-processor interface, leverage popular libraries like CMSIS-DSP to simplify code portability, allowing MCUs tightly coupled with co-processors to accelerate AI functions such as correlation and matrix operations.
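The kernels being accelerated are small and regular, which is what makes them good candidates for DSP instructions or a co-processor. The portable C sketch below shows a dot product, a matrix-vector multiply, and a correlation at a single lag, all built on the same multiply-accumulate loop. The function names are hypothetical; in a real project these loops would typically be replaced by optimized library routines (CMSIS-DSP, for example, provides functions such as `arm_dot_prod_f32` and `arm_mat_mult_f32`).

```c
#include <assert.h>
#include <stddef.h>

/* Multiply-accumulate over two vectors: the core of most NN and DSP kernels. */
float dot_f32(const float *a, const float *b, size_t n)
{
    assert(a != 0 && b != 0);
    float acc = 0.0f;
    for (size_t i = 0; i < n; ++i) {
        acc += a[i] * b[i];
    }
    return acc;
}

/* y = M * x, where M is rows x cols and stored row-major. */
void mat_vec_f32(const float *m, const float *x, float *y,
                 size_t rows, size_t cols)
{
    assert(m != 0 && x != 0 && y != 0);
    for (size_t r = 0; r < rows; ++r) {
        y[r] = dot_f32(&m[r * cols], x, cols);
    }
}

/* Correlation of a signal with a shorter template at one lag. */
float correlate_at(const float *sig, size_t sig_len,
                   const float *tmpl, size_t tmpl_len, size_t lag)
{
    assert(lag + tmpl_len <= sig_len);
    return dot_f32(&sig[lag], tmpl, tmpl_len);
}
```

Keeping the application code behind small functions like these is one way to preserve portability: the bodies can be swapped for hardware-accelerated versions without touching the callers.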

The above software and hardware platforms demonstrate how to implement AI functionality in low-cost MCUs via inference engines developed according to embedded design requirements. That’s crucial because AI-enabled MCUs may well be on their way to transforming embedded device designs in IoT, industrial, smart building, and medical applications.
