Electronics and software tend to be more important than the mechanics of autonomous vehicles. This shift has serious implications for how vehicles are designed.

Puneet Sinha | Mechanical Analysis Div., Mentor, a Siemens business

Though certain autonomous functions, especially driver-assist and active-safety features, have started to show up in some recent-model vehicles, the goal of fully self-driving cars continues to be a challenge. We are at the point where electronics and software tend to be more important than the mechanics. This shift has serious implications for how vehicles are designed. Safety-critical, sense-think-act functions require new development and engineering methodologies.

During the past two decades, digitization has greatly changed the automotive industry. Today’s vehicles include more than 150 electronic control units (ECUs) with accompanying software, which together account for one-third or more of total vehicle cost. The industry is on course for electronics to account for about 50% of vehicle cost by 2030, and software content in cars is expected to triple over the same period. This growth dramatically increases the number of requirements to be validated. Consequently, the practice of front-loading design decisions via digital solutions, once done simply to minimize costs, has now become indispensable for coping with growing development complexity.

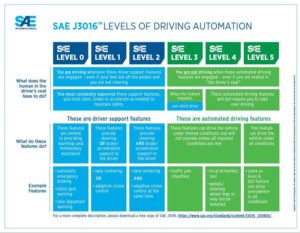

Autonomy Level 2 and Level 3 functions comprising advanced driver-assistance system (ADAS) operations such as pedestrian detection, adaptive cruise control, collision avoidance, lane correction and automated parking have already made their way into ordinary vehicles. Fully autonomous cars (SAE Level 4/5) are being tested on roads. Once the right conditions exist, the revolution can begin.

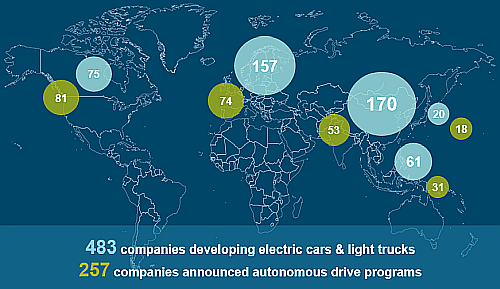

One of the biggest impediments at this point is that governments must still work out legislation and infrastructure. In addition, consumers must overcome their fear of relinquishing control. Putting your safety in the hands of a machine driving at 70 mph requires safety guarantees that give consumers peace of mind. Because of such uncertainties, researchers remain cautious about making precise predictions, but many believe autonomous vehicles will represent a significant proportion of the market by 2030 (up to 15% according to McKinsey).

Autonomy challenges

Vehicles capable of working at autonomy levels 4 and 5 must be capable of observing the environment, interpreting it, making decisions quickly and acting accordingly. However, the self-driving car will have to do much better at making decisions than the best driver because every incident and accident will be closely monitored. One bad outcome could be extremely harmful for the manufacturer or even the entire industry, causing serious delays in market introduction.

The transfer of control also shifts responsibility from the driver to the vehicle OEM or other stakeholders in the vehicle lifecycle management process. This shift has implications for, among other things, insurance when there is an incident. OEMs must therefore be able to certify vehicle performance in all possible scenarios, including inclement weather and poor road conditions. Theoretically, this certification necessitates billions of testing miles. Thus virtual testing, verification and validation will probably be the costliest item in the autonomous vehicle engineering process.

Self-driving functions always combine capabilities that fit into one of the three segments of the sense-think-act model. Success only comes from systematically combining specializations in these disciplines. There will need to be more collaboration than ever among OEM departments, but also between OEMs and suppliers.

Sense: Before it can make proper decisions, the autonomous vehicle needs a robust 360° view in all weather and traffic conditions. So sensors and the way they integrate into the vehicle are important. All sensors have their strengths and weaknesses, and the industry has concluded that no single kind of sensor can handle the job on its own. Thus autonomous vehicles will require a combination of lidars, radars, cameras and ultrasonic sensors.

Problems can manifest themselves at various stages ranging from chip design, electronic design, structure and attachment points to integration with the vehicle. Vehicle integration can involve incorporation of active cooling or dealing with moisture, fog or other issues. Having a digital thread makes design and integration more efficient as any changes can immediately propagate to all levels. Having a workflow that integrates the simulation of sensor electronics with CAD, thermal, and electromagnetics simulations lets sensor vendors both meet size and cost metrics without sacrificing performance, and account for the complexities of specific vehicle locations.

Sensor vendors and OEMs must also ensure sensors will continue operating reliably for the lifespan of the vehicle. This task is particularly challenging because several new sensor technologies and startups are becoming a major part of the supply chain. It’s difficult for sensor makers to answer the reliability question on their own. They do not always work directly with the OEMs during development, so they cannot just mount the sensors in the vehicles and test them. But an integrated workflow—including electronics, thermal and dynamic structural simulation—can allow both OEMs and sensor makers to front-load lifetime predictions.

Additionally, we must ensure sensors perform well in all weather and traffic conditions. Reliance only on testing is not practical or even feasible: By many estimates, it will take more than eight billion miles of testing, including safety-critical edge cases, to ensure this reliability. Thus it will take physics-based simulation to verify sensor performance while also accounting for inclement weather and traffic scenarios.
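
To put the eight-billion-mile figure in perspective, a back-of-the-envelope calculation helps. The sketch below is only illustrative: the fleet size, average speed and daily driving hours are assumptions chosen for the example, not figures from any OEM, and the function name `years_of_testing` is hypothetical.

```python
# Back-of-the-envelope estimate: how long would physical road testing take?
# All fleet parameters below are illustrative assumptions.

TARGET_MILES = 8_000_000_000  # the "more than eight billion miles" cited in the text


def years_of_testing(target_miles: float, fleet_size: int,
                     avg_speed_mph: float, hours_per_day: float) -> float:
    """Return the calendar years a test fleet needs to accumulate target_miles."""
    miles_per_day = fleet_size * avg_speed_mph * hours_per_day
    return target_miles / miles_per_day / 365.0


if __name__ == "__main__":
    # Hypothetical fleet: 100 cars driving around the clock at a 25 mph average.
    years = years_of_testing(TARGET_MILES, fleet_size=100,
                             avg_speed_mph=25.0, hours_per_day=24.0)
    print(f"Estimated duration: {years:.0f} years")
```

Under these assumed numbers the fleet covers 60,000 miles a day and would need several centuries to reach the target, which is why road testing alone is not considered feasible.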

Think: The data from the different vision and non-vision sensors must be combined for use in making decisions and triggering actuators. This combination process is critical and must meet the highest safety and security standards. Further, it must happen in real time. The latter is only possible with the support of built-in intelligence, enabling the vehicle to quickly recognize all possible scenarios. Crucial to the success of AV designs are both the training and validation of machine learning algorithms and low latency data fusion.

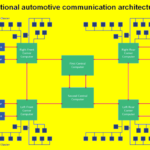

Currently, ADAS setups generally use a distributed computation architecture that carries out data processing at each node or sensor type. This distributed computation architecture has important disadvantages. They include unacceptable system latency in the transfer of safety-critical information, the loss of potentially useful data at the edge nodes, and a rapid boost in cost and power consumption as systems become more complex.

But a centralized raw data-fusion platform can eliminate the inherent limitations of these distributed architectures. This approach connects raw sensor data to a central automated driving compute module over high-speed communication lines. The compute module then fuses this data in real time. The high-speed, low-latency communication framework makes all sensor data, raw and processed, available across the entire system. Data processing takes place when required only in the region of interest. Doing so dramatically reduces the CPU load and fully supports autonomous driving functions while consuming less than 100 W of power.
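
As a much-simplified illustration of why combining data from several sensors helps, the sketch below fuses independent range estimates of the same object using inverse-variance weighting. This is a textbook technique swapped in for illustration, not the raw-data fusion platform described above; the sensor labels, distances and noise variances are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str        # hypothetical sensor label
    distance_m: float  # measured distance to the object, in meters
    variance: float    # assumed measurement noise variance, in m^2


def fuse(readings: list[RangeReading]) -> tuple[float, float]:
    """Inverse-variance weighted mean of independent readings.

    Returns (fused_distance_m, fused_variance).
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    readings = [
        RangeReading("radar", 50.0, 0.25),
        RangeReading("lidar", 50.4, 0.04),
        RangeReading("camera", 52.0, 4.0),
    ]
    distance, variance = fuse(readings)
    print(f"fused distance: {distance:.2f} m, variance: {variance:.4f} m^2")
```

With independent noise, the fused variance is always smaller than that of the best single sensor, which is one reason no single kind of sensor is relied on alone.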

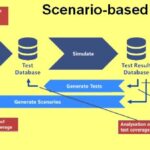

Machine learning and validation: The scarcity of training data and the validation of machine learning algorithms challenge every auto OEM and technology supplier doing AV work. It’s not practical to rely on real-world testing alone, as estimates suggest that billions of training miles will be necessary to enable safe autonomous driving. To date, machine learning has mainly been applied to camera data. The emerging challenge is to use it with non-vision sensors. Additionally, the current process for object classification is manual, which makes it inefficient and hard to scale.

The goal is to accelerate machine learning with a combination of real-life data and synthetic data. Users can generate synthetic training data from world modeling and simulation of cameras, lidars and radars. In addition, data captured during real-world testing can be seamlessly converted to scenarios to automate the process of object classification.
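
One way to picture synthetic data generation is as a sweep over scenario parameters that a simulator would then render for each sensor. The sketch below only enumerates the combinations; the parameter names and values are hypothetical, and the simulation step itself is outside its scope.

```python
from itertools import product

# Hypothetical scenario dimensions; the values are illustrative only.
SCENARIO_SPACE = {
    "weather": ["clear", "rain", "fog", "snow"],
    "lighting": ["day", "dusk", "night"],
    "ego_speed_mph": [15, 35, 70],
    "actor": ["pedestrian", "cyclist", "vehicle"],
}


def enumerate_scenarios(space: dict[str, list]) -> list[dict]:
    """Return every combination of parameter values as a scenario dict."""
    keys = list(space)
    return [dict(zip(keys, combo))
            for combo in product(*(space[k] for k in keys))]


if __name__ == "__main__":
    scenarios = enumerate_scenarios(SCENARIO_SPACE)
    print(len(scenarios), "scenarios")
    print(scenarios[0])
```

Even this tiny grid yields 108 scenarios, and each added dimension multiplies the count, which hints at why manual labeling and road testing do not scale.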

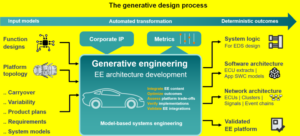

Act: Ultimately, an autonomous vehicle must act upon decisions. These actions involve technologies in three areas: the E/E architecture, ECUs with embedded software, and control algorithms. Vehicle autonomy is forcing an explosion in the complexity of E/E architecture. Even in today’s (Level 1/2) luxury cars, one can already find six to eight sensors, over 150 ECUs, and more than 5,000 m of electrical cable. Operation at autonomy Level 4 or 5 will drastically boost development complexity, raising new challenges.

E/E system complexity makes it impractical to define the E/E architecture manually or to treat autonomous functions individually. Instead, designers must take a model-based approach that delivers deterministic outcomes from the start. Companies can start from a deterministic, overarching multi-domain base model. This model is refined step by step and covers all components, including electrical wiring, software, hardware and networks. This approach lets engineering departments choose between design options and virtually evaluate their implications for all domains. Designers can deliver the optimized architecture faster and with more confidence through performance balancing, trade-off analysis, and verification and validation during early development stages.

In the control algorithm category, there are new challenges for autonomous vehicles. The traditional way of constructing a control algorithm is to monitor conditions and take action based on the state of the input variables. But this sort of reactive control will no longer be sufficient. Control algorithms for autonomous vehicles must anticipate conditions, accounting not just for vehicle dynamics but also planning trajectories that include what-if scenarios for the environment and traffic around the vehicle. For example, algorithms for automated valet parking must read sensor inputs and include tire dynamics in trajectory planning, which is critical for low-speed maneuvers.
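
The difference between reactive and anticipatory control can be shown with a toy braking decision. The sketch below is a deliberately minimal illustration: it assumes a constant closing speed over the prediction horizon, whereas a real planner would also model vehicle dynamics and multiple what-if trajectories. The function names and all numbers are hypothetical.

```python
def reactive_brake(gap_m: float, min_gap_m: float) -> bool:
    """Reactive rule: brake only once the current gap is already too small."""
    return gap_m < min_gap_m


def anticipatory_brake(gap_m: float, closing_speed_mps: float,
                       min_gap_m: float, horizon_s: float,
                       step_s: float = 0.1) -> bool:
    """Predictive rule: brake if the gap is projected to drop below min_gap_m
    within horizon_s, assuming the closing speed stays constant."""
    steps = int(round(horizon_s / step_s))
    for k in range(steps + 1):
        projected_gap = gap_m - closing_speed_mps * (k * step_s)
        if projected_gap < min_gap_m:
            return True
    return False


if __name__ == "__main__":
    # Hypothetical scenario: 30 m gap, closing at 8 m/s, 10 m minimum gap.
    gap, closing, min_gap = 30.0, 8.0, 10.0
    print("reactive:", reactive_brake(gap, min_gap))
    print("anticipatory:", anticipatory_brake(gap, closing, min_gap, horizon_s=3.0))
```

In this scenario the reactive rule does nothing because the gap is still 30 m, while the anticipatory rule brakes because the gap is projected to fall below 10 m within the three-second horizon.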

By 2030, it’s anticipated that a significant share of vehicles will be fully autonomous. The fact that software will gain importance over mechanics, and that self-driving cars will have to completely replace the driver, will have huge implications for many aspects of automotive development.

Automakers and their suppliers will have to revolutionize the processes used to deliver products. They will need to allow for constant upgrades and performance improvements, safety-related updates, and more. Such measures can only be achieved with product lifecycle management and traceability.

All in all, the sense-think-act model demands expertise in a range of diverse domains. It will be important to work with partners having know-how spanning the entire range of applications.
