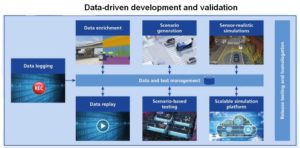

Data replay and enrichment tools help analyze driving scenarios and verify simulations based on real-world events.

Jace Allen, ADAS/AD Engineering and Business Development, dSPACE Inc.

For ADAS and highly automated driving (HAD) applications, the road to homologation (the process of showing that a product meets regulatory standards and specifications) is a long journey that begins and ends with the data. In fact, it’s all about the data.

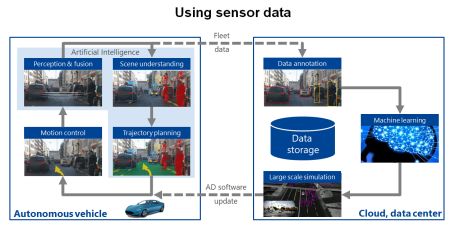

The moment the vehicle’s perception sensors capture data, a complex process begins. The data must be taken through an intensive series of checks and balances to validate compliance with standards and specifications, including safety and technical requirements. This process requires powerful, flexible, end-to-end, data-driven development tools.

The first step toward homologation is data logging in the actual vehicle. Logged data supports the development, validation, and optimization of artificial intelligence (AI) algorithms and electronic controls for autonomous functions. Specifically, data logging supports the following activities:

- Data enrichment to train AI/machine learning algorithms

- Prototyping of sensor fusion and perception algorithms in the vehicle

- Playback of recorded data in the laboratory to simulate test drives and support large-scale simulation

- Real-time trajectory planning to determine the best position, velocity, acceleration, etc., of the vehicle in a driving scenario

- Motion control development for optimal control of the autonomous vehicle in reaction to changing parameters and system inputs

Autonomous prototype vehicles generate huge amounts of data (petabytes) from their numerous perception sensors (lidar, cameras, radar, ultrasonic). Buses and networks (CAN, CAN FD, Ethernet, FlexRay, etc.) get this data where it needs to go. V2X interfaces such as DSRC (Dedicated Short-Range Communications) or 4G/5G cellular, as well as GPS and electronic horizon capabilities with HD maps, are also typically part of the data logging system.

The system must support multiple interfaces, and it must support time synchronization across all of them so that logged data is precisely time-stamped for synchronized replay. Additionally, the system must be scalable and provide sufficient bandwidth and processing power to record the vast amounts of incoming data from the perception sensors. This necessitates a high-end storage system with several terabytes of space. One such system is the dSPACE Autera system, which puts the power of a Linux server into the vehicle.
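Because every interface is stamped against a common clock, synchronized replay essentially comes down to merging the per-interface recordings into one time-ordered stream. The Python sketch below shows that idea with the standard library's `heapq.merge`; the `LoggedMessage` record, its fields, and the channel names are hypothetical and do not reflect the Autera log format.

```python
import heapq
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class LoggedMessage:
    timestamp_ns: int  # time stamp from the shared, synchronized clock
    interface: str     # hypothetical channel name, e.g. "CAN1" or "CAM_FRONT"
    payload: bytes


def merge_streams(streams: Iterable[Iterable[LoggedMessage]]) -> Iterator[LoggedMessage]:
    """Lazily merge per-interface logs, each already sorted by time stamp,
    into a single time-ordered stream for synchronized replay.
    Messages with equal time stamps keep the order in which the streams were passed."""
    return heapq.merge(*streams, key=lambda m: m.timestamp_ns)


# Usage with two tiny hypothetical logs
can_log = [LoggedMessage(1_000, "CAN1", b"\x01"), LoggedMessage(3_000, "CAN1", b"\x02")]
cam_log = [LoggedMessage(2_000, "CAM_FRONT", b"frame0")]
merged = list(merge_streams([can_log, cam_log]))
print([m.interface for m in merged])  # ['CAN1', 'CAM_FRONT', 'CAN1']
```

`heapq.merge` never loads whole logs into memory, which matters when each recording can be many gigabytes.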

Once data becomes available, either collected from the vehicle’s sensors or imported from other sources, it must be enriched to convert it into a usable form. Data enrichment involves annotating and segmenting the data and adding further details to it. It plays a fundamental role in training AI algorithms and neural networks. It is critical for teaching AV systems to identify targets, and it can also be used to extract realistic driving scenarios from real-world data based on ground truth.
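As a rough illustration of extracting a scenario from enriched data, the sketch below scans one annotated object track for a cut-in, that is, the moment a labeled vehicle crosses from an adjacent lane into the ego lane ahead. The `TrackSample` record, the 1.75 m half lane width, and the 40 m look-ahead are assumptions chosen for the example, not parameters of any dSPACE or UAI tool.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class TrackSample:
    t: float               # time in seconds
    lateral_m: float       # lateral offset from the ego lane center (m), left positive
    longitudinal_m: float  # distance ahead of the ego vehicle (m)


def find_cut_ins(track: Sequence[TrackSample], lane_half_width_m: float = 1.75,
                 max_gap_m: float = 40.0) -> List[float]:
    """Return the times at which an annotated object enters the ego lane
    from outside it while being at most max_gap_m ahead of the ego vehicle."""
    events = []
    for prev, cur in zip(track, track[1:]):
        was_outside = abs(prev.lateral_m) > lane_half_width_m
        now_inside = abs(cur.lateral_m) <= lane_half_width_m
        ahead = 0.0 < cur.longitudinal_m <= max_gap_m
        if was_outside and now_inside and ahead:
            events.append(cur.t)
    return events


# Usage: a vehicle drifting in from the left lane
track = [TrackSample(0.0, 3.5, 30.0), TrackSample(0.5, 2.5, 28.0),
         TrackSample(1.0, 1.5, 26.0), TrackSample(1.5, 0.2, 25.0)]
print(find_cut_ins(track))  # [1.0]
```

Each detected event time could then serve as the anchor for cutting a short scenario out of the recording.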

In 2019, dSPACE acquired understand.ai (UAI), a firm that provides training and validation data used to develop computer vision and machine-learning models for autonomous vehicles. UAI also offers one of the world’s fastest point-cloud rendering tools. (Point clouds resemble solid meshes but are simpler and faster to generate, and they usually have a higher resolution than a solid mesh of the same size.)

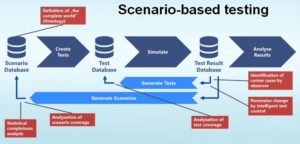

With vehicle perception sensors generating petabytes of data, one might wonder how all that data gets converted into the right number of test scenarios. A second question is how to ensure that these scenarios are logical and represent real-world conditions.

The appropriate level of realism and complexity can only come from running large-scale simulations. These simulations require reproducing numerous scenarios, including critical traffic situations and edge cases.

Simulated scenarios are created manually or automatically from measurement data extracted from perception sensors, object lists, positioning data, and maps. Advanced physics-based simulation models representing different real-world conditions (e.g., roads, 3D scenery, dynamic traffic) are built from this data to validate ADAS/AD functions. These scenarios can be played out on software-in-the-loop (SIL), hardware-in-the-loop (HIL), or cloud-based platforms.

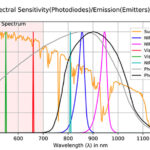

There are several requirements for building models that are realistic enough for use in AV simulations. One is that the objects in the model must have material properties so that they behave the same way as the physical assets they represent.

Models of camera sensors should also provide high-fidelity images and be able to modify them for lighting effects (e.g., glare, flashes, reflections), distortion, vignetting, and chromatic aberration.

Models of radar sensors should be able to calculate polarimetric measurements. (Radars often use wave polarization in post-processing to improve the characterization of targets. Polarimetry can estimate the texture of a material, help resolve the orientation of small structures in the target, and resolve the number of bounces of the received signal.) Radar models should also handle specular (mirror-like) reflections, diffuse scattering (spread over a wide range of directions), and multipath propagation.
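Of the camera effects listed above, vignetting is the simplest to sketch. The Python example below applies only the textbook cos^4 law of natural vignetting, assuming an ideal lens with the optical axis at the image center and a focal length given in pixels. Production camera models add lens-specific falloff, distortion, and the other effects mentioned, so treat this as an illustration of the principle; the function names are hypothetical.

```python
import math
from typing import List


def cos4_gain(x: float, y: float, cx: float, cy: float, focal_px: float) -> float:
    """Relative illumination at pixel (x, y) under the cos^4 law,
    where theta is the off-axis angle of the ray through that pixel."""
    r = math.hypot(x - cx, y - cy)
    theta = math.atan2(r, focal_px)
    return math.cos(theta) ** 4


def apply_vignetting(image: List[List[float]], focal_px: float) -> List[List[float]]:
    """Darken a grayscale image (list of rows) toward its corners."""
    h, w = len(image), len(image[0])
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0  # assume the optical center is the image center
    return [[image[y][x] * cos4_gain(x, y, cx, cy, focal_px) for x in range(w)]
            for y in range(h)]


# Usage: a uniformly lit 5x5 image with a (very short) 4-pixel focal length
flat = [[100.0] * 5 for _ in range(5)]
out = apply_vignetting(flat, focal_px=4.0)
print(round(out[2][2], 1), round(out[0][0], 1))  # center stays bright, corner is darker
```

At an off-axis angle of 45 degrees (r equal to the focal length), the gain is cos^4(45°) = 0.25.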

Lidar sensor models should fully support both scanning and flash-based sensors (in a flash lidar, each laser pulse illuminates a large area and a focal plane array simultaneously detects light from adjacent directions). They should also reproduce rolling shutter effects (where recording the image scan line by scan line distorts moving objects) and weather conditions such as rain. Additionally, simulated scenarios should be able to integrate independently simulated processes for traffic flow, driving, or other aspects of vehicle operation.
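The size of the rolling shutter effect can be estimated with simple arithmetic: if the lines of a frame are captured one after another, a point on an object moving relative to the sensor appears at a different position in each line. The sketch below assumes uniform time spacing between lines and a constant relative speed; the function names are hypothetical.

```python
from typing import List, Tuple


def scan_line_positions(x0_m: float, rel_speed_mps: float, n_lines: int,
                        scan_period_s: float) -> List[Tuple[int, float, float]]:
    """For a sensor that captures n_lines sequentially over one scan period,
    return (line index, capture time, apparent position) of a point that moves
    at rel_speed_mps relative to the sensor. Without the effect, every line
    would report x0_m."""
    line_dt = scan_period_s / n_lines
    samples = []
    for i in range(n_lines):
        t = i * line_dt
        samples.append((i, t, x0_m + rel_speed_mps * t))
    return samples


def skew_m(samples: List[Tuple[int, float, float]]) -> float:
    """Apparent spread of one physical point between the first and last line."""
    return samples[-1][2] - samples[0][2]


# Usage: 64 lines over 100 ms, object moving at 10 m/s relative to the sensor
samples = scan_line_positions(20.0, 10.0, 64, 0.1)
print(round(skew_m(samples), 3))  # roughly 1 m of smear within a single frame
```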

To get to the simulation and validation results needed for homologation, the simulated environment must be able to run across multiple platforms (SIL, HIL, cloud). One tool for setting up simulation models is Automotive Simulation Models (ASM). Available from dSPACE, the tool consists of open Simulink models that can be used to build full vehicle dynamics and driving scenario simulations. Also available is ModelDesk, an interactive graphical editor. Such tools permit driving scenarios or traffic scenes to be simulated with an unlimited number of traffic objects and an unlimited number of sensors.

Data replay as a test strategy

Data replay offers an excellent way to analyze driving scenarios and verify simulations based on real-world data. This can be done with HIL systems such as SCALEXIO, as well as with data logging systems that offer a playback mechanism and software to control synchronized playback (dSPACE Autera with RTMaps is one example). Captured data can be replayed in real time or at a slower rate, which makes it possible to manipulate and/or monitor the streamed data.
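Slower-than-real-time replay boils down to rescaling the gaps between recorded time stamps. The Python sketch below is not the RTMaps or SCALEXIO API; `replay` and its parameters are hypothetical. It takes the clock and sleep functions as parameters so that it can be run deterministically with a fake clock, as in the usage lines.

```python
import time
from typing import Callable, Iterable, Tuple


def replay(
    messages: Iterable[Tuple[float, str]],
    speed: float = 1.0,
    sink: Callable[[str], None] = print,
    sleep: Callable[[float], None] = time.sleep,
    now: Callable[[], float] = time.monotonic,
) -> None:
    """Emit (timestamp_s, payload) pairs while preserving their relative timing.
    speed=1.0 replays in real time; speed=0.5 replays at half speed."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    start_wall = now()
    first_ts = None
    for ts, payload in messages:
        if first_ts is None:
            first_ts = ts
        # Wall-clock time at which this message is due, scaled by the replay speed
        due = start_wall + (ts - first_ts) / speed
        delay = due - now()
        if delay > 0:
            sleep(delay)
        sink(payload)


# Deterministic usage: a fake clock instead of real sleeping
class FakeClock:
    def __init__(self) -> None:
        self.t = 0.0

    def now(self) -> float:
        return self.t

    def sleep(self, seconds: float) -> None:
        self.t += seconds


clock = FakeClock()
emitted = []
log = [(10.0, "a"), (10.5, "b"), (11.0, "c")]
replay(log, speed=0.5, sink=lambda p: emitted.append((clock.now(), p)),
       sleep=clock.sleep, now=clock.now)
print(emitted)  # [(0.0, 'a'), (1.0, 'b'), (2.0, 'c')]
```

Scheduling each message against the start time, rather than sleeping for each gap in turn, keeps small timing errors from accumulating over a long recording.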

An open, end-to-end simulation ecosystem can run scenarios through simulations in a closed-loop process. The vehicle and algorithms are in the loop, adding control to the simulation process and closing the performance loop. Such a system can be fully automated and used for regression testing, with all the advantages of HIL and SIL testing. The system should support standards such as XIL API, OSI, FMI, OpenSCENARIO, and OpenDRIVE, as well as virtual bus simulation for different buses (e.g., CAN, CAN FD, Ethernet).
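To make the closed-loop idea concrete, the sketch below puts a hypothetical automatic emergency braking (AEB) function in the loop with a very simple vehicle model approaching a stationary obstacle. Unlike open-loop replay, the function's braking decision changes the simulated state that it reads in the next step. The 2 s time-to-collision threshold, the 8 m/s² deceleration, and the point-mass model are assumptions; a real regression suite would run the actual SUT against validated vehicle and scenario models.

```python
def aeb_under_test(gap_m: float, ego_speed_mps: float, braking: bool,
                   ttc_threshold_s: float = 2.0) -> bool:
    """Hypothetical AEB function: once time-to-collision drops below the
    threshold, request full braking and keep it latched."""
    if braking:
        return True
    return ego_speed_mps > 0 and gap_m / ego_speed_mps < ttc_threshold_s


def run_closed_loop(v0_mps: float, obstacle_m: float = 100.0, decel_mps2: float = 8.0,
                    dt_s: float = 0.01, duration_s: float = 20.0) -> float:
    """Simulate the ego vehicle approaching a stationary obstacle; return the minimum gap (m)."""
    pos, speed, braking = 0.0, v0_mps, False
    min_gap = obstacle_m - pos
    for _ in range(int(round(duration_s / dt_s))):
        # The SUT reads the simulated state ...
        braking = aeb_under_test(obstacle_m - pos, speed, braking)
        # ... and its output changes the next state: this feedback closes the loop
        if braking:
            speed = max(0.0, speed - decel_mps2 * dt_s)
        pos += speed * dt_s
        min_gap = min(min_gap, obstacle_m - pos)
    return min_gap


# Regression check over several initial speeds: the ego vehicle must stop short of the obstacle
for v0 in (5.0, 10.0, 15.0, 20.0, 25.0):
    print(v0, round(run_closed_loop(v0), 2))
```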

Simulations inject data into the system under test (SUT), such as the AV software stack or an ECU, to analyze the behavior of the software, system components, and subsystems. Injected data can take the form of real sensor inputs, object lists, or a restbus simulation (simulating the other ECUs, or nodes, on a communications bus so that the SUT sees realistic bus traffic and bus load).
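One practical question when setting up a restbus simulation is how much load the simulated nodes put on the bus. The sketch below estimates it for classic CAN with 11-bit identifiers, using the standard frame length of 47 + 8 × DLC bits (including the interframe space) and ignoring stuff bits, so the result is a lower bound. The `RestbusFrame` type, node names, IDs, and periods are made up for the example.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class RestbusFrame:
    node: str        # simulated ECU that would normally send this frame
    can_id: int      # 11-bit identifier
    dlc: int         # payload length in bytes (0-8 for classic CAN)
    period_s: float  # cyclic transmission period


def frame_bits(dlc: int) -> int:
    """Bits in a classic CAN data frame with an 11-bit ID, including the
    3-bit interframe space but excluding stuff bits (a lower bound)."""
    if not 0 <= dlc <= 8:
        raise ValueError("classic CAN DLC must be 0..8")
    return 47 + 8 * dlc


def bus_load(frames: Iterable[RestbusFrame], bitrate_bps: float) -> float:
    """Fraction of bus capacity used by the cyclic frames (1.0 = saturated)."""
    bits_per_second = sum(frame_bits(f.dlc) / f.period_s for f in frames)
    return bits_per_second / bitrate_bps


# Usage: two simulated ECUs on a 500 kbit/s bus
frames = [RestbusFrame("BrakeECU", 0x101, 8, 0.010),
          RestbusFrame("BodyECU", 0x3A0, 4, 0.100)]
print(f"{bus_load(frames, 500_000):.2%}")  # about 2.4 % of the bus
```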

With ground truth sensor simulation (that is, modeling how sensors and on-board systems interact with the virtual world and with calculated surfaces and objects), an object list can simply be inserted into the SUT to test algorithms such as path planning. For a broader scope of testing, however, raw data or target lists must be injected into the simulation so the ECU can perform the data fusion and object detection/tracking itself. Additionally, probabilistic events can be played out to examine different permutations of a scenario or the functional qualities built into the simulation. This stochastic approach to testing is built into the ground-truth sensor models of the ASM tool suite, but it can also be applied to the different parameters associated with any closed-loop scenario test (vehicle, sensor, software, and environment variables).
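A minimal way to apply this stochastic approach to scenario parameters is seeded random sampling within defined ranges, so that any failing variant can be reproduced later. The parameter names, ranges, and the choice of uniform distributions below are assumptions for illustration only; the ASM tool suite's own mechanism is not shown here.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical parameter ranges for one cut-in scenario (assumed values)
PARAM_RANGES: Dict[str, Tuple[float, float]] = {
    "cut_in_distance_m": (10.0, 40.0),
    "target_speed_mps": (15.0, 30.0),
    "road_friction": (0.4, 1.0),
}


def sample_variants(n: int, seed: int = 0,
                    ranges: Dict[str, Tuple[float, float]] = PARAM_RANGES) -> List[Dict[str, float]]:
    """Draw n scenario variants with each parameter uniform in its range.
    A private, seeded generator makes every run reproducible."""
    rng = random.Random(seed)
    return [{name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}
            for _ in range(n)]


# Usage: 100 variants that a closed-loop test harness could execute one by one
variants = sample_variants(100, seed=42)
print(variants[0])
```

Logging the seed alongside each test result is enough to regenerate the exact variant that failed.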

For maximum simulation performance, ground truth sensors can be used in the simulation, in particular for trajectory planning and decision making. This type of simulated environment can exercise algorithms that depend on the detection of lane markings and boundaries, other vehicles, traffic signs, traffic lights, and so on.
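A ground truth sensor can be approximated as an ideal filter over the simulator's object list: anything within range and field of view is reported with its exact position, with no noise, misses, or occlusion. The sketch below assumes a 2D sensor frame with x pointing forward and y to the left; the `GroundTruthObject` type and the default range and field of view are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class GroundTruthObject:
    obj_id: int
    obj_class: str  # e.g. "vehicle", "traffic_sign", "traffic_light"
    x_m: float      # position in the sensor frame, x forward
    y_m: float      # y to the left


def ground_truth_sensor(objects: Sequence[GroundTruthObject], max_range_m: float = 150.0,
                        fov_deg: float = 60.0) -> List[GroundTruthObject]:
    """Ideal sensor: return every object inside the range and horizontal field
    of view, with exact positions (no noise, no occlusion, no false positives)."""
    half_fov = math.radians(fov_deg) / 2.0
    detected = []
    for obj in objects:
        distance = math.hypot(obj.x_m, obj.y_m)
        azimuth = math.atan2(obj.y_m, obj.x_m)
        if distance <= max_range_m and abs(azimuth) <= half_fov:
            detected.append(obj)
    return detected


# Usage: a car ahead, a sign off to the side, a distant car, and a car behind
objs = [GroundTruthObject(1, "vehicle", 50.0, 0.0),
        GroundTruthObject(2, "traffic_sign", 20.0, 30.0),
        GroundTruthObject(3, "vehicle", 200.0, 0.0),
        GroundTruthObject(4, "vehicle", -10.0, 0.0)]
print([o.obj_id for o in ground_truth_sensor(objs)])  # [1]
```

Because the output is already a clean object list, it can feed a planning or decision-making algorithm directly, skipping perception entirely.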

An open, end-to-end simulation can also be fully scalable, so simulations and tests can run in the cloud or on high-performance computing (HPC) clusters. This relies on advanced orchestration (the automated configuration, management, and coordination of computer systems, applications, and services) and on technologies such as Docker on Linux (an open-source project that makes it easy to create containers and container-based applications) and Kubernetes (an open-source container-orchestration system for automating application deployment, now maintained by the Cloud Native Computing Foundation).
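When the same test suite is scaled out on a cluster, each container needs to know which scenarios it owns. The sketch below assumes a Kubernetes Job with `completionMode: Indexed`, which sets the `JOB_COMPLETION_INDEX` environment variable in each pod; `SHARD_COUNT` is a hypothetical variable the Job spec would have to set, and the round-robin split is only one possible scheme.

```python
import os
from typing import List, Mapping, Sequence


def shard(scenarios: Sequence[str], index: int, count: int) -> List[str]:
    """Round-robin share of the scenario list for worker `index` out of `count`."""
    if not 0 <= index < count:
        raise ValueError("index must be in [0, count)")
    return list(scenarios[index::count])


def my_shard(scenarios: Sequence[str], env: Mapping[str, str] = os.environ) -> List[str]:
    # JOB_COMPLETION_INDEX is set by Kubernetes for Indexed Jobs.
    # SHARD_COUNT is a hypothetical variable assumed to be set in the Job spec
    # (for example, to the same value as the Job's completions).
    index = int(env.get("JOB_COMPLETION_INDEX", "0"))
    count = int(env.get("SHARD_COUNT", "1"))
    return shard(scenarios, index, count)


# Usage: worker 2 of 3 picks its scenarios from a list of hypothetical names
scenarios = [f"scenario_{i}" for i in range(10)]
print(my_shard(scenarios, env={"JOB_COMPLETION_INDEX": "2", "SHARD_COUNT": "3"}))
```

Without the environment variables, the sketch falls back to a single worker that runs everything, which is convenient for local debugging.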

In a nutshell, homologation requires a well-orchestrated test system providing the highest level of safety. Bringing everything together into a seamless tool chain helps smooth the process.
