The special demands of sensors needed for autonomous driving have brought suggestions for different measurement metrics.

Leland Teschler, Executive Editor

A few months ago, sensor maker AEye made a media splash when it claimed it had developed a super-speedy LiDAR sensor that could hit a scan rate of 100 Hz or more. That speed outpaces by about a factor of 10 what ordinary LiDAR sensors can produce. So you might think AEye would do a lot of crowing about its new system.

But actually, AEye says scan rates aren’t a particularly good measure of LiDAR performance, or at least not as good as other metrics, when the application area is autonomous vehicles. The crux of AEye’s argument about metrics is that traditional measures generally sized up factors that were strictly determined by the optical hardware, control electronics, and any motion-control mechanics involved. Typical examples of these measures include frame rate, resolution, and range.

The problem, says AEye, is that these factors don’t tell much about how a LiDAR system in an autonomous vehicle behaves. The reason is that LiDAR systems in vehicles actually take on tasks involved in not just sensing but also object recognition. So measurements that just look at the hardware and the electronics miss a big part of what has come to be expected from vehicular LiDAR systems.

Instead, AEye suggests borrowing a few ideas from advanced robotic vision research. Specifically, they say robotic work around object identification that connects search, acquisition, and action points the way toward better automotive LiDAR metrics. Here, search is the ability to detect objects without missing any of them. Acquisition is the process of taking search results and classifying the objects in the search. Action refers to things the sensor does (not things the car does) in response to what it perceives. Typical sensor actions might be to scan for new objects, get more info about a specific object, track an object that seems to be non-threatening, and tell the control system to take evasive action.
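One way to picture the search, acquisition, and action chain is as a small decision rule that runs inside the sensor. The sketch below is purely illustrative and is not AEye's implementation: the names `SensorAction`, `Detection`, and `choose_action`, and the single `is_threat` flag, are assumptions made up for this example. It only maps the four sensor actions mentioned above onto the state of a detection.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class SensorAction(Enum):
    """The four sensor-side actions named in the text (hypothetical labels)."""
    SCAN_FOR_NEW_OBJECTS = "scan"
    GET_MORE_INFO = "interrogate"
    TRACK_OBJECT = "track"
    REQUEST_EVASIVE_ACTION = "evade"


@dataclass
class Detection:
    """Result of the search step; label stays None until acquisition classifies it."""
    label: Optional[str] = None
    is_threat: bool = False  # assumed to be set by the classifier


def choose_action(detection: Optional[Detection]) -> SensorAction:
    """Pick what the sensor (not the car) does next."""
    if detection is None:
        # Search found nothing: keep scanning the scene.
        return SensorAction.SCAN_FOR_NEW_OBJECTS
    if detection.label is None:
        # Something was detected but not yet classified: look again, harder.
        return SensorAction.GET_MORE_INFO
    if detection.is_threat:
        # Classified and judged dangerous: alert the vehicle control system.
        return SensorAction.REQUEST_EVASIVE_ACTION
    # Classified and apparently non-threatening: just keep tracking it.
    return SensorAction.TRACK_OBJECT
```

For instance, `choose_action(Detection(label="pedestrian", is_threat=True))` selects the evasive-action request, while an unclassified detection triggers another, closer look.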

However, those familiar with the use of LiDAR in autonomous vehicle schemes might argue that AEye’s new metrics are a bit self-serving: AEye makes a LiDAR system called iDAR, which looks pretty good when evaluated with the metrics that AEye proposes. Nevertheless, the reasoning behind new LiDAR metrics is that the most important qualities of these systems can’t be judged by the metrics of the LiDAR sensor alone, a point with which few would disagree.

AEye says the conventional frame rate metric lacks a precise notion of time. The time it takes to detect an object depends on several variables besides the scan rate, including object shape, distance, and reflectivity, among others.

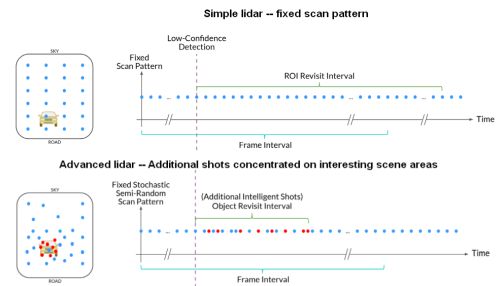

Instead, the company proposes an object revisit rate (ORR) metric. This is basically a measure of how fast the system can take a second look at an object it has decided is “interesting.” The rationale for ORR is that advanced recognition algorithms that see multiple moving objects can get confused if a lot of time elapses between scene samples. Increasingly, the practice is to schedule repeated shots at interesting objects in quick succession and fewer shots at things that aren’t as important.

In simple systems, the LiDAR frame rate is the same as the ORR. But AEye says in more advanced setups that can change what they look at dynamically, some parts of the scene can be examined 1,000x faster than what’s possible with passive sensors.
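The difference between frame rate and ORR can be made concrete with a little arithmetic. The article gives no formal definition of ORR, so the sketch below assumes one: the reciprocal of the mean time between consecutive looks at the same object. The function name `object_revisit_rate_hz` and the timestamps are hypothetical and chosen only for illustration.

```python
from statistics import mean


def object_revisit_rate_hz(shot_times_ms):
    """Assumed ORR: 1 / (mean gap between consecutive shots at one object).

    shot_times_ms holds the times, in milliseconds, at which the sensor
    sampled a single object.
    """
    ordered = sorted(shot_times_ms)
    gaps_ms = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    if not gaps_ms:
        raise ValueError("need at least two shots to define a revisit rate")
    return 1000.0 / mean(gaps_ms)


# Fixed scan pattern at 10 Hz: the object is hit once per 100 ms frame,
# so the revisit rate equals the frame rate.
fixed_pattern_ms = [0, 100, 200, 300, 400]

# Hypothetical agile sensor: extra shots are scheduled at the same object
# every 20 ms while the rest of the scene is sampled less often.
agile_ms = [0, 20, 40, 60, 80]

fixed_orr = object_revisit_rate_hz(fixed_pattern_ms)  # 10.0 Hz
agile_orr = object_revisit_rate_hz(agile_ms)          # 50.0 Hz
```

With a fixed pattern the only way to raise the revisit rate is to speed up the whole frame, whereas a sensor that can redirect its shots raises it for selected objects alone.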

In the same vein, AEye says object detection range is an obsolete metric for LiDAR systems. What matters most is not how quickly an object can be detected, but how quickly a system can ID it, make a threat-level decision, and decide on an appropriate response. Currently, the perception software stack usually classifies and IDs incoming object data, which then gets used to predict trajectories.

AEye argues that a metric called object classification range (OCR) is a better way to measure things because it reduces unknowns such as latency from noise suppression early in the perception stack, pinpointing salient information.

All in all, AEye points out that conventional LiDAR sensors passively search with scan patterns that are fixed in both time and space. But intelligent sensors are increasingly moving to active search and identification of classification attributes of objects in real time. As perception technology and sensor systems evolve, so too should the metrics used to measure their capabilities.
