Semiconductor makers now field sensor equipment complete with built-in safety systems that specifically target autonomous driving applications.

LANCE WILLIAMS | ON Semiconductor

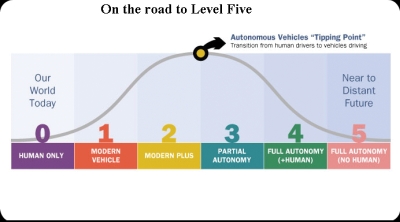

Increasingly sophisticated advanced driver assistance systems (ADAS) are helping cars move up through defined levels of autonomy toward the day when fully autonomous driving is a reality. ADAS is an automotive megatrend that will enable the biggest change in how the world’s population gets from A to B since the inception of commercial flight.

In the automotive sector, almost all the discussion is about the fully autonomous or driverless vehicle. Safety is the key driver in the march to make vehicles more autonomous. WHO 2017 figures show 1.25 million people die in road traffic accidents each year and a further 20 to 50 million people are injured or disabled. The cause of these road traffic accidents is overwhelmingly human error. The U.S. Dept. of Transportation has found that 94% of the two million accidents happening annually were caused by driver mistakes.

Research into autonomous vehicles began in the 1980s, and today about 50 companies are actively developing them, alongside numerous university projects pushing boundaries. Autonomous emergency braking, lane departure warning systems, active cruise control and blind spot monitoring are common features on vehicles produced today, and automated parking is proliferating at pace. These systems provide valuable input to the driver, who ultimately remains in control, for now.

As ADAS evolves, the industry is reaching a tipping point where the vehicle itself is providing integrated monitoring. Companies like ON Semiconductor are addressing the trend through both hardware and software innovation and support for other activities in ADAS.

Sensor fusion is the key to passing this tipping point. Diverse systems in the vehicle are becoming linked, boosting the ability to make more complex, safety-critical decisions and providing a redundancy that will help prevent errors that could lead to accidents.

SENSOR FUSION

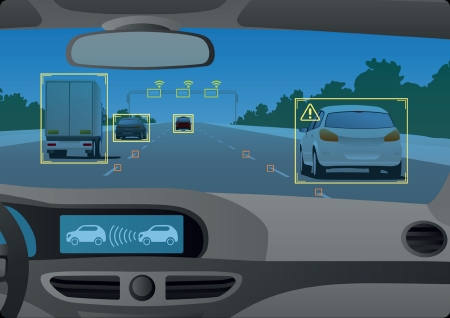

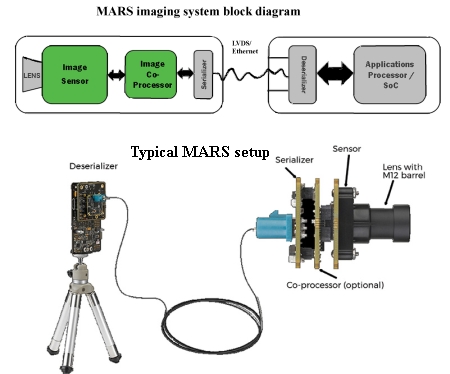

Vision is an increasingly important facet of vehicle technology. Vision sensors now support active safety features that include everything from rear-view cameras to forward-looking and in-cabin ADAS. Engineers combine and process the data from multiple types of sensors to ensure correct decisions, responses and adjustments. This approach may include combinations of image sensors, radar, ultrasound and lidar.
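One simple way to picture the redundancy that fusion provides is a cross-check among independent range estimates. The sketch below is illustrative only: the function name `cross_check`, the sensor names, the 2 m tolerance and the readings are all assumptions, and production fusion stacks use far more elaborate tracking and filtering.

```python
from statistics import median


def cross_check(ranges_m: dict[str, float], tolerance_m: float = 2.0):
    """Return (consensus range, list of sensors that disagree with it).

    ranges_m maps a sensor name to its distance estimate in metres for
    the same object. The median is used as the consensus so that a
    single faulty sensor cannot drag the result away from the others.
    """
    if len(ranges_m) < 3:
        raise ValueError("need at least three sensors to out-vote one")
    consensus = median(ranges_m.values())
    suspects = sorted(
        name for name, r in ranges_m.items()
        if abs(r - consensus) > tolerance_m
    )
    return consensus, suspects


# Hypothetical readings: the lidar value disagrees with the other two.
consensus, suspects = cross_check(
    {"camera": 41.0, "radar": 40.2, "lidar": 12.5}
)
print(consensus, suspects)  # 40.2 ['lidar']
```

With three sensors, one bad reading is out-voted and flagged rather than silently averaged into the decision.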

The safety standard that applies to ADAS is ISO 26262, which covers the functional safety of vehicle electronic systems. It is the automotive-specific adaptation of a broader functional safety standard, IEC 61508. Functional safety focuses primarily on risks arising from random hardware faults as well as systematic faults in system design, hardware and software development, production, and so forth.

Under this standard, manufacturers identify hazards for each system, then determine a safety goal for each of them. Each safety goal is then classified according to one of four possible safety classes, called Automotive Safety Integrity Levels (ASIL). The levels range from ASIL-A (lowest) to ASIL-D (highest). An ASIL is determined by three factors: the severity of the harm a failure could cause, the probability of exposure to a situation in which the failure is hazardous, and the ability of the driver or others involved to control the effect of the failure.
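The classification can be sketched in a few lines. The example below assumes the class indices commonly used with ISO 26262 (severity S1 to S3, exposure E1 to E4, controllability C1 to C3) and a widely cited shorthand in which the sum of the three indices selects the level. The function name `classify_asil` is hypothetical, and combinations below ASIL-A are reported as QM, meaning ordinary quality management suffices. The standard's own table remains the authority.

```python
def classify_asil(severity: int, exposure: int, controllability: int) -> str:
    """Map S/E/C class indices to 'QM' or 'ASIL-A' .. 'ASIL-D'.

    Assumes S in 1..3, E in 1..4, C in 1..3. The S0/E0/C0 classes,
    which carry no ASIL requirement, are deliberately left out.
    """
    if not (1 <= severity <= 3
            and 1 <= exposure <= 4
            and 1 <= controllability <= 3):
        raise ValueError("expected S in 1..3, E in 1..4, C in 1..3")
    total = severity + exposure + controllability
    # Shorthand: a sum of 10 gives ASIL-D, 9 gives C, 8 gives B, 7 gives A.
    levels = {7: "ASIL-A", 8: "ASIL-B", 9: "ASIL-C", 10: "ASIL-D"}
    return levels.get(total, "QM")


# Worst case on all three factors lands in the highest class.
print(classify_asil(3, 4, 3))  # ASIL-D
# Same hazard, but easier for the driver to control: one class lower.
print(classify_asil(3, 4, 2))  # ASIL-C
```

Lowering any single factor by one class lowers the result by one level, which is why improving controllability or reducing exposure is a common way to relax a safety goal.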

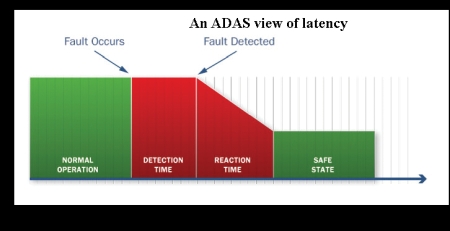

Functional safety starts at the sensor. Issues such as latency and high-speed fault detection are given close attention by automotive OEMs, Tier-Ones and sensor makers alike. There can be catastrophic implications if faults in image sensors used for ADAS go undetected, especially for systems such as adaptive cruise control, collision avoidance and pedestrian detection.

The process of detecting one of the thousands of potential failure modes is processor-intensive, requiring an algorithm for each fault. In fact, some faults are impossible to detect at the system level. Latency within fault-tolerant systems is a primary concern for all system designers – simply put, this is the time between when a fault occurs and when the system returns to a safe state. For safety, the fault must be detected and addressed before it leads to a dangerous event.
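The latency requirement can be expressed as a simple timing budget: detection time plus reaction time must be shorter than the time the fault takes to become dangerous. The sketch below is an illustration only; the names `FaultTiming` and `meets_deadline` and every figure in it are hypothetical rather than taken from any real system.

```python
from dataclasses import dataclass


@dataclass
class FaultTiming:
    detection_ms: float  # fault occurrence -> fault detected
    reaction_ms: float   # fault detected -> system back in a safe state

    @property
    def latency_ms(self) -> float:
        """Total time from fault occurrence to safe state."""
        return self.detection_ms + self.reaction_ms


def meets_deadline(timing: FaultTiming, hazard_deadline_ms: float) -> bool:
    """True if the safe state is reached before the fault turns dangerous."""
    return timing.latency_ms < hazard_deadline_ms


# Hypothetical comparison against a 100 ms deadline: slow detection in
# downstream processing versus fast detection inside the sensor.
slow = FaultTiming(detection_ms=120.0, reaction_ms=50.0)
fast = FaultTiming(detection_ms=5.0, reaction_ms=50.0)
print(meets_deadline(slow, 100.0))  # False
print(meets_deadline(fast, 100.0))  # True
```

Because reaction time is often fixed by the vehicle's actuators, detection time is usually the part of the budget that designers can shorten.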

Vision sensors are becoming more advanced, and functional safety fault detection is moving from the ADAS to the sensor itself. Detection is built-in and faults are identified by design. The benefit of sensor-based detection is better fault detection as well as less pressure on the ADAS processing capacity.

Even today, many ADAS struggle to meet ASIL-B compliance. In the near term, the number of systems required to meet ASIL-B compliance will rise dramatically. Future ADAS will need to meet ASIL-C and ASIL-D compliance if widespread use is to become reality. ON Semiconductor is already active in this area. It has equipped many of its image sensors with sophisticated built-in safety mechanisms to support functional safety.

Driven by the stringent demands of automotive operating environments, image sensors for ADAS are adding features such as light flicker mitigation (LFM), which overcomes the misinterpretation of scenes caused by front or rear LED lighting in the sensor’s field of view; superior infrared performance; and the ability to work in either extremely bright or low-light conditions.

All in all, advanced and functionally safe sensors will be at the heart of ADAS. Fusing these sensors with other in-vehicle technology, backed by robust cyber security, will move the industry past the tipping point to where vehicles can become truly autonomous.
