Your car will know your face and your voice

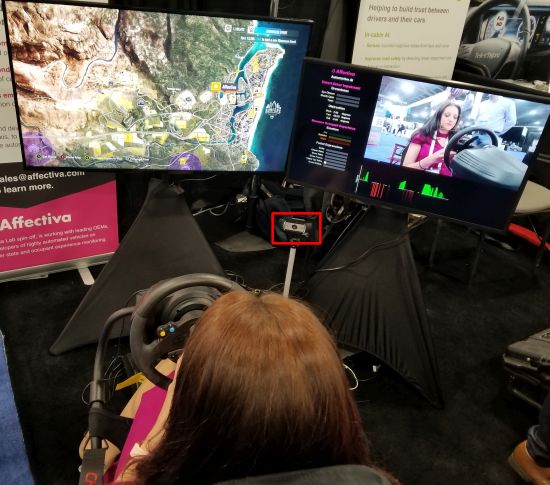

Affectiva demonstrated its automotive AI system, which can tell whether a driver is sleepy, upset, or otherwise cognitively impaired based on an analysis of facial expressions and voice qualities. The image of the driver comes from the camera, which is circled in red in the photo for clarity. The system analyzes facial and vocal expressions locally, so there are no worries about beaming personal images up to the cloud. It employs deep neural networks to analyze the face at the pixel level and classify facial expressions and emotions. It also analyzes acoustic-prosodic features such as tone, tempo, loudness, and pause patterns. The deep learning network uses this analysis to predict the likelihood of an emotion. Affectiva has collected 6.5 million face videos in 87 countries as base data. To integrate the system into a vehicle, developers use Affectiva's C++-based development kit, which runs in real time on embedded ARM64 and Intel x86_64 CPUs.
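To give a feel for what "predicting the likelihood of an emotion" from face and voice might involve, here is a minimal Python sketch. It is not Affectiva's method or API: the label set, the raw scores, the `fuse` function, and the equal weighting are all hypothetical, chosen only to show how per-emotion scores from two separate analyses could be blended and turned into probabilities with a softmax.

```python
import math

# Hypothetical label set, for illustration only.
EMOTIONS = ["neutral", "drowsy", "upset"]

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fuse(face_scores, voice_scores, face_weight=0.5):
    """Blend per-emotion scores from face and voice, then normalize.

    face_weight is an assumed tuning knob, not a documented parameter.
    """
    combined = [
        face_weight * f + (1.0 - face_weight) * v
        for f, v in zip(face_scores, voice_scores)
    ]
    return dict(zip(EMOTIONS, softmax(combined)))

# Made-up scores standing in for the outputs of a face model and a voice model.
likelihoods = fuse([0.2, 2.1, 0.4], [0.1, 1.5, 0.9])
most_likely = max(likelihoods, key=likelihoods.get)
print(most_likely, round(likelihoods[most_likely], 2))
```

In this toy example both sets of scores lean toward "drowsy", so the blended likelihood does too. A production system would presumably learn how to combine the two signals rather than use a fixed weight.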
