Simulation is the only way to get the kind of confidence necessary to field autonomous vehicle functions.

Karsten Krügel, Sven Flake, dSPACE GmbH

How do you ensure that an autonomous vehicle will perform safely when the driver's responsibility has essentially been removed? The need for reliable operation in this scenario is raising major questions within the engineering community about how best to validate autonomous driving functions.

In the absence of the driver, the autonomous vehicle takes over key tasks. It decides how to react in an open situation and how and when it must alert the driver to take back control of the car. The vehicle’s ability to recognize the environment and predict probable changes over the next few seconds is vital.
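Predicting "probable changes over the next few seconds" can be simple or sophisticated. The sketch below is minimal and assumes a constant-velocity motion model, which is the simplest possible assumption and not necessarily what production systems use. The names `TrackedObject` and `predict_positions` are hypothetical. The code extrapolates a tracked object's position over a short horizon:

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    """Hypothetical object track, in ego-vehicle coordinates."""
    x: float   # longitudinal position [m]
    y: float   # lateral position [m]
    vx: float  # longitudinal velocity relative to ego [m/s]
    vy: float  # lateral velocity relative to ego [m/s]


def predict_positions(obj: TrackedObject, horizon_s: float = 3.0, dt: float = 0.5):
    """Extrapolate (t, x, y) under a constant-velocity assumption."""
    steps = int(round(horizon_s / dt))
    return [
        (k * dt, obj.x + obj.vx * k * dt, obj.y + obj.vy * k * dt)
        for k in range(1, steps + 1)
    ]


# A car 30 m ahead in the adjacent lane, closing at 5 m/s and drifting toward the ego lane
car = TrackedObject(x=30.0, y=3.5, vx=-5.0, vy=-0.5)
print(predict_positions(car, horizon_s=3.0, dt=1.0))
# [(1.0, 25.0, 3.0), (2.0, 20.0, 2.5), (3.0, 15.0, 2.0)]
```

Even this simple model shows why precise sensing matters. Under constant velocity, any error in the estimated velocity produces a position error that grows linearly with the prediction horizon.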

The autonomous vehicle must not only monitor open situations closely but also be predictive, and it must sense its environment as precisely as possible. To determine whether a vehicle performs as expected in these kinds of situations, a good place to begin is modeling: not just of a single sensor with a single task, but of the entire vehicle and its surrounding environment. This is a key first step in the validation process.

The number of tests needed to validate the functions of an autonomous vehicle is massive, so it is unrealistic to try to perform all of them in the field. A more practical approach is virtual simulation testing in the lab. This can include model-in-the-loop (MIL), software-in-the-loop (SIL) and hardware-in-the-loop (HIL) methods, as well as test benches enhanced by simulation.

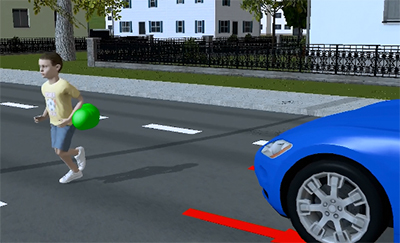

With simulation, a virtual test drive is created from models: models of the vehicle, the onboard sensors, the road, the surrounding traffic, the environment, traffic signs, pedestrians, intersections, GPS, etc. These models enable the simulation to run in real time.

Off-the-shelf simulation models give you a jump start on building virtual test drive scenarios. For example, the dSPACE Automotive Simulation Models (ASM) tool suite supports numerous application areas, such as combustion engines, vehicle dynamics, electric components, hybrid drivetrains, sensors, the traffic environment and road networks. Engineers can use it to model a whole virtual vehicle and its surrounding environment.

In this virtual world, the test vehicle can be equipped with multiple sensors for object detection and recognition. Autonomous vehicles carry numerous sensors that serve different purposes. Some are designed to sense the internal state of the car. Others, usually cameras, radar, Lidar and ultrasonic sensors, are designed to perceive the environment. In a process known as sensor fusion, the data captured and processed by the various sensors are combined to create a more complete and accurate picture.
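The article does not say which fusion algorithm is used. One illustrative technique is inverse-variance weighting, offered here as an assumption rather than as what any particular system does. It applies when two sensors independently measure the same quantity, such as the range to an object reported by both radar and a camera. The fused estimate has a lower variance than either input, which is the "more accurate picture" in its simplest form. The sensor values below are hypothetical:

```python
def fuse(measurements):
    """Inverse-variance weighted fusion of independent estimates.

    measurements: list of (value, variance) pairs for the same quantity.
    Returns (fused_value, fused_variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


radar_range = (50.0, 0.25)   # hypothetical: metres, variance in m^2
camera_range = (52.0, 1.0)   # camera range is assumed to be noisier here
print(fuse([radar_range, camera_range]))  # (50.4, 0.2)
```

Production systems typically use recursive estimators such as Kalman filters. These apply the same weighting idea over time while also tracking object motion.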

Besides the sensors, connectivity also plays a key role in sensing the environment and should be included in the model. Understanding how the vehicle communicates with other traffic participants and external data sources (e.g., via sensor stimulation, over-the-air, GNSS, V2X, GPS, data in the cloud) helps to establish the electronic horizon, a preview of the road ahead that extends beyond the range of the onboard sensors.

Once a model scene is built, the data gathered from the different sensor types and connectivity sources can be integrated into the model to evaluate various driving scenarios or virtual test drives. This combined picture is what the driving function uses to decide which actions to take and how to carry them out.

With a robust model in place, countless virtual test drive scenarios (e.g., oncoming traffic, stop-and-go, pedestrians) can be simulated. They can run online on real-time hardware in a HIL environment, or offline on a PC at your desk.

HIL testing

When it comes to testing the driving functions of an autonomous vehicle, developers need not wait for a finished vehicle prototype. The preferred approach is to start testing early in the virtual world through simulation.

The functions of an autonomous vehicle are under the control of electronic control units (ECUs). So in the absence of a prototype vehicle, it is still possible to begin early validation of autonomous driving functions by testing the real code in the ECUs.

The ECUs are connected to a HIL system that simulates the test drive scenario in real time. A test automation tool (e.g., AutomationDesk) runs the tests automatically. A measurement and calibration tool (e.g., ControlDesk) handles measurement, calibration and diagnostics on the ECUs. Tools like ControlDesk are also used to build custom layouts that visualize the human-machine interface.

In addition, a 3D online animation tool (e.g., MotionDesk) is recommended for visualizing the overall test drive scenario. A real-time script should run on the HIL system to track important events directly on the system. Finally, a test management tool (e.g., SYNECT) can manage all the test data.
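The article does not specify what such a real-time event script looks like. The following is a hypothetical illustration in Python. The actual script would run in the HIL platform's own real-time scripting environment, whose API is not shown here. The sketch watches simulated signals and logs a critical event whenever the time-to-collision (TTC) drops below a threshold, where TTC is the gap divided by the closing speed. The signal layout and the 2 s threshold are assumptions:

```python
def time_to_collision(gap_m, ego_speed_mps, lead_speed_mps):
    """TTC = gap / closing speed; infinite if the ego vehicle is not closing in."""
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0.0:
        return float("inf")
    return gap_m / closing_speed


def track_critical_events(samples, ttc_threshold_s=2.0):
    """Return (t, ttc) each time TTC falls below the threshold.

    samples: iterable of (t, gap_m, ego_speed_mps, lead_speed_mps).
    Only the transition into the critical region is logged, so a
    long critical phase produces one event rather than one per sample.
    """
    events = []
    was_critical = False
    for t, gap, v_ego, v_lead in samples:
        ttc = time_to_collision(gap, v_ego, v_lead)
        is_critical = ttc < ttc_threshold_s
        if is_critical and not was_critical:
            events.append((t, ttc))
        was_critical = is_critical
    return events


samples = [
    (0.0, 40.0, 20.0, 10.0),  # TTC 4.0 s
    (0.1, 30.0, 20.0, 10.0),  # TTC 3.0 s
    (0.2, 15.0, 20.0, 10.0),  # TTC 1.5 s -> event
    (0.3, 12.0, 20.0, 10.0),  # TTC 1.2 s, still critical, no new event
    (0.4, 20.0, 15.0, 10.0),  # TTC 4.0 s, back to normal
    (0.5, 8.0, 15.0, 10.0),   # TTC 1.6 s -> event
]
print(track_critical_events(samples))  # [(0.2, 1.5), (0.5, 1.6)]
```

Logging only the transition into the critical region keeps the event log short. This matters when a script runs at every simulation step.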

Typical tests in a HIL simulation environment include release tests for camera and radar applications, and tests of image processing ECUs, camera-based systems, multisensor systems, V2X applications and GNSS-based driving functions. They also include over-the-air radar-in-the-loop tests.

Virtual validation

If only a few ECU prototypes are available, or none at all, it is still possible to move forward with testing autonomous driving functions through virtual validation.

With virtual validation, the behavior of a function (e.g., automatic parking) is reproduced using a mathematical model. Preferably, these models should be Simulink-based, open and fully parameterizable. This makes simulation possible without the real ECU hardware. The only difference is that the simulation takes place in a SIL test environment instead of a HIL environment.

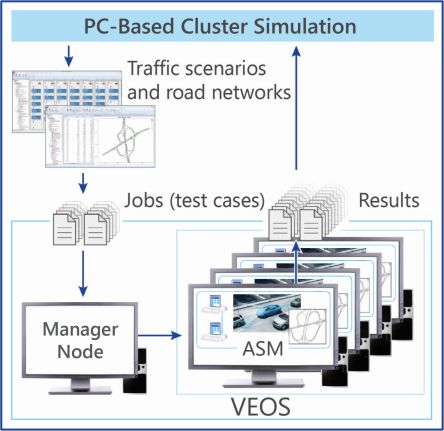

In the virtual validation test scenario, the HIL system is replaced with a PC-based SIL simulation (e.g., VEOS). The same models that run on a HIL system can also run on a PC-based SIL system, but virtual ECUs are connected instead of the real ECUs.

Virtual ECUs are created from the software components that make up the ECU software and can be integrated directly into the SIL test scenario. Simulink models and third-party models based on the Functional Mock-up Interface (FMI) standard can also be integrated. For PC-based simulation, the generated ECU code should be compiled for the Microsoft Windows platform.
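Conceptually, a SIL run is a fixed-step loop in which the plant model and the virtual ECU exchange signals at each step. The Python sketch below illustrates only that structure and is not VEOS or any dSPACE API. The trivial longitudinal vehicle model and the proportional speed controller standing in for the virtual ECU are hypothetical, as are the gain and the actuator limits:

```python
from typing import Callable


def vehicle_model(speed_mps: float, accel_cmd_mps2: float, dt_s: float) -> float:
    """Hypothetical plant model: integrates commanded acceleration (no drag, no slope)."""
    return max(0.0, speed_mps + accel_cmd_mps2 * dt_s)


def virtual_ecu_speed_control(target_mps: float, speed_mps: float) -> float:
    """Hypothetical ECU function: proportional speed controller with actuator limits."""
    kp = 0.5  # controller gain [1/s], assumed
    return max(-3.0, min(2.0, kp * (target_mps - speed_mps)))


def run_sil(ecu: Callable[[float, float], float], target_mps: float,
            speed0_mps: float, dt_s: float = 0.01, t_end_s: float = 20.0) -> float:
    """Fixed-step co-simulation loop: ECU and plant exchange signals each step."""
    speed = speed0_mps
    for _ in range(int(round(t_end_s / dt_s))):
        accel = ecu(target_mps, speed)             # virtual ECU computes its output
        speed = vehicle_model(speed, accel, dt_s)  # plant model advances one step
    return speed


final_speed = run_sil(virtual_ecu_speed_control, target_mps=25.0, speed0_mps=20.0)
print(f"{final_speed:.2f}")  # 25.00
```

The ECU is passed in as a plain function, so the same plant model can be reused unchanged with a different controller implementation. This mirrors, in miniature, how the same models serve both HIL and SIL setups.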

Switching back and forth between HIL and PC-based SIL simulation is easy because the same models can be shared, and the same tools can be used for measurement, test automation and model parameterization.

Reacting in an unpredictable world

The world is unpredictable, and unexpected situations will arise, so autonomous driving functions cannot be validated with a fixed set of predefined test drives alone. Developers must think about how to create test drives that reflect an unpredictable world. Simply generating test drives at random is not sufficient either. Developers need many test situations, and those situations must be relevant ones.

For example, determining how a vehicle reacts when it meets other traffic requires many variants of a test drive. Developers must consider how the vehicle reacts when the other participants follow the traffic rules and what happens when they do not. Each additional varying detail multiplies the number of variants, so the number of test scenarios can quickly explode.
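A quick count shows how fast this grows. The parameters and values below are hypothetical examples for a single "vehicle meets traffic" test case. Every added dimension multiplies the number of variants rather than adding to it:

```python
from itertools import product
from math import prod

# Hypothetical variation dimensions for one "vehicle meets traffic" test case
variations = {
    "other_vehicle_behavior": ["follows_rules", "runs_red_light", "cuts_in"],
    "ego_speed_kmh": [30, 50, 70],
    "weather": ["dry", "rain", "fog"],
    "time_of_day": ["day", "night"],
    "pedestrian_present": [False, True],
}

# Enumerate every combination as a concrete scenario description
scenarios = [dict(zip(variations, combo)) for combo in product(*variations.values())]
print(len(scenarios))  # 108 = 3 * 3 * 3 * 2 * 2

# Adding one more dimension with four values multiplies the total again
variations["road_type"] = ["urban", "rural", "highway", "parking_lot"]
print(prod(len(values) for values in variations.values()))  # 432
```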

Evaluating the many autonomous driving function scenarios takes a considerable number of test drives. Tests must run both during development and for the final release, so efficient, time-saving methods are extremely valuable. Organizations can speed up testing by reusing existing test cases and running simulations on PC clusters.

Not every test scenario has to be created manually. Thousands of test cases can be imported from sources such as OpenDRIVE, OpenCRG, OpenSCENARIO, OpenStreetMap, Open Simulation Interface (OSI), Simulation of Urban Mobility (SUMO), VISSIM, HERE, TomTom, your own GPS data, etc. Accident databases (e.g., GIDAS) are another valuable source. These formats, tools and data sources help configure the simulation models so that they realistically reproduce defined scenarios during simulation.
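This small illustration shows what importing such data involves. OpenDRIVE files are XML documents whose `<road>` elements carry attributes such as `id`, `name` and `length`. The sketch uses Python's standard `xml.etree.ElementTree` on a heavily simplified, hypothetical snippet to list the roads a scenario would use. Real files also contain geometry, lanes and more, and details may differ between OpenDRIVE versions:

```python
import xml.etree.ElementTree as ET

# Heavily simplified, hypothetical OpenDRIVE content (geometry and lanes omitted)
SAMPLE_XODR = """<OpenDRIVE>
  <header revMajor="1" revMinor="6" name="demo"/>
  <road name="Main Street" length="250.0" id="1" junction="-1"/>
  <road name="Side Road" length="80.5" id="2" junction="-1"/>
</OpenDRIVE>"""


def list_roads(xodr_text):
    """Return (id, name, length_m) for each top-level <road> element."""
    root = ET.fromstring(xodr_text)
    return [
        (road.get("id"), road.get("name"), float(road.get("length")))
        for road in root.findall("road")
    ]


roads = list_roads(SAMPLE_XODR)
print(roads)  # [('1', 'Main Street', 250.0), ('2', 'Side Road', 80.5)]
print(sum(length for _, _, length in roads))  # 330.5
```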

As test cases pile up, an efficient way to run numerous simulation scenarios automatically is on a cluster of PC-based simulation computers (e.g., running VEOS). In this setup, virtual test simulations run in parallel, and the test system can easily be scaled up or down as needed.

The PC cluster is typically controlled by a central unit that schedules the execution of the test case simulations. In one day, such a cluster can run enough tests to equal thousands of miles of driving.
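The scheduling idea can be sketched with Python's `concurrent.futures`. Here a thread pool merely stands in for the cluster nodes. `run_simulation` is a hypothetical placeholder that checks a precomputed metric so that the example runs. A real cluster would dispatch each job to a separate simulation PC:

```python
from concurrent.futures import ThreadPoolExecutor


def run_simulation(test_case):
    """Hypothetical placeholder for one simulation job on one cluster node.

    A real job would load the scenario, run the simulation and evaluate it;
    here we only compare a precomputed minimum gap against a 2 m limit.
    """
    return test_case["id"], test_case["min_gap_m"] >= 2.0


def run_on_cluster(test_cases, nodes=4):
    """Central scheduler: hand test cases to a pool of workers, collect verdicts."""
    with ThreadPoolExecutor(max_workers=nodes) as pool:
        # pool.map keeps results in submission order
        return dict(pool.map(run_simulation, test_cases))


test_cases = [{"id": f"tc{i:03d}", "min_gap_m": 0.5 * i} for i in range(10)]
verdicts = run_on_cluster(test_cases, nodes=4)
failed = [tc_id for tc_id, passed in verdicts.items() if not passed]
print(failed)  # ['tc000', 'tc001', 'tc002', 'tc003']
```

Changing `nodes` is the sketch's equivalent of scaling the cluster up or down. The test cases and the scheduling logic stay the same.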

Whether in a SIL or a HIL environment, testing autonomous driving functions centers on evaluating the sensor fusion algorithms. For this purpose, sensor and vehicle network data are recorded and played back time-synchronously.

To support high-performance sensor data processing, the test environment should be able to capture, synchronize, process and merge data from various sensors, such as cameras, radars, Lidars and GNSS receivers. It should also handle multiple high-bandwidth data streams, compute application algorithms, interface with communication networks (e.g., CAN/CAN FD, LIN, Ethernet), and connect to actuators or human-machine interfaces (HMIs). Software such as RTMaps provides time stamping, tagging, recording, synchronization and playback, which enables sensor data processing and ensures that all data is time-correlated.
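Tools such as RTMaps handle this in practice. The following is only a conceptual Python sketch, not the RTMaps API, and it assumes that every sample already carries a timestamp from a common clock. Under that assumption, time-synchronous playback amounts to merging the individually sorted streams by timestamp. Correlating data then means, for example, finding the radar scan closest in time to a camera frame. The stream contents are hypothetical:

```python
import heapq
from bisect import bisect_left

# Hypothetical recorded streams: (timestamp_s, source, payload), each sorted by time
camera = [(0.000, "camera", "frame0"), (0.033, "camera", "frame1"), (0.066, "camera", "frame2")]
radar = [(0.010, "radar", "scan0"), (0.060, "radar", "scan1")]
gnss = [(0.050, "gnss", "fix0")]


def playback_order(*streams):
    """Merge time-sorted streams into one time-ordered playback sequence."""
    return list(heapq.merge(*streams, key=lambda sample: sample[0]))


def nearest_sample(stream, t):
    """Return the sample in a non-empty, time-sorted stream closest to time t."""
    times = [sample[0] for sample in stream]
    i = bisect_left(times, t)
    candidates = stream[max(0, i - 1): i + 1]
    return min(candidates, key=lambda sample: abs(sample[0] - t))


print([src for _, src, _ in playback_order(camera, radar, gnss)])
# ['camera', 'radar', 'camera', 'gnss', 'radar', 'camera']
print(nearest_sample(radar, 0.033)[2])  # scan0
```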

The large number of tests needed to validate autonomous driving functions makes data management and traceability essential. Test data should be managed centrally in a system that ensures consistent data versions and full traceability. Data management tools are available to support test planning, execution, evaluation and reporting. dSPACE SYNECT, for example, is a data management and collaboration tool with a special focus on model-based development and ECU testing.
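A basic building block of traceability is tying every test result to the exact versions of its inputs. The sketch below uses a hypothetical record format, not the SYNECT data model. It stores SHA-256 fingerprints of the scenario and model parameters alongside the ECU software version, so that any result can later be matched to the inputs that produced it:

```python
import hashlib
import json


def fingerprint(artifact):
    """Stable SHA-256 hex digest of a JSON-serializable artifact.

    sort_keys makes the digest independent of dictionary key order.
    """
    blob = json.dumps(artifact, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


def make_test_record(test_id, scenario, model_params, ecu_sw_version, passed):
    """Hypothetical traceable result record for central storage."""
    return {
        "test_id": test_id,
        "scenario_sha256": fingerprint(scenario),
        "model_params_sha256": fingerprint(model_params),
        "ecu_sw_version": ecu_sw_version,
        "passed": passed,
    }


scenario = {"name": "cut_in_rain", "ego_speed_kmh": 70, "weather": "rain"}
params = {"vehicle_mass_kg": 1650, "tire_model": "v2"}
record = make_test_record("tc042", scenario, params, "1.4.2", passed=True)
print(record["scenario_sha256"][:12])
```

Any change to a scenario or parameter set yields a different fingerprint. Two results can therefore only be compared directly when their input fingerprints match.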

Autonomous vehicles are made up of a set of connected ECUs running embedded software. The software architecture should be designed to support software updates over the air. Today, most ECU software architectures are parameterizable but still static, typically designed using the AUTOSAR Classic Platform. The software architecture instead needs to support software updates in the field without changing the overall architecture.

The more recent AUTOSAR Adaptive Platform implements this idea of a flexible embedded software architecture. Likewise, the ECU architecture will need to include powerful ECUs responsible for the car's intelligence, while less powerful ECUs handle more basic functions.
