Real-time kinematic positioning brings high accuracy to the autonomous vehicle industry without breaking the bank.

James Fennelly • ACEINNA Inc.

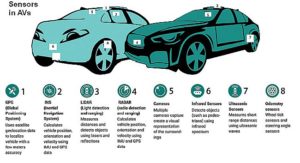

It’s no secret that a variety of technologies must work together smoothly and reliably to make autonomous vehicles possible. The technology mix includes inertial measurement units (IMUs), GPS/GNSS, radar, lidar, IR and vision sensors, as well as real-time kinematics (RTK) for reliable absolute position accuracy within inches. In particular, the challenge of precision navigation is considered so important that DARPA has active development efforts to improve navigation and determine exact location with limited or no GPS/GNSS coverage.

Precision navigation is the ability of autonomous vehicles to continuously know their absolute and relative position in 3D space with high accuracy, repeatability, and confidence. For safe and efficient operation, precise position data must be quickly available, unrestricted by geography, and economical.

Numerous industries require reliable methods of precise navigation, localization, and micro-positioning. For example, agriculture increasingly uses autonomous or semi-autonomous equipment in cultivating and harvesting the world’s food supply. The recent global pandemic exposed the importance of warehousing, freight and inventory management. The robotics in these industries are only as effective as their ability to accurately position and navigate themselves. Autonomous capability in long-haul and last mile delivery also requires precise navigation.

High-precision solutions are currently used in applications such as satellite navigation, commercial aircraft and submarines. However, this equipment comes at a premium (hundreds of thousands of dollars) and is relatively large (about the size of a loaf of bread).

Recent developments in IMU technology, though, have progressively reduced both size and cost. Hardware has evolved from bulky gimbal gyroscopes to fiber-optic gyroscopes (FOGs) and tiny microelectromechanical systems (MEMS) sensors.

The success of precision navigation depends on developers and manufacturers bringing down cost and size without sacrificing IMU performance. The resulting technologies enable advances in the position reckoning of drones, agricultural robotics, self-driving cars, and smartphones.

Today’s limitations of GPS/GNSS

Atmospheric GPS interference and outdated GPS satellite orbital path data can be corrected to get a best-possible calculated position accuracy of around one meter. Multi-path errors in urban areas and poor GPS coverage in others increase the receiver’s margin of error. This margin of error reduces road-level accuracy, i.e., a vehicle’s knowledge about which road it’s on. These difficulties highlight the reason for developing higher-precision navigation, which will tell vehicles which lane they’re in rather than which road they’re on. Safe operation in a complex, dynamic environment with minimal human intervention requires lane-level or centimeter-level position accuracy.

Autonomous and semi-autonomous vehicles use a suite of perception sensors and navigation equipment to maneuver. The limitations of GPS and inertial navigation systems force vehicle systems to boost positioning accuracy via additional means.

For example, image and depth data from lidar and cameras, combined with high-definition maps having centimeter-level accuracy, can be used to calculate vehicle position in real time and with reference to known landmarks and static objects. HD maps contain massive amounts of data including road- and lane-level details and semantics, dynamic behavioral data, traffic data, and much more. Autonomous vehicles make maneuvering decisions by evaluating camera images and/or 3D point clouds cross-referenced to HD map data.

Though this method of maneuvering is effective, it has its challenges. HD maps are data intensive, expensive to generate at scale, and must be constantly updated. Moreover, perception sensors are prone to environmental interference, which compromises the quality of their data.

The rising number of automated test vehicles on the road generates larger data sets of real-world driving scenarios, and the predictive modeling for localization is becoming more robust. However, all this modeling demands expensive sensors, significant computational power, sophisticated algorithms, high operational maintenance, and terabytes of data collection and processing.

RTK improves localization

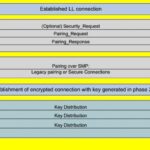

Incorporating real-time kinematics (RTK) into the navigation scheme can boost the accuracy of localization techniques, that is, of determining the vehicle’s precise position on the map. RTK is both a technique and a service that corrects errors and ambiguities in GPS/GNSS data to enable centimeter-level accuracy.

RTK works with a network of fixed base stations that transmit correction data wirelessly to moving vehicles. Each vehicle then integrates this data in its positioning engine to calculate a position in real time. Through RTK, navigation systems can realize accuracy as fine as 1 cm + 1 ppm of the baseline length to the base station. Additionally, this accuracy is available without any additional sensor fusion.
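To make the "1 cm + 1 ppm" figure concrete, here is a minimal Python sketch of how the distance-dependent term grows with the baseline. The function name `rtk_nominal_error_m` and the sample baselines are illustrative assumptions, not part of any RTK product API.

```python
def rtk_nominal_error_m(baseline_m, fixed_m=0.01, ppm=1.0):
    """Nominal RTK error: a fixed term plus a parts-per-million share of the baseline.

    The defaults mirror the 1 cm + 1 ppm figure quoted in the text.
    """
    return fixed_m + ppm * 1e-6 * baseline_m


# Hypothetical rover-to-base distances, chosen only for illustration.
for baseline_km in (1, 10, 30):
    error_cm = rtk_nominal_error_m(baseline_km * 1000.0) * 100.0
    print(f"{baseline_km:>2} km baseline: about {error_cm:.1f} cm")
```

Under these assumptions, each additional 10 km between rover and base station adds roughly 1 cm to the nominal error, which is one reason dense base-station networks matter.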

More specifically, RTK measures the phase of the GPS carrier wave. It uses a single reference station or interpolated virtual station and a rover to provide real-time corrections and realize centimeter-level accuracies. The base station transmits correction data to the rover.

The range to a satellite is essentially calculated by multiplying the carrier wavelength by the number of whole cycles between the satellite and the rover and adding the phase difference. Errors arise because GPS signals may shift in phase by one or more cycles during their journey from the satellite to the receiver. This effect results in an error equal to the error in the estimated number of cycles multiplied by the wavelength, which is 19 cm for the L1 (the first) GPS signal. The error can be reduced with sophisticated statistical methods that compare the carrier-based ranges against measurements from the coarse acquisition (C/A) code that modulates the GPS carrier, and that compare the resulting ranges between multiple satellites.
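The range calculation described above can be sketched in a few lines of Python. This is a simplified illustration, not a receiver implementation: it ignores clock, atmospheric and multipath terms, and the cycle count used in the example is a made-up value of roughly the right magnitude.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0
L1_FREQUENCY_HZ = 1_575.42e6
L1_WAVELENGTH_M = SPEED_OF_LIGHT_MPS / L1_FREQUENCY_HZ  # about 0.19 m


def carrier_range_m(whole_cycles, phase_fraction):
    """Range = wavelength x (whole cycles + fractional phase)."""
    return (whole_cycles + phase_fraction) * L1_WAVELENGTH_M


def ambiguity_error_m(cycle_error):
    """Range error caused by miscounting the whole cycles."""
    return cycle_error * L1_WAVELENGTH_M


# Hypothetical measurement: ~1e8 cycles puts the satellite roughly 20,000 km away.
estimated_range = carrier_range_m(105_000_000, 0.25)
print(f"L1 wavelength: {L1_WAVELENGTH_M * 100:.2f} cm")
print(f"Range estimate: {estimated_range / 1000:.1f} km")
print(f"Error from a one-cycle slip: {ambiguity_error_m(1) * 100:.1f} cm")
```

The sketch shows why resolving the integer cycle count matters: every miscounted cycle shifts the range by a full wavelength, while the fractional phase itself can be measured far more finely.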

A great deal of position improvement is available through this technique. For instance, the C/A code in the L1 signal changes phase at 1.023 MHz, but the L1 carrier itself is 1,575.42 MHz, thus changing phase over a thousand times more often. A ±1% error in L1 carrier-phase measurement thus corresponds to a ±1.9-mm error in estimation.
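The arithmetic behind these figures can be checked with a short Python sketch. The code-phase comparison (1% of one C/A chip) is added here for contrast and is derived only from the chipping rate stated above; variable names are illustrative.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0
CA_CHIP_RATE_HZ = 1.023e6      # C/A code chipping rate
L1_CARRIER_HZ = 1_575.42e6     # L1 carrier frequency

# How many carrier cycles fit into one C/A code chip.
cycles_per_chip = L1_CARRIER_HZ / CA_CHIP_RATE_HZ

# Physical length of one code chip and one carrier cycle.
chip_length_m = SPEED_OF_LIGHT_MPS / CA_CHIP_RATE_HZ
carrier_wavelength_m = SPEED_OF_LIGHT_MPS / L1_CARRIER_HZ

# A 1% phase-measurement error expressed as distance.
code_error_m = 0.01 * chip_length_m
carrier_error_mm = 0.01 * carrier_wavelength_m * 1000.0

print(f"Carrier cycles per C/A chip: {cycles_per_chip:.0f}")
print(f"1% of a C/A chip: about {code_error_m:.1f} m")
print(f"1% of an L1 cycle: about {carrier_error_mm:.1f} mm")
```

The same relative measurement error therefore maps to meters on the code but to millimeters on the carrier, which is the source of RTK's precision.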

In practice, RTK systems use a single base-station receiver and several mobile units. The base station re-broadcasts the phase of the GPS carrier it observes, and the mobile units compare their own phase measurements with those from the base station. This allows the units to calculate their position relative to the base station to within millimeters, although their absolute position is only as accurate as the computed position of the base station. The typical nominal accuracy for these systems is 1 cm ±2 ppm horizontally and 2 cm ±2 ppm vertically.
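The distinction between relative and absolute accuracy can be illustrated with a small Python sketch in a local east-north-up frame. All coordinates and the base-station survey error below are invented for illustration, and the helper name `rover_absolute_enu` is hypothetical.

```python
def rover_absolute_enu(base_enu, baseline_enu):
    """Absolute rover position = base-station position + RTK baseline vector."""
    return tuple(b + d for b, d in zip(base_enu, baseline_enu))


# Assumed values (meters): the base station's true position, its slightly
# wrong surveyed position, and a baseline vector measured by RTK.
true_base = (0.0, 0.0, 0.0)
surveyed_base = (0.30, -0.20, 0.10)
baseline = (1200.000, -350.000, 4.500)

true_rover = rover_absolute_enu(true_base, baseline)
reported_rover = rover_absolute_enu(surveyed_base, baseline)

# The rover's absolute error equals the base station's survey error,
# no matter how precisely the baseline itself was measured.
absolute_error = tuple(r - t for r, t in zip(reported_rover, true_rover))
print("Absolute rover error (E, N, U) in m:",
      tuple(round(e, 3) for e in absolute_error))
```

In this sketch the baseline is treated as error-free to isolate the effect: any offset in the base station's coordinates carries straight through to every rover that uses it.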

The process of integrating RTK into a sensor fusion navigation architecture is relatively straightforward. RTK does require connectivity and GNSS coverage to enable the most precise navigation. But in the event of an outage, the vehicle can employ dead reckoning and a high-performance IMU for continued safe operation.
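The fallback described above might look something like the following Python sketch. It is a deliberately simplified 2D model under stated assumptions: speed and yaw rate are taken as given (for example from wheel odometry and an IMU gyro), sensor noise and bias are ignored, and the names `Pose2D`, `dead_reckon` and `update_pose` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x_m: float
    y_m: float
    heading_rad: float


def dead_reckon(pose, speed_mps, yaw_rate_rps, dt_s):
    """Propagate the pose from speed and yaw rate over one time step."""
    heading = pose.heading_rad + yaw_rate_rps * dt_s
    return Pose2D(
        pose.x_m + speed_mps * math.cos(heading) * dt_s,
        pose.y_m + speed_mps * math.sin(heading) * dt_s,
        heading,
    )


def update_pose(pose, rtk_fix, speed_mps, yaw_rate_rps, dt_s):
    """Use the RTK fix when one is available; otherwise dead reckon."""
    if rtk_fix is None:
        return dead_reckon(pose, speed_mps, yaw_rate_rps, dt_s)
    x_m, y_m = rtk_fix
    return Pose2D(x_m, y_m, pose.heading_rad + yaw_rate_rps * dt_s)


# Simulated one-second outage (ten 0.1 s steps at 10 m/s), then a fix returns.
pose = Pose2D(0.0, 0.0, 0.0)
for _ in range(10):
    pose = update_pose(pose, None, speed_mps=10.0, yaw_rate_rps=0.0, dt_s=0.1)
print(f"After outage: x={pose.x_m:.2f} m, y={pose.y_m:.2f} m")
pose = update_pose(pose, (10.5, 0.2), speed_mps=10.0, yaw_rate_rps=0.0, dt_s=0.1)
print(f"After RTK fix: x={pose.x_m:.2f} m, y={pose.y_m:.2f} m")
```

A production system would typically blend the two sources in a filter rather than switch between them, since dead-reckoning error grows with the length of the outage.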

RTK bolsters visual localization methodologies by providing precise absolute position, as well as confidence intervals for each position datum. The localization engine uses this information to reduce ambiguities and validate temporal and contextual estimates.
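The article does not specify how a localization engine uses these confidence values. One common approach, sketched below in Python for a single axis, is to gate a visual estimate against the RTK position and then combine the two by inverse-variance weighting. The function names, the 3-sigma gate and all numbers are assumptions for illustration only.

```python
def is_consistent(est_a, sigma_a, est_b, sigma_b, k=3.0):
    """Check that two estimates agree within k combined standard deviations."""
    combined_sigma = (sigma_a ** 2 + sigma_b ** 2) ** 0.5
    return abs(est_a - est_b) <= k * combined_sigma


def fuse(est_a, sigma_a, est_b, sigma_b):
    """Inverse-variance weighted average of two independent estimates."""
    weight_a = 1.0 / sigma_a ** 2
    weight_b = 1.0 / sigma_b ** 2
    fused = (weight_a * est_a + weight_b * est_b) / (weight_a + weight_b)
    fused_sigma = (1.0 / (weight_a + weight_b)) ** 0.5
    return fused, fused_sigma


# Hypothetical lateral positions in meters: RTK (tight) vs. visual (looser).
rtk_pos, rtk_sigma = 1.00, 0.02
visual_pos, visual_sigma = 1.05, 0.05

if is_consistent(rtk_pos, rtk_sigma, visual_pos, visual_sigma):
    position, sigma = fuse(rtk_pos, rtk_sigma, visual_pos, visual_sigma)
    print(f"Fused lateral position: {position:.3f} m (sigma {sigma:.3f} m)")
else:
    print("Visual estimate rejected as inconsistent with RTK")
```

Because the RTK estimate carries the smaller uncertainty in this example, the fused result stays close to it, while a visual estimate that disagrees by more than the gate would be flagged rather than averaged in.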

Inertial sensors are essential for measuring motion, and GNSS provides valuable contextual awareness about location in 3D space. Adding RTK increases accuracy, reliability and integrity. Vision sensors can enable depth perception and allow the vehicle to predict what is ahead. Data from these various sensors and technologies combine for navigational planning and decision making, to provide outcomes that are safe, precise, and predictable.
