Different architectures have different “natural” data sizes. Most MCUs have a CPU width that matches their data bus width, which makes sense. For some MCUs, however, the CPU’s “natural” word length differs from the word length of the available external memory bus, which is not ideal. Or you may need to write code that can be used on several different target MCUs, in which case you have little control over how well your data sizes match each target’s natural word length. There’s an answer for this.

If you use an 8- or 16-bit data size on a 32-bit architecture, you force the MCU to perform extra mask, shift, and sign-extend operations in order to use those smaller data types. If you use 64-bit data on the same 32-bit MCU, each value has to be split across multiple 32-bit registers and memory words, taking up more instruction cycles every single time you move or operate on that “unnatural” sized data.
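To make the cost concrete, here is a minimal sketch of the same loop written with a narrow accumulator and with a natural-width one. The function names (sum_narrow, sum_natural) are hypothetical, and whether the compiler actually emits extra truncation instructions depends on the target and optimization level, so treat it as an illustration rather than a benchmark.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical example: 8-bit accumulator on a wider core.
   The running total must behave as if it wrapped at 8 bits, so the
   compiler may need an extra mask or zero-extend step on each pass. */
uint8_t sum_narrow(const uint8_t *data, size_t len)
{
    assert(data != NULL);
    uint8_t total = 0;
    for (size_t i = 0; i < len; i++) {
        total = (uint8_t)(total + data[i]); /* truncated to 8 bits */
    }
    return total;
}

/* Hypothetical example: accumulator in the platform's natural
   unsigned type, so no per-iteration truncation is required. */
unsigned int sum_natural(const uint8_t *data, size_t len)
{
    assert(data != NULL);
    unsigned int total = 0;
    for (size_t i = 0; i < len; i++) {
        total += data[i];
    }
    return total;
}
```

Note that the two functions are not interchangeable: the narrow version wraps modulo 256, while the natural-width version keeps the full sum. Choosing a natural size is only safe when the code does not rely on that wrap-around.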

This may seem like common sense, but the real cost is often not realized until a project has already launched with mismatched sizes, for example old data carried over to an upgraded MCU. Convert the data if possible: you should use a natural size unless there’s a compelling reason not to.

Reasons you might not use a natural size include time to market being more important than optimal code, or I/O where a precise number of bits is required. Or, if you know the code will execute only once, it’s not going to have as negative an effect on your application as data that is constantly moved around. Constant function calls on data with unnatural sizes will cause performance to degrade, however. Furthermore, using unnatural data sizes throughout your code will cause your application to grow.

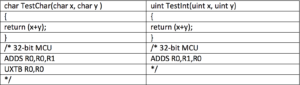

But what if you can’t avoid it, such as when you need to write code that will be used on several different target MCUs? You’re in a bit of a bind. Or are you?

Table 1: Code on the left requires 2 cycles, vs. code on the right.

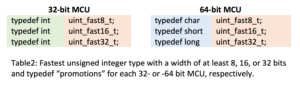

You don’t have to agonize over how to structure it, however. The C standard (since C99, and carried forward in C11), with which not all tools are strictly compliant, provides “fast” and “least” standard integer types that allow the compiler to do the agonizing for you if you can’t use a natural data size. These live in the standard integer header, stdint.h. When you use a fast type (uint_fast8_t), you tell the compiler that you need at least 8 bits in order to hold the data, and the compiler selects the size that lets the program access and do arithmetic operations on that integer as quickly as possible.

The other family in the standard integer header is the least integer types: “uint_least8_t” is the smallest type the target offers that holds at least 8 bits, so it consumes the least amount of memory. To have fast, efficient, and portable embedded C code, using “fast” and “least” integer types is one of the easier ways to deal with unnatural data sizes or mismatched hardware that’s beyond your control. And best of all, “fast” and “least” unsigned integers (and signed integers) are portable across all standard-conforming C compilers, because they are defined by the standard integer header in C.
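A common way to combine the two families, sketched below under assumed names (samples, SAMPLE_COUNT, average_sample), is to store bulk data in a “least” type to save memory and do the arithmetic in a “fast” type. The sketch assumes the sum of all samples fits in 16 bits.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SAMPLE_COUNT 4

/* Hypothetical data table: stored in the smallest type with >= 8 bits. */
static const uint_least8_t samples[SAMPLE_COUNT] = {10, 20, 30, 40};

/* Hypothetical example: average the table using a "fast" accumulator.
   Assumes the total of all samples fits in 16 bits. */
uint_fast16_t average_sample(const uint_least8_t *s, size_t count)
{
    assert(s != NULL);
    uint_fast16_t sum = 0;           /* at least 16 bits, chosen for speed */
    for (size_t i = 0; i < count; i++) {
        sum += s[i];
    }
    return count ? (uint_fast16_t)(sum / count) : 0;
}
```

Calling average_sample(samples, SAMPLE_COUNT) on the table above yields 25.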
