Communication protocols and protocol stacks like peripheral component interconnect express (PCIe), compute express link (CXL), Aeronautical Radio, Inc. 818 (ARINC 818), Joint Electron Device Engineering Council (JEDEC) standard 204/B/C/D (JESD204B/C/D), Fibre Channel and so on, are formal descriptions of digital message formats and rules. They are separate from the physical transport layer, although some protocols also specify the default or preferred transport technology.

In addition to providing expanded bandwidths, the use of optical connectivity can extend the reach of embedded protocols outside the box up to several hundred meters. This FAQ reviews some of the factors that impact the decision to use a wired or optical transport layer for connectivity and then presents several common embedded communications protocols including PCIe, CXL, ARINC 818, JESD204B/C/D and Fibre Channel, and how and when they are used with optical interconnects.

When to fiber

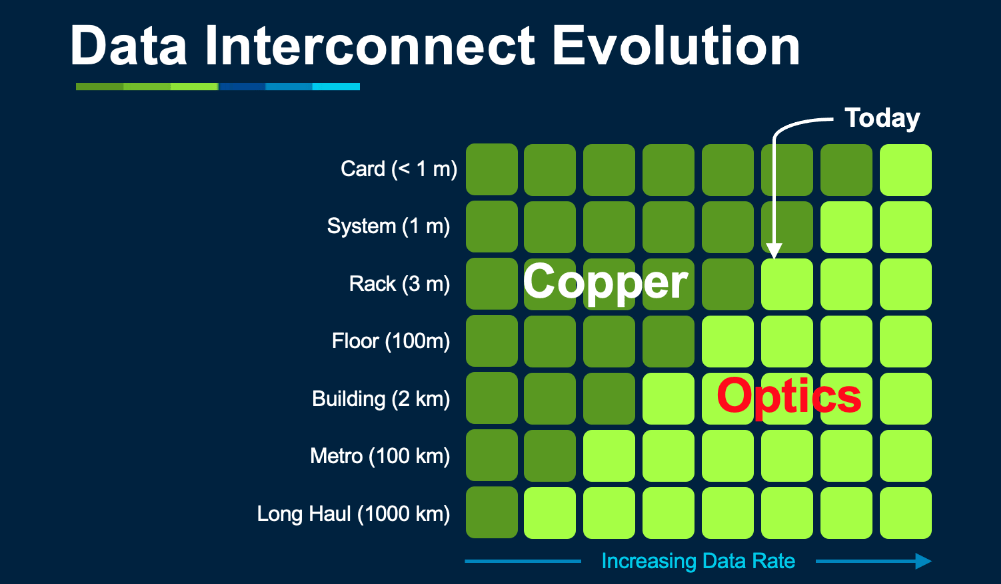

Copper is simple and low-cost to implement, especially over short distances, even at signaling frequencies of several GHz. However, as the distance grows from cards to systems, racks, buildings such as data centers, and beyond, fiber optics can provide a more cost-effective solution. As fiber optic technology continues to mature, it is also finding use over shorter distances wherever high-speed transmission is needed (Figure 1). The benefits of optical interconnects can include higher throughput, lower latency, and better power efficiency.

Numerous advantages have been claimed for fiber interconnects, and the degree of the benefits can vary for different protocols. Three key factors include:

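Faster with more bandwidth – optical carriers support far more modulation bandwidth than electrical signals on copper, enabling optical interconnects to move more data per second. The gain comes from bandwidth rather than propagation speed, since signals travel through fiber and copper at broadly similar fractions of the speed of light. Fiber optic interconnects are currently cited as roughly 10x faster than copper solutions, and fiber continues to get faster. There are technology development roadmaps for fiber that support 800G and 1.6T Ethernet. Fiber optic cables can also be made smaller than copper interconnects, resulting in higher-density solutions.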

Longer distances – conventional copper interconnects suffer from significant attenuation and signal degradation when used over long distances. Optical fibers are immune to electromagnetic interference (EMI) and have far lower attenuation than copper, so the signal degrades much more slowly with distance. Optical interconnects can transmit data over several miles without signal amplifiers or repeaters, which copper cannot do.

Energy efficiency – transmitting photons takes much less energy than transmitting electrons. The lack of amplifiers and repeaters also contributes to lower energy consumption in fiber networks. In data centers, optical networks can require up to 75% less energy compared to copper-based solutions, and the optical connections can deliver higher performance.

PCIe

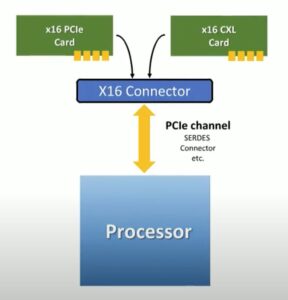

PCIe was originally developed for use over copper interconnects. More recently, PCIe over fiber has been developed to support longer-distance connectivity outside the server box. PCIe over fiber can be implemented using standard expansion cards in the host computer (Figure 2).

The use of fiber enables PCIe bus extensions up to 500 m from the main system using standard hot-pluggable multi-mode or single-mode quad small form factor pluggable (QSFP) and small form factor pluggable (SFP) transceivers. Typical applications for fiber-based PCIe include:

- Isolated data acquisition systems

- High-resolution video I/O

- High-speed analog-to-digital and digital-to-analog cards

- Remote I/O and control
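
When a PCIe bus is extended over fiber, the propagation delay of the cable is added to every transaction. The following is a minimal sketch of that arithmetic, not a vendor calculation. The group index of 1.47 is an assumed typical value for silica fiber and does not come from this article, and the function name is hypothetical. Transceiver and serializer latency are not modeled.

```python
# Speed of light in vacuum in m/s (exact by SI definition).
C_VACUUM_M_PER_S = 299_792_458

# ASSUMPTION: typical group index of silica fiber, not a figure from the article.
FIBER_GROUP_INDEX = 1.47


def one_way_delay_ns(length_m: float, group_index: float = FIBER_GROUP_INDEX) -> float:
    """Return the one-way propagation delay through a fiber in nanoseconds."""
    if length_m < 0:
        raise ValueError("length_m must be non-negative")
    return length_m * group_index / C_VACUUM_M_PER_S * 1e9


if __name__ == "__main__":
    # 500 m is the maximum PCIe-over-fiber extension mentioned in the text.
    for length_m in (1, 100, 500):
        delay = one_way_delay_ns(length_m)
        print(f"{length_m:>4} m: {delay:7.1f} ns one way, {2 * delay:7.1f} ns round trip")
```

Under these assumptions a 500 m link adds a little under 2.5 microseconds each way, which may matter for latency-sensitive remote I/O and control loops.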

CXL

CXL rides on the physical and electrical interfaces of PCIe and can be implemented over fiber optic links. It leverages the PCIe 5.0 feature that enables alternative protocols to use the PCIe physical layer. CXL uses a flexible processor port and auto-negotiates between the standard PCIe protocol and the CXL protocol (Figure 3). CXL is aligned with the 32 GT/s per-lane rate of the PCIe 5.0 standard and is expected to be a key part of the extensions in PCIe 6.0.

CXL defines a scalable, high-bandwidth, low-latency, cache-coherent protocol to interconnect various processing and memory elements within a server and to enable disaggregated, composable architectures within a rack. It supports efficient resource sharing for high-performance computing applications like:

- Artificial intelligence

- Machine learning

- Cloud computing

- 5G communications

JESD204

The JESD204, JESD204A, JESD204B, and JESD204C data converter serial interface standards were developed to address the large number of high-speed data lines between DSPs or FPGAs and high-speed data converters. Alternative low voltage differential signaling (LVDS) protocols are limited to about 1 Gbps and can require large numbers of circuit board traces to support the required bandwidth. The smaller number of traces required by JESD204 simplifies PCB design, reduces costs, and improves reliability in bandwidth-hungry applications. JESD204 supports more than 3X the lane data rate compared with LVDS.

For example, JESD204B can operate at up to 12.5 Gbps per lane using multiple current mode logic (CML) lanes. Because the physical interface is similar to gigabit Ethernet, JESD204 can be readily implemented using optical transceivers. Using copper interconnects limits JESD204 to short runs on PCBs or in server boxes. Using optical transceivers can extend its reach to over 100 m. JESD204 is well suited to support high-speed data conversion in applications like wireless infrastructure transceivers for 5G telephony, software-defined radios, medical imaging systems, and a range of military and aerospace applications like radar and secure communications.
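
The trace-count advantage over LVDS can be estimated with a short calculation. This is a rough sketch under stated assumptions: the 16-bit, 1 GSPS converter is a hypothetical example, LVDS is treated as carrying raw data at about 1 Gbps per pair, and JESD204B is modeled with only its 8b/10b line-coding overhead (control and tail bits are ignored). The function and constant names are illustrative.

```python
import math
from fractions import Fraction

LVDS_LANE_MBPS = 1_000        # approximate LVDS limit quoted in the text
JESD204B_LANE_MBPS = 12_500   # JESD204B maximum lane rate quoted in the text
EFF_8B10B = Fraction(8, 10)   # 8b/10b coding: 8 payload bits per 10 line bits


def lanes_needed(payload_mbps: int, lane_mbps: int,
                 efficiency: Fraction = Fraction(1)) -> int:
    """Return the number of lanes needed to carry payload_mbps of sample data."""
    usable_mbps = lane_mbps * efficiency  # exact rational arithmetic
    return math.ceil(Fraction(payload_mbps) / usable_mbps)


if __name__ == "__main__":
    # HYPOTHETICAL converter: 16-bit samples at 1000 MSPS = 16,000 Mbps.
    payload_mbps = 16 * 1_000
    print("LVDS pairs:    ", lanes_needed(payload_mbps, LVDS_LANE_MBPS))
    print("JESD204B lanes:", lanes_needed(payload_mbps, JESD204B_LANE_MBPS, EFF_8B10B))
```

For this hypothetical converter the sketch gives 16 LVDS pairs against 2 JESD204B lanes, which is the kind of reduction that simplifies PCB routing and makes a small number of optical transceiver channels practical.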

ARINC 818

ARINC 818 (ADVB) is a Fibre Channel (FC) protocol based on FC audio video, defined in ANSI INCITS 356-2002. It was developed to provide high bandwidth, low latency transmission of uncompressed video feeds in avionics systems. The specification does not require the use of a specific physical layer and is implemented with both copper and fiber. Common implementations include 850 nm multimode fiber for transmissions under 500 m and 1310 nm single-mode fiber for connections up to 10 km. ARINC 818 is used in applications like slip rings and turrets in aerospace systems. When implemented using fiber, it’s low weight, EMI resistant, and can be used for long-distance transmissions, making it particularly useful on large platforms like ships.

ADVB is a point-to-point, video-centric, packetized serial protocol that uses 8b/10b encoding (64B/66B at higher speeds). It’s unidirectional, does not need handshaking, and has 15 defined speeds from 1 to 28 Gbps. It supports a variety of video functions like multiplexing of multiple video streams on a single link or the transmission of a single stream over a dual link. Four different synchronization classes of video are defined, from simple asynchronous to strict synchronous systems.
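
The choice of line code affects how much of the link rate is available for video. The sketch below compares the two encodings named above. The line rates in the example are illustrative only and are not claimed to be specific ARINC 818 rates, and the function name is hypothetical.

```python
from fractions import Fraction

# Payload bits carried per line bit for each encoding.
LINE_CODE_EFFICIENCY = {
    "8b/10b": Fraction(8, 10),    # 80% efficient
    "64b/66b": Fraction(64, 66),  # about 97% efficient
}


def payload_rate_gbps(line_rate_gbps: float, code: str) -> float:
    """Return the rate left for data after line-coding overhead, in Gbps."""
    return float(line_rate_gbps * LINE_CODE_EFFICIENCY[code])


if __name__ == "__main__":
    # ILLUSTRATIVE line rates, chosen only to show the overhead difference.
    for rate_gbps, code in ((4.25, "8b/10b"), (28.0, "64b/66b")):
        usable = payload_rate_gbps(rate_gbps, code)
        print(f"{rate_gbps:5.2f} Gbps line rate with {code}: {usable:.2f} Gbps usable")
```

The lower overhead of 64B/66B is one reason it is commonly preferred at higher speeds, where a 20% coding penalty would be costly.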

Although ADVB is based on FC and was developed for video, a single extended graphics array (XGA) video line occupies 3072 bytes, which exceeds the maximum FC payload length. Each XGA line is therefore split into two ADVB frames. Transmitting a basic XGA image requires 1536 FC frames plus an additional ADVB header frame. Idle characters are also needed between FC frames to support synchronization between the transmitter and receiver.
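
The frame counts above follow from simple arithmetic, sketched here. The sketch assumes 24-bit color (3 bytes per pixel) at the 1024 x 768 XGA resolution and the 2112-byte maximum FC payload; the payload limit and the function name are assumptions not spelled out in this article.

```python
import math

# ASSUMPTION: maximum Fibre Channel frame payload in bytes (not stated in the text).
FC_MAX_PAYLOAD_BYTES = 2112


def advb_frames_per_image(width_px: int, height_px: int,
                          bytes_per_pixel: int = 3,
                          max_payload: int = FC_MAX_PAYLOAD_BYTES):
    """Return (bytes per line, frames per line, total frames including header)."""
    line_bytes = width_px * bytes_per_pixel
    frames_per_line = math.ceil(line_bytes / max_payload)
    video_frames = frames_per_line * height_px
    return line_bytes, frames_per_line, video_frames + 1  # +1 for the header frame


if __name__ == "__main__":
    line_bytes, per_line, total = advb_frames_per_image(1024, 768)
    print(f"{line_bytes} bytes per line, {per_line} frames per line, {total} frames per image")
```

For XGA this reproduces the figures in the text: 3072 bytes per line, two frames per line, and 1536 video frames plus one header frame.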

While ADVB was originally developed for video, the platforms on which it’s deployed carry increasing numbers and types of sensors, and the protocol has been adopted for sensor fusion applications that multiplex large numbers of sensor outputs on a single high-speed link. ARINC 818-2 added features specifically to enhance the utility of the protocol for sensor fusion applications.

Fibre Channel

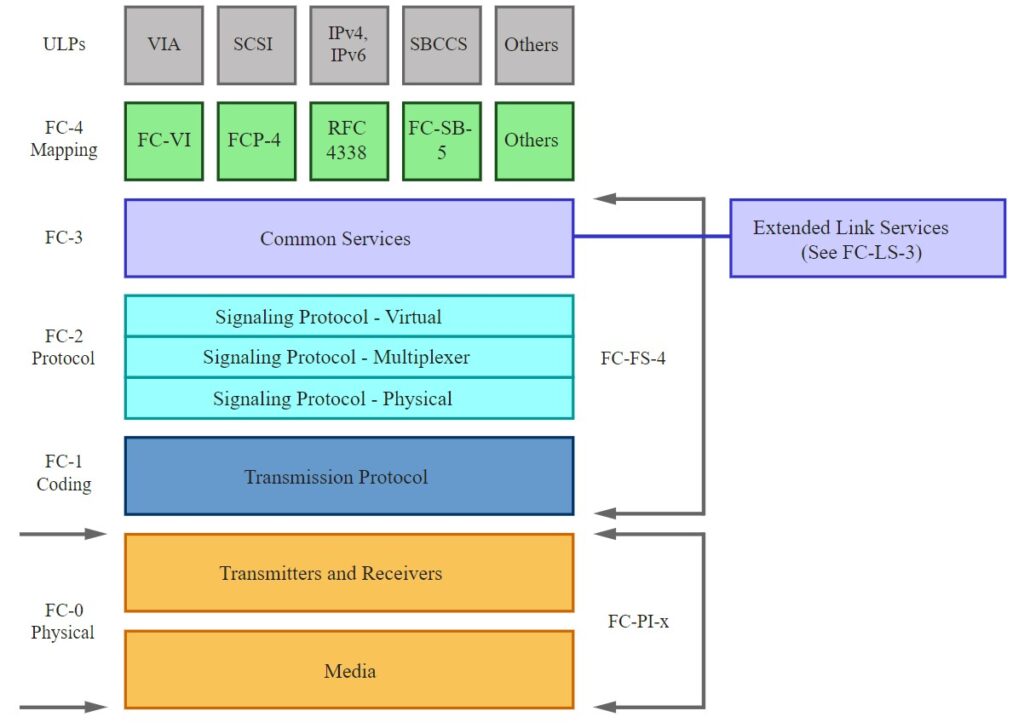

Fibre Channel (FC) was initially designed to run over fiber optic cables but has been subsequently adapted to run over copper. In contrast with the other protocols reviewed here, FC was not originally intended for embedded applications but has evolved to support embedded systems. It was developed to support data storage systems and computers in storage area networks (SANs) in data centers and was the first gigabit-class serial interconnect standard. It usually provides links up to 128 Gbps within a data center but is also used to connect multiple data centers. FC does not follow the seven-layer OSI model and is split into five layers (Figure 4):

- FC-0 – physical layer

- FC-1 – coding of transmission protocol

- FC-2 – signaling protocol, which includes the low-level FC network protocols

- FC-3 – common services layer that may be used for functions like encryption or RAID redundancy

- FC-4 – mapping layer for upper-level protocols like NVM Express (NVMe), SCSI, IP, and so on

Summary

The line between embedded communications protocols and longer-distance solutions is blurring. PCIe was originally developed for connections within PCs and servers, but the addition of fiber optic connectivity has extended its reach to hundreds of meters. ADVB was developed to provide high-speed FC-based video connectivity, but the specification does not require the use of a specific physical layer, is implemented with both copper and fiber depending on the application requirements, and has been expanded to support sensor fusion.
