Super Micro Computer, Inc. (Supermicro) announced the doubling of GPU capabilities with a new 4U server supporting eight NVIDIA HGX A100 GPUs. Supermicro offers the industry’s broadest portfolio of GPU systems, spanning 1U, 2U, 4U, and 10U GPU servers and SuperBlade servers in a wide range of customizable configurations.

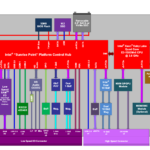

Supermicro now offers the industry’s widest and deepest selection of GPU systems, including the new NVIDIA HGX A100 8-GPU server, to power applications from the edge to the cloud. The portfolio includes 1U, 2U, 4U, and 10U rackmount GPU systems, as well as Ultra, BigTwin, and embedded solutions, powered by AMD EPYC processors and by Intel Xeon processors with Intel Deep Learning Boost technology.

Leveraging Supermicro’s advanced thermal design, including custom heatsinks and optional liquid cooling, the latest high-density 2U and 4U servers feature NVIDIA HGX A100 4-GPU and 8-GPU baseboards, respectively, along with a new 4U server supporting eight NVIDIA A100 PCI-E GPUs. Supermicro’s Advanced I/O Module (AIOM) form factor adds flexible, high-performance networking. The AIOM can be paired with the latest high-speed, low-latency PCI-E 4.0 storage and networking devices that support NVIDIA GPUDirect RDMA and GPUDirect Storage with NVMe over Fabrics (NVMe-oF) on NVIDIA Mellanox InfiniBand. This combination keeps the multi-GPU system fed with a continuous stream of data, without I/O bottlenecks. In addition, Supermicro’s Titanium Level power supplies, rated at 96% efficiency (the industry’s highest rating), reduce energy costs while providing redundant power for the GPUs.

The new 2U system features the NVIDIA HGX A100 4-GPU baseboard with Supermicro’s advanced heatsink design, which maintains optimal system temperature under full load in a compact form factor. The system provides high-bandwidth GPU peer-to-peer communication via NVIDIA NVLink, up to 8TB of DDR4 3200MHz system memory, five PCI-E 4.0 I/O slots supporting GPUDirect RDMA, and four hot-swappable NVMe drives with GPUDirect Storage capability.

The new 4U GPU system features the NVIDIA HGX A100 8-GPU baseboard, up to six NVMe U.2 and two NVMe M.2 drives, and ten PCI-E 4.0 x16 slots. Supermicro’s AIOM support accelerates 8-GPU communication and data flow between systems through the latest technology stacks, such as GPUDirect RDMA, GPUDirect Storage, and NVMe-oF on InfiniBand. The system uses NVIDIA NVLink and NVSwitch technology to interconnect its GPUs. It is ideal for large-scale deep learning training, neural network model applications at research institutions and national laboratories, supercomputing clusters, and HPC cloud services.

The industry’s highest-density GPU blade server supports up to 20 nodes in Supermicro’s 8U SuperBlade enclosure, with either two single-width GPUs per node (40 GPUs total) or one NVIDIA A100 Tensor Core PCI-E GPU per node (20 GPUs total). Fitting 20 NVIDIA A100 GPUs into 8U increases computing density in a smaller footprint, helping customers lower total cost of ownership (TCO). To sustain the performance and throughput that demanding AI applications require in this GPU-optimized configuration, the SuperBlade provides a 100% non-blocking HDR 200Gb/s InfiniBand network that accelerates deep learning and enables real-time analysis and decision making. High density, reliability, and upgradeability make the SuperBlade a strong building block for enterprises delivering AI-powered services.

Supermicro continues to support NVIDIA’s advanced GPUs in various form factors, optimized for customers’ unique use cases and requirements. The 1U GPU systems contain up to four NVIDIA GPUs with NVLink, including the NEBS Level 3-certified, 5G/edge-ready SYS-1029GQ. Supermicro’s 2U GPU systems, such as the SYS-2029GP-TR, can support up to six NVIDIA V100 GPUs with dual PCI-E root complex capability in one system. Finally, the 10U GPU servers, such as the SYS-9029GP-TNVRT, support 16 NVIDIA V100 SXM3 GPUs with dual Intel Xeon Scalable processors featuring built-in AI acceleration.

The flexible range of solutions powered by NVIDIA GPUs and GPU software from the NVIDIA NGC ecosystem gives organizations across many verticals the right building blocks for diverse workloads, from AI inference on trained models to HPC and high-end training.
