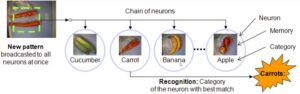

Machine learning, a type of artificial intelligence (AI), is typically used to create models for analyzing data. It is a programming process in which, instead of being coded explicitly as with traditional computer architectures, the computer is fed anywhere from dozens to thousands of sample data sets that show what does and does not fit the desired analytical model. A simple example of this process is pattern matching and recognition. The Intel® Curie Module, based on the Intel® Quark™ SE microcontroller, can perform simple pattern matching, a type of machine learning, because it has an array of 128 neurons of 128 bytes each. The neurons act as both a decision-making “engine” and memory, and they can be tuned with a “learning” technique similar to the one used in larger neural networks. The neurons hold weighted information, and the “weight” given to each neural connection in the network can be adjusted as the algorithm that builds the analytical model is tuned.
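To make the idea of a fixed neuron array concrete, here is a minimal Python sketch of a pattern matcher with 128 “neurons,” each storing up to 128 bytes of a learned reference pattern. It assumes a simple nearest-neighbor scheme using L1 (Manhattan) distance; the `PatternMatcher` class and its methods are hypothetical illustrations, not the Curie module's actual API or necessarily its exact algorithm.

```python
from dataclasses import dataclass
from typing import List, Optional

NUM_NEURONS = 128   # size of the neuron array described above
VECTOR_BYTES = 128  # bytes of pattern memory per neuron


@dataclass
class Neuron:
    pattern: bytes  # stored reference pattern, zero-padded to VECTOR_BYTES
    category: int   # label learned for that pattern


class PatternMatcher:
    """Hypothetical nearest-neighbor matcher over a fixed-size neuron array.

    An illustration only; this is not the Curie module's API.
    """

    def __init__(self) -> None:
        self.neurons: List[Neuron] = []

    @staticmethod
    def _pad(pattern: bytes) -> bytes:
        if len(pattern) > VECTOR_BYTES:
            raise ValueError("pattern exceeds neuron memory")
        return pattern.ljust(VECTOR_BYTES, b"\0")

    def learn(self, pattern: bytes, category: int) -> None:
        # "Learning" here commits one free neuron to a labeled example.
        if len(self.neurons) >= NUM_NEURONS:
            raise RuntimeError("all neurons are committed")
        self.neurons.append(Neuron(self._pad(pattern), category))

    def classify(self, pattern: bytes) -> Optional[int]:
        # Return the category of the closest stored pattern (L1 distance),
        # or None if nothing has been learned yet.
        if not self.neurons:
            return None
        probe = self._pad(pattern)

        def distance(neuron: Neuron) -> int:
            return sum(abs(a - b) for a, b in zip(probe, neuron.pattern))

        return min(self.neurons, key=distance).category


if __name__ == "__main__":
    matcher = PatternMatcher()
    matcher.learn(bytes([10, 10, 10]), category=1)
    matcher.learn(bytes([200, 200, 200]), category=2)
    print(matcher.classify(bytes([12, 9, 11])))      # 1
    print(matcher.classify(bytes([190, 210, 205])))  # 2
```

Once all 128 neurons are committed, this sketch simply refuses new examples; real hardware may handle a full array differently.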

In a Princeton lecture, Rob Schapire describes machine learning as a core subset of AI, because our understanding of intelligence necessarily includes the act of learning. Programming as we know it, in which every behavior is specified in advance, therefore cannot by itself give AI the ability to learn on its own. As Schapire puts it, “The machine learning paradigm can be viewed as ‘programming by example.’”[i]
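“Programming by example” can be illustrated with a toy contrast. In the Python sketch below, one rule has its threshold picked by the developer, while the other derives its threshold from labeled samples by choosing the cut point that misclassifies the fewest of them. The sensor readings, labels, and function names are hypothetical, and a one-dimensional threshold is far simpler than any real learning system.

```python
from typing import Callable, List, Tuple

Sample = Tuple[float, int]  # (sensor reading, label: 1 = flag, 0 = ignore)


def hand_coded_rule(reading: float) -> int:
    # Traditional programming: the developer chooses the threshold.
    return 1 if reading > 50.0 else 0


def count_errors(rule: Callable[[float], int], samples: List[Sample]) -> int:
    # Number of samples the rule labels differently from the examples.
    return sum(rule(r) != label for r, label in samples)


def learn_threshold(samples: List[Sample]) -> float:
    # Programming by example: try midpoints between consecutive sorted
    # readings and keep the one that misclassifies the fewest samples.
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    readings = sorted(r for r, _ in samples)
    candidates = [(a + b) / 2 for a, b in zip(readings, readings[1:])]
    return min(candidates,
               key=lambda t: count_errors(lambda r: int(r > t), samples))


if __name__ == "__main__":
    training = [(12.0, 0), (20.0, 0), (31.0, 0), (38.0, 0),
                (44.0, 1), (52.0, 1), (67.0, 1), (80.0, 1)]
    t = learn_threshold(training)
    print(t)                                             # 41.0
    print(count_errors(hand_coded_rule, training))       # 1 (misses 44.0)
    print(count_errors(lambda r: int(r > t), training))  # 0
```

The learned rule fits the examples it was given; the hand-coded rule is only as good as the developer's guess.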

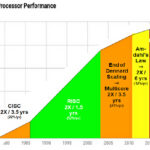

Neural networks run on semiconductor chips, just as traditional computer architectures (e.g., Intel® x86 or ARM® Cortex™-A) do, but they are structured very differently and are said to be unaffected by Moore’s Law. “Programming” a neural network is in some ways easier and in other ways more difficult. It is easier because there is no programming in the traditional sense: the usual methods, such as writing C code, do not apply. Instead, a neural network is given sets of correct and incorrect samples, and each new sample both builds and shapes the analytical model. The difficult part, then, is finding up to thousands of samples that do and do not fit the desired model.

Like the human brain they are modeled after, which learns and prunes based on what a person experiences, neural networks can learn what does and does not fit a model and can change over time as the data changes. Large neural networks produce remarkably good results: they can, for instance, recognize patterns, objects, or movements in a video stream and flag those that do not fit the model learned from all prior samples. In this way, machines can see, decide whether an object on an assembly line matches, and pass or reject it, and in many cases they can now do this faster and more accurately than humans. Some examples of AI used in industry:
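The idea that each new sample “builds and shapes” the model can be sketched with a perceptron, one of the simplest trainable neurons. It is a stand-in chosen for illustration, not a description of any specific product: whenever the neuron misclassifies a sample, its connection weights are nudged toward the correct answer. The toy data set (a logical AND, where only the input (1, 1) “fits” the model) and all names are hypothetical.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]  # (input features, 1 = fits model, 0 = does not)


def predict(weights: List[float], bias: float, x: Sequence[float]) -> int:
    # Weighted sum of the inputs, thresholded at zero.
    activation = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if activation > 0 else 0


def train_perceptron(samples: List[Sample], n_features: int,
                     epochs: int = 20, lr: float = 1.0) -> Tuple[List[float], float]:
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, label in samples:
            error = label - predict(weights, bias, x)  # -1, 0, or +1
            if error:
                # Perceptron rule: shift each weight in proportion to its input.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                mistakes += 1
        if mistakes == 0:  # every sample classified correctly; stop early
            break
    return weights, bias


if __name__ == "__main__":
    data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
    w, b = train_perceptron(data, n_features=2)
    print([predict(w, b, x) for x, _ in data])  # [0, 0, 0, 1]
```

With zero initial weights, the learning rate only rescales the weights without changing any prediction, so `lr=1.0` is used here to keep the arithmetic exact.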

- Security monitoring. E.g., when an unauthorized object enters a monitored area, the AI process alerts a person for further inspection.

- Fraud protection. E.g., after months of monitoring your past and recent credit usage patterns, an AI process denies a transaction that does not match your spending patterns, and the bank alerts you via text to contact them.

- Spam filtering

- Text-to-speech

- Object recognition despite orientation or disorder of objects in the image

- Preference predictions for online suggestions (in advertising or with services like Netflix)
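The fraud-protection example can be approximated, very roughly, by flagging a purchase that sits far outside a customer's usual spending. The Python sketch below uses a z-score test (how many standard deviations an amount lies from the mean of past purchases). This simple statistical rule is a swapped-in illustration, not how any bank's AI actually works, and the threshold of 3 and the sample history are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence


def is_suspicious(history: Sequence[float], amount: float,
                  threshold: float = 3.0) -> bool:
    """Return True if `amount` lies more than `threshold` standard
    deviations from the mean of the customer's past purchases."""
    if len(history) < 2:
        return False  # too little history to establish a pattern
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        return amount != mu  # every past purchase was identical
    return abs(amount - mu) / sigma > threshold


if __name__ == "__main__":
    past = [42.5, 38.0, 55.2, 47.9, 40.1, 51.3, 44.8, 39.6]
    print(is_suspicious(past, 49.0))    # False
    print(is_suspicious(past, 1250.0))  # True
```

A learned model would weigh many more signals (merchant, location, time of day), but the principle of comparing a new event against an established pattern is the same.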

“Deep learning” is more involved than machine learning but is still considered a subset of it. Deep learning uses many more layers of neurons, forming larger neural networks than conventional machine learning. DeepMind, a company Google acquired in 2014, has demonstrated AI that can learn with less data by using a more computing-intensive approach called reinforcement learning, in which a system learns from the rewards its actions earn.[ii] However, the industry is relatively new, and many AI concepts have no standard definition; IBM, for example, describes its AI engine, Watson, as “cognitive computing.”
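Reinforcement learning can be shown at toy scale with tabular Q-learning, a classic reinforcement learning algorithm. In the Python sketch below, an agent on a five-cell track learns, purely from the reward it receives on reaching the right-hand end, that moving right is the best action in every cell. Deep reinforcement learning, as in DeepMind's work, replaces the small lookup table with a deep neural network; this sketch omits that part, and the environment, parameter values, and names are hypothetical.

```python
import random
from typing import List, Tuple

N_STATES = 5         # cells 0..4 on a one-dimensional track
GOAL = N_STATES - 1  # reaching cell 4 ends the episode with reward 1
ACTIONS = (-1, +1)   # move left or move right


def step(state: int, action: int) -> Tuple[int, float]:
    # Deterministic environment: move one cell, staying inside the track.
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def train_q(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
            seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            a = rng.randrange(len(ACTIONS))  # explore by acting at random
            next_state, reward = step(state, ACTIONS[a])
            # Value of the best action from the next state (0 at the goal).
            future = 0.0 if next_state == GOAL else max(q[next_state])
            # Q-learning update: move the estimate toward reward + discounted future.
            q[state][a] += alpha * (reward + gamma * future - q[state][a])
            state = next_state
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    # Best learned action in each non-goal cell.
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda a: row[a])]
            for row in q[:GOAL]]


if __name__ == "__main__":
    print(greedy_policy(train_q()))  # [1, 1, 1, 1]
```

No example ever tells the agent which move is correct; the preference for moving right emerges from the discounted reward propagating back through the table.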

As for hardware, machine learning requires high-performance, semiconductor-based processing power, including parallel processing. Intel’s approach to AI is to offer high-performance CPUs tuned for AI, whereas Nvidia’s strategy is to provide GPUs working in parallel for extremely fast computation. Some observers argue that Nvidia will need to keep scaling up the number of GPUs operating in parallel to grow with the AI industry, and question whether GPUs can meet that challenge. That remains to be seen, but thanks to affordable high-performance computing, AI (conceived in the 1950s) has regained prominence after decades of near neglect. Affordable parallel and distributed processing has been the tipping point that made AI accessible: high-performance computing has never been cheaper, and companies including Google, Facebook, Microsoft, Amazon, and countless others will exploit whatever technology pushes their services and applications to the leading edge.
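Parallel hardware suits neural networks because the neurons in one layer can be computed independently: each output is just the dot product of that neuron's weights with the shared inputs. The Python sketch below shows this decomposition by handing each neuron to a worker thread. It illustrates the structure only, since CPython threads will not actually speed up pure-Python arithmetic, whereas GPUs and AI-tuned CPUs execute many such dot products at once in hardware. The function names and the tiny weight matrix are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def neuron_output(weights_row: Sequence[float], inputs: Sequence[float]) -> float:
    # One neuron: dot product of its weights with the layer's inputs.
    return sum(w * x for w, x in zip(weights_row, inputs))


def layer_serial(weights: List[Sequence[float]], inputs: Sequence[float]) -> List[float]:
    return [neuron_output(row, inputs) for row in weights]


def layer_parallel(weights: List[Sequence[float]], inputs: Sequence[float],
                   workers: int = 4) -> List[float]:
    # Each neuron depends only on its own weights and the shared inputs,
    # so rows can be processed by independent workers in any order;
    # Executor.map still returns results in the original row order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(neuron_output, weights, [inputs] * len(weights)))


if __name__ == "__main__":
    w = [[1, 2], [3, 4], [5, 6]]  # three neurons, two inputs each
    x = [1, 1]
    print(layer_serial(w, x))    # [3, 7, 11]
    print(layer_parallel(w, x))  # [3, 7, 11]
```

Because no neuron waits on another, adding more processing units (GPU cores, or more GPUs) lets more of a layer be computed at the same time.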

[i] Schapire, Rob. “Theoretical Machine Learning.” Lecture #1. Princeton University, Princeton, NJ. Web. 23 Nov. 2016. http://www.cs.princeton.edu/courses/archive/spr08/cos511/scribe_notes/0204.pdf

[ii] Regalado, Antonio. “Is Google Cornering the Market on Deep Learning?” MIT Technology Review. N.p., 29 Jan. 2014. Web. 24 Nov. 2016.
