At some point in the not-too-distant future, quantum computing is expected to pose a security risk to widely used encryption techniques. Many current public-key methods, such as RSA, rely on the difficulty of factoring large numbers into their prime factors. They provide good levels of security when classical computers are being used, but quantum […]
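
To make the factoring point concrete, here is a toy RSA sketch in Python. It is illustrative only and assumes deliberately tiny primes (61 and 53) so that plain trial division can factor the modulus. With real key sizes that factoring step is infeasible on classical computers, and it is that step a sufficiently large quantum computer running Shor's algorithm is expected to make practical. The function names are hypothetical, and this is not a usable RSA implementation (no padding, insecure key size).

```python
from math import gcd

# Toy RSA with deliberately tiny primes: insecure, for illustration only.
def make_keys(p: int, q: int, e: int):
    n = p * q
    phi = (p - 1) * (q - 1)           # Euler's totient of n
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime with phi(n)")
    d = pow(e, -1, phi)               # modular inverse (Python 3.8+)
    return (n, e), d

def encrypt(m: int, public) -> int:
    n, e = public
    return pow(m, e, n)

def decrypt(c: int, n: int, d: int) -> int:
    return pow(c, d, n)

def trial_factor(n: int):
    # Brute-force factoring: feasible here only because n is tiny.
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n is prime")

public, d = make_keys(61, 53, 17)
n, e = public
c = encrypt(65, public)

# An attacker who can factor n can rebuild the private exponent.
p, q = trial_factor(n)
d_recovered = pow(e, -1, (p - 1) * (q - 1))
print(c, decrypt(c, n, d), d_recovered)   # 2790 65 2753
```

The only secret that protects the private exponent is the factorization of n; once p and q are known, recomputing d is a single modular inverse.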

FAQ

Post-quantum crypto standardization — what’s the end game?

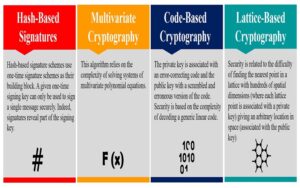

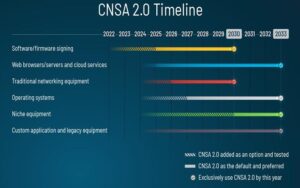

The post-quantum cryptography (PQC) standardization program being run by the National Institute of Standards and Technology (NIST) is developing encryption algorithms to protect classical computers from attacks by quantum computers. NIST has announced the first four PQC algorithms and is preparing them for final development. In the meantime, organizations of all types are analyzing the […]

What’s a quantum processing unit?

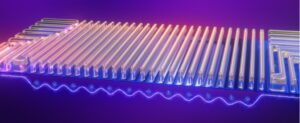

Quantum processing units (QPUs) are the quantum equivalent of microcontrollers (MCUs) in electronic equipment. QPUs and the quantum computers that use them are evolving and heading toward large-scale computing. This FAQ looks at current quantum computing architectures and how they are evolving, and it reviews currently available QPUs and efforts to develop QPUs based on superconductivity […]

IoT: Microcontrollers and sensors must work as a team

Get an understanding of how to select sensors and microcontrollers for your IoT product, with an emphasis on circuit design to optimize performance. Sensors in IoT devices collect data from the environment, after which the data can be processed and analyzed to make intelligent decisions. The quality of your sensors and how well you integrate […]

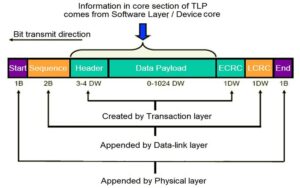

How does the PCIe protocol stack work?

The Peripheral Component Interconnect Express (PCIe) is a high-speed serial computer expansion bus standard. It’s the common motherboard interface in systems from personal computers to servers and storage devices and is used with graphics cards, sound cards, hard disk drive host adapters, SSDs, Wi-Fi, and Ethernet hardware connections. It uses a three-layer protocol stack to […]
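
The three layers of the PCIe stack are the transaction, data link, and physical layers, and each wraps the packet handed down from the layer above. The Python sketch below illustrates that encapsulation under simplifying assumptions: the data link layer adds a 12-bit sequence number and a 32-bit LCRC, and the physical layer adds Gen1/Gen2-style start (STP) and end (END) framing symbols, shown here as strings rather than real 8b/10b symbols. The LCRC is a hypothetical stand-in computed with `zlib.crc32` and may not be bit-exact with the specification, and the all-zero header is a placeholder, not a valid TLP.

```python
import zlib
from dataclasses import dataclass

@dataclass
class Frame:
    start: str   # placeholder for the physical-layer start-of-TLP symbol
    body: bytes  # sequence number + TLP + LCRC from the data link layer
    end: str     # placeholder for the end-of-packet symbol

def transaction_layer(header: bytes, data: bytes = b"") -> bytes:
    # A TLP is a header plus an optional data payload (optional ECRC omitted).
    return header + data

def lcrc_stand_in(b: bytes) -> bytes:
    # Stand-in only: zlib.crc32 may not be bit-exact with the spec's LCRC.
    return zlib.crc32(b).to_bytes(4, "big")

def data_link_layer(tlp: bytes, seq: int) -> bytes:
    # 12-bit sequence number in a 2-byte field (upper 4 bits reserved),
    # then the TLP, then a 4-byte LCRC covering both.
    seq_field = (seq & 0x0FFF).to_bytes(2, "big")
    return seq_field + tlp + lcrc_stand_in(seq_field + tlp)

def physical_layer(link_packet: bytes) -> Frame:
    # Gen1/Gen2-style framing: start and end symbols around the packet.
    return Frame("STP", link_packet, "END")

def receive(frame: Frame):
    # Undo the encapsulation in reverse order and check the stand-in LCRC.
    if (frame.start, frame.end) != ("STP", "END"):
        raise ValueError("bad framing")
    body, crc = frame.body[:-4], frame.body[-4:]
    if lcrc_stand_in(body) != crc:
        raise ValueError("LCRC mismatch: the link would NAK and replay the TLP")
    seq = int.from_bytes(body[:2], "big") & 0x0FFF
    return seq, body[2:]

header = bytes(12)   # hypothetical all-zero 3-DW header, not a valid TLP
tlp = transaction_layer(header, b"\xde\xad\xbe\xef")
frame = physical_layer(data_link_layer(tlp, seq=0x1234))
print(len(frame.body))                   # 22 (2 + 16 + 4)
print(receive(frame) == (0x234, tlp))    # True
```

The receiver peels the layers off in the opposite order, which is why a corrupted LCRC is caught at the data link layer before the transaction layer ever sees the packet.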

What are the five types of quantum computers?

There are several ways to make the quantum bits (qubits) that comprise quantum computers. The classic image of the “chandelier” descending into a cryostat represents only qubits that need to operate near 0 kelvin (K). They are used by IBM, Google, and others, but those pictures present a very limited view of quantum computing. This […]

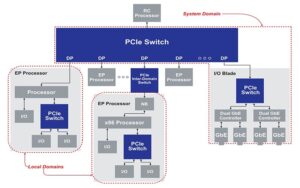

What’s a PCIe root complex?

The root complex in PCI Express (PCIe) is the intermediary between the system’s central processing unit (CPU), memory, and the PCIe switch fabric that includes one or more PCIe or PCI devices. It uses the link training and status state machine (LTSSM) to manage the links to connected PCIe devices. The LTSSM detects, polls, configures, recovers, resets, and […]
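
The main LTSSM training path can be pictured as a small state machine. The Python sketch below models a simplified subset of the specification's top-level states (Detect, Polling, Configuration, L0, Recovery, Hot Reset); the real LTSSM also has L0s, L1, L2, Loopback, and Disabled states, plus substates within each. The event names are hypothetical labels, not spec terminology, and sending every unlisted event back to Detect is a deliberate simplification.

```python
from enum import Enum, auto

class Ltssm(Enum):
    DETECT = auto()
    POLLING = auto()
    CONFIGURATION = auto()
    L0 = auto()          # normal operation: link up, packets flowing
    RECOVERY = auto()
    HOT_RESET = auto()

# (state, event) -> next state. Event names are hypothetical labels.
TRANSITIONS = {
    (Ltssm.DETECT, "receiver_detected"): Ltssm.POLLING,
    (Ltssm.POLLING, "training_sets_exchanged"): Ltssm.CONFIGURATION,
    (Ltssm.CONFIGURATION, "width_and_lanes_agreed"): Ltssm.L0,
    (Ltssm.L0, "retrain_needed"): Ltssm.RECOVERY,
    (Ltssm.RECOVERY, "retrained"): Ltssm.L0,
    (Ltssm.RECOVERY, "hot_reset_requested"): Ltssm.HOT_RESET,
    (Ltssm.HOT_RESET, "reset_complete"): Ltssm.DETECT,
}

def step(state: Ltssm, event: str) -> Ltssm:
    # Simplification: any unlisted event is treated as a failure -> Detect.
    return TRANSITIONS.get((state, event), Ltssm.DETECT)

def run(events, state: Ltssm = Ltssm.DETECT):
    # Return the full sequence of states visited, starting state included.
    history = [state]
    for event in events:
        state = step(state, event)
        history.append(state)
    return history

path = run(["receiver_detected", "training_sets_exchanged",
            "width_and_lanes_agreed", "retrain_needed", "retrained"])
print([s.name for s in path])
# ['DETECT', 'POLLING', 'CONFIGURATION', 'L0', 'RECOVERY', 'L0']
```

A table-driven design keeps the allowed transitions in one place, so adding the omitted power-management or loopback states would mean adding rows rather than rewriting control flow.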

Post-quantum crypto standardization — what’s next?

Analysis and possible pushback from users may be among the next steps in the development of post-quantum cryptography (PQC) by the National Institute of Standards and Technology (NIST). NIST has started the process of standardizing the four finalist algorithms. That’s the last step before developing the mathematical tools and making them available so developers […]

Post-quantum crypto standardization — where we are

Post-quantum cryptography (PQC) standardization is a program being run by the National Institute of Standards and Technology (NIST). It began in 2016, and by the end of 2017, NIST had received 82 submissions, of which 69 were accepted for further consideration. Through successive rounds of elimination, the number of proposals dropped from 69 to […]

How many PCIe card sizes exist today, and where are they used?

There are five standard sizes for PCIe cards and two sizes of mini PCIe. In addition, some larger PCIe slots can accommodate more than one card using a process called bifurcation. This FAQ looks at the five standard sizes and common uses for them, then turns to mini PCIe and its applications, and closes with […]
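
Bifurcation splits one wide slot's lanes into several narrower links. The Python sketch below assumes a hypothetical table of supported modes for a single x16 slot (which modes a real platform offers is set by its CPU or chipset and firmware) and maps each link in a chosen mode to the physical lanes it would occupy. The names `SUPPORTED_MODES` and `lane_map` are illustrative, not from any real tool.

```python
# Hypothetical bifurcation modes for one x16 slot; real support
# depends on the platform (CPU/chipset and firmware settings).
SUPPORTED_MODES = {
    (16,),
    (8, 8),
    (8, 4, 4),
    (4, 4, 8),
    (4, 4, 4, 4),
}

def lane_map(mode):
    """Return inclusive (first_lane, last_lane) ranges, one per link."""
    mode = tuple(mode)
    if mode not in SUPPORTED_MODES:
        raise ValueError(f"unsupported bifurcation mode: {mode}")
    ranges, start = [], 0
    for width in mode:
        # Each link takes the next `width` contiguous lanes.
        ranges.append((start, start + width - 1))
        start += width
    return ranges

print(lane_map([8, 4, 4]))   # [(0, 7), (8, 11), (12, 15)]
```

Checking against an explicit table, rather than accepting any split that sums to 16, mirrors the fact that platforms expose only specific bifurcation modes.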