The flexible computational architecture of RISC-V can be leveraged by designers of massively parallel computational storage solutions, hard disk drives (HDDs) and solid-state drives (SSDs), and memory fabric architectures such as Ethernet Bunch of Flash (EBOF) or Just a Bunch of Flash (JBOF) to implement high-performance solutions. This article looks at the use of RISC-V memory management in system-on-chip (SoC) devices, how RISC-V enhances the performance of HDDs, and how RISC-V can be used with both HDDs and SSDs in computational storage applications. It closes with a look at two open-source fabric architectures that are being optimized for RISC-V.

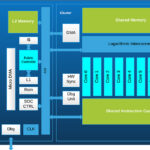

Domain-specific accelerators (DSAs), such as compression/decompression units, random number generators, and network packet processors, are becoming increasingly common in SoC designs. A standard I/O interconnect such as an Advanced eXtensible Interface (AXI) bus is typically used to connect the DSA and the core. RISC-V-based SoCs can be used in novel ways to connect a DSA with the core and optimize high-bandwidth data transfers. In a typical architecture, a direct memory access (DMA) engine connects the DSA to banks of double data rate (DDR), low-power DDR (LPDDR), or high-bandwidth memory (HBM). RISC-V supports the implementation of application-specific capabilities that enable massively parallel computational storage solutions.

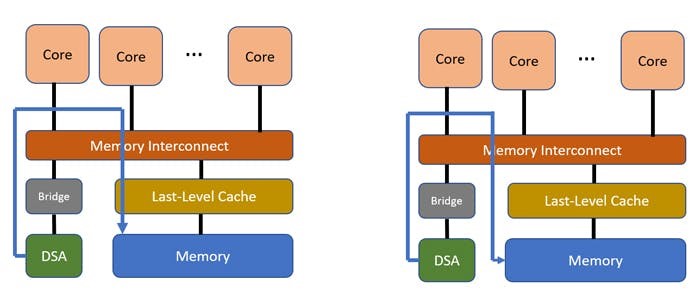

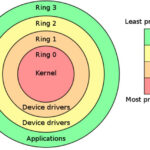

In the conventional approach using a DMA engine (on the left in the figure), data transfers often involve allocating the data in the last-level cache (LLC) first. If the volume of transferred data is greater than the LLC size, the transfer evicts other cached data and can significantly slow down accesses.

The use of RISC-V enables an alternative approach (on the right in the figure) where data is written directly to memory, bypassing the LLC. The data can be marked as uncached and written directly to memory, or it can be marked as cached, in which case the DMA engine tells the LLC not to allocate the data but to write it through to memory. The second approach is more complex: because the data is marked as cacheable, any other cached copies of the data must be invalidated within the processor complex.
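The effect of a large transfer on the LLC can be illustrated with a toy model. The sketch below is not a model of any real RISC-V SoC: it assumes a fully associative LLC with LRU replacement, and the class `LLC`, the function `cpu_hits_after_dma`, and all sizes are hypothetical names and values chosen for illustration. It counts how many lines of the core's working set are still cached after a DMA transfer, with and without LLC bypass.

```python
from collections import OrderedDict


class LLC:
    """Toy last-level cache: fully associative, LRU, one entry per cache line."""

    def __init__(self, capacity_lines):
        self.capacity = capacity_lines
        self.lines = OrderedDict()

    def touch(self, addr):
        """Access a line through the cache; return True on a hit."""
        hit = addr in self.lines
        if hit:
            self.lines.move_to_end(addr)  # mark as most recently used
        else:
            if len(self.lines) >= self.capacity:
                self.lines.popitem(last=False)  # evict least recently used
            self.lines[addr] = True
        return hit

    def invalidate(self, addr):
        """Drop any stale cached copy of a line written directly to memory."""
        self.lines.pop(addr, None)


def cpu_hits_after_dma(bypass_llc, llc_lines=64, working_set=32, dma_lines=256):
    """Return how many working-set lines still hit in the LLC after a DMA write."""
    llc = LLC(llc_lines)
    cpu_addrs = range(working_set)
    dma_addrs = range(1000, 1000 + dma_lines)
    for addr in cpu_addrs:  # warm the cache with the core's working set
        llc.touch(addr)
    for addr in dma_addrs:
        if bypass_llc:
            llc.invalidate(addr)  # data goes straight to memory
        else:
            llc.touch(addr)  # data is allocated in the LLC first
    return sum(llc.touch(addr) for addr in cpu_addrs)


print(cpu_hits_after_dma(bypass_llc=False))  # 0: the transfer evicted the working set
print(cpu_hits_after_dma(bypass_llc=True))   # 32: the working set survived
```

In this simplified model, a transfer four times the size of the LLC flushes the core's entire working set in the conventional path, while the bypass path leaves it untouched and only pays for invalidating stale copies.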

RISC-V offers several options for connecting the core and a DSA. A typical implementation using a mesh topology has fixed-path routing, so the interconnect can guarantee ordering and thereby allow very high-bandwidth access to DSA memory. RISC-V also includes two optional modes of I/O ordering. The very conservative I/O ordering mode can be used selectively to guarantee strong ordering where required. The relaxed ordering mode, in which I/O loads and stores can be reordered, is intended for high-bandwidth applications.
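The difference between the two ordering modes can be sketched with a small enumeration. This is a deliberately simplified model, not the RISC-V memory model itself: it assumes that in relaxed mode any two I/O operations may be reordered unless they target the same address or one of them is a fence. The function `allowed_orders` and the operation tuples are hypothetical.

```python
from itertools import permutations


def allowed_orders(ops, mode):
    """List the completion orders permitted for I/O ops issued in program order.

    ops  -- list of (kind, addr) tuples; kind is "load", "store", or "fence"
    mode -- "strong" (program order only) or "relaxed" (simplified assumption:
            only same-address pairs and fences constrain the order)
    """
    if mode == "strong":
        return [tuple(ops)]
    n = len(ops)
    result = []
    for perm in permutations(range(n)):
        position = {op_index: p for p, op_index in enumerate(perm)}
        ok = True
        for i in range(n):
            for j in range(i + 1, n):
                is_fence = ops[i][0] == "fence" or ops[j][0] == "fence"
                same_addr = ops[i][1] == ops[j][1]
                if (is_fence or same_addr) and position[i] > position[j]:
                    ok = False  # this permutation breaks a required ordering
        if ok:
            result.append(tuple(ops[k] for k in perm))
    return result


ops = [("store", 0x10), ("store", 0x20), ("load", 0x10)]
print(len(allowed_orders(ops, "strong")))   # 1
print(len(allowed_orders(ops, "relaxed")))  # 3

fenced = [("store", 0x10), ("fence", None), ("store", 0x20)]
print(len(allowed_orders(fenced, "relaxed")))  # 1
```

The extra legal orders in relaxed mode are what give the interconnect freedom to pipeline transfers; inserting a fence restores a single order only at the points where the software actually needs it.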

HDDs, SSDs, and computational storage

RISC-V processors bring several benefits to HDDs, SSDs, and computational storage. Starting with HDDs, RISC-V can support the ultra-precise motion control and positioning that future generations of devices will require. Within five years, HDD capacities are expected to reach 50TB. Achieving that capacity will require positioning accuracy capable of moving to a spot on the disk that is 30,000 times smaller than the width of a strand of human hair. Realizing that level of precision at the necessary speeds requires massive real-time computation. The ability to customize the RISC-V ISA is expected to be a key factor in achieving that performance.
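To put the 30,000-times figure in absolute terms, the short calculation below converts it to nanometers. The hair width of 75 micrometers is an assumption made here for illustration (human hair varies widely in width), and the function name `positioning_target_nm` is hypothetical.

```python
def positioning_target_nm(hair_width_um=75.0, factor=30_000):
    """Return the positioning target in nanometers.

    hair_width_um -- assumed hair width in micrometers (not from the article)
    factor        -- how many times smaller the target is than a hair width
    """
    hair_width_nm = hair_width_um * 1000.0  # 1 micrometer = 1000 nanometers
    return hair_width_nm / factor


print(f"{positioning_target_nm():.1f} nm")  # 2.5 nm
```

Under that assumption, the head must settle on a target only a few nanometers across, which gives a sense of why the servo loop needs heavy real-time computation.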

For example, engineers at Seagate recently designed two RISC-V cores for HDD applications. The lower-performance core is optimized for lightweight and security-critical designs. The higher-performance core delivers up to three times the performance of current non-RISC-V solutions in real-time HDD applications.

Makers of HDDs and SSDs expect RISC-V to bring lower latency, power savings, higher drive capacities, internal computational capabilities in storage drives, and improved security for data created at the edge of the network. Computational storage devices, both HDD-based and SSD-based, are not designed to replace the high-performance CPUs in servers; they are expected to collaborate with those CPUs and augment the processing efficiency of the system.

RISC-V-based computational HDDs will be able to process data at the drive level instead of moving it to application servers. That will reduce network traffic and relieve I/O bottlenecks. If they are commercially successful, these HDD-based computational drives will provide a lower-cost alternative to computational SSDs. Real-time analytics, artificial intelligence, and machine learning tasks that rely on data from edge devices are expected to benefit especially from the development of computational HDDs.
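The traffic reduction from processing at the drive can be sketched with a simple count of bytes moved. The example below is a minimal illustration, not a model of any real computational storage interface: the function `bytes_over_network`, the record size, and the filter are all hypothetical.

```python
def bytes_over_network(records, predicate, record_size, on_drive):
    """Return the bytes sent to the application server for a filter query.

    on_drive=False -- every record is shipped and filtered by the server
    on_drive=True  -- the drive runs the filter and ships only the matches
    """
    if on_drive:
        return sum(record_size for r in records if predicate(r))
    return record_size * len(records)


readings = list(range(1000))          # hypothetical sensor readings
is_alarm = lambda value: value >= 990  # hypothetical filter: keep only alarms

print(bytes_over_network(readings, is_alarm, 64, on_drive=False))  # 64000
print(bytes_over_network(readings, is_alarm, 64, on_drive=True))   # 640
```

The more selective the query, the larger the saving, which is why filter-heavy analytics on edge data is the kind of workload the article expects to benefit.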

Memory fabrics, EBOF and JBOF

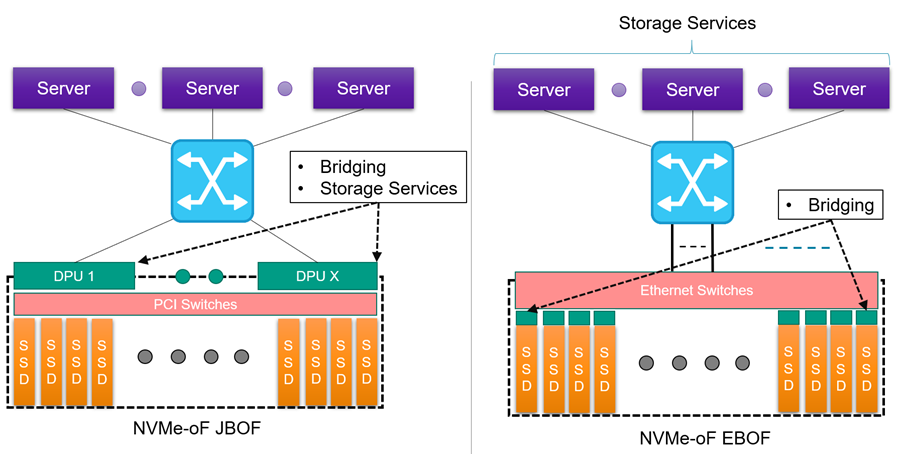

The two common ways to connect a “bunch of flash” memory into an NVMe over Fabrics (NVMe-oF) network are to use Ethernet to create an Ethernet bunch of flash (EBOF) or a PCIe switch to create just a bunch of flash (JBOF). Both an EBOF and a JBOF connect back to the servers using NVMe-oF.

Other than the Ethernet versus PCIe switching, the main difference between JBOF and EBOF is where the NVMe to NVMe-oF conversion occurs. In a JBOF, the conversion, or bridging, occurs at the periphery of the shelf using one or more data processing units (DPUs). In an EBOF, the bridging occurs within the SSD carrier or enclosure. There are tradeoffs between the two approaches: a JBOF uses the local processing capabilities of the DPU to run storage services and deliver better overall system performance, but the DPU can be a potential bottleneck, and the JBOF approach is more expensive and consumes more power than the EBOF approach.
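The bottleneck tradeoff can be made concrete with a back-of-the-envelope throughput model. Everything in the sketch below is an assumption for illustration: the functions `jbof_throughput` and `ebof_throughput`, the idea that throughput is simply capped by the narrowest stage, and all bandwidth figures are hypothetical and do not describe any real product.

```python
def jbof_throughput(ssd_gbps, n_ssds, dpu_gbps, n_dpus):
    """Shelf throughput when all traffic is bridged by DPUs at the shelf edge."""
    return min(ssd_gbps * n_ssds, dpu_gbps * n_dpus)


def ebof_throughput(ssd_gbps, n_ssds, bridge_gbps, switch_gbps):
    """Shelf throughput when each SSD carrier bridges NVMe to NVMe-oF itself."""
    per_drive = min(ssd_gbps, bridge_gbps)
    return min(per_drive * n_ssds, switch_gbps)


# Hypothetical shelf: 24 SSDs at 25 Gb/s each.
print(jbof_throughput(ssd_gbps=25, n_ssds=24, dpu_gbps=200, n_dpus=2))          # 400
print(ebof_throughput(ssd_gbps=25, n_ssds=24, bridge_gbps=25, switch_gbps=800))  # 600
```

With these made-up numbers the two DPUs cap the JBOF shelf below the aggregate drive bandwidth, while the EBOF scales with the number of drives until the Ethernet switch saturates. The model ignores the storage services a DPU can run, which is the other side of the tradeoff.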

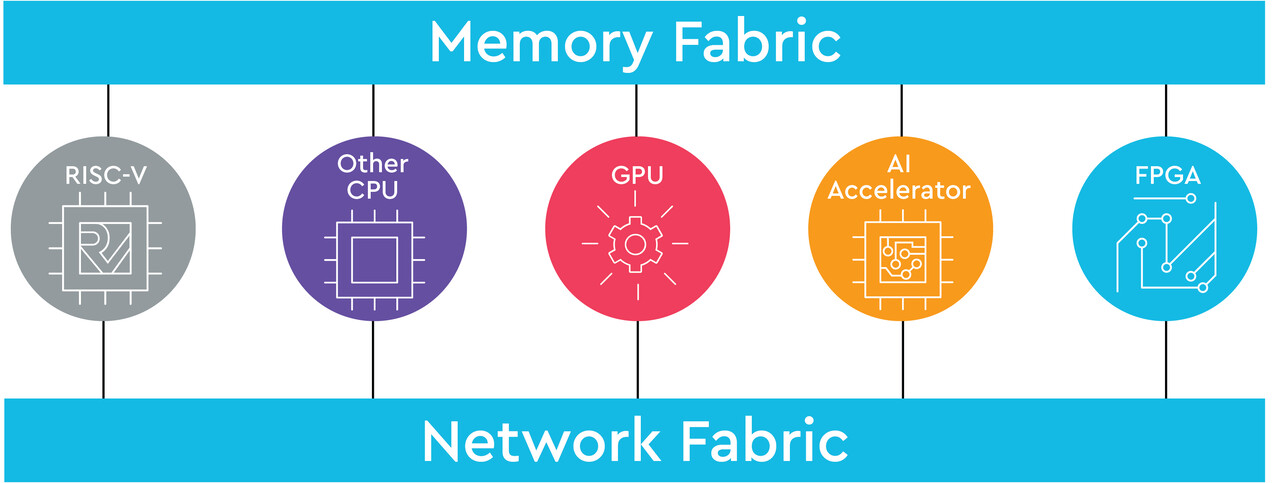

RISC-V International and the CHIPS (Common Hardware for Interfaces, Processors, and Systems) Alliance have entered into a collaboration to update the OmniXtend cache coherency specification and protocol and to build out developer tools for OmniXtend. Originally developed by Western Digital, OmniXtend is an open cache coherence protocol that uses the programmability of modern Ethernet switches to enable processors’ caches, memory controllers, and accelerators to exchange coherence messages directly over an Ethernet-compatible fabric. It is an open solution for efficiently attaching persistent memory to processors, and it offers a path toward future advanced fabrics that connect compute, storage, memory, and I/O components.

A joint OmniXtend working group has been formed, which will focus on creating an open, cache-coherent, unified memory standard for multicore compute architectures. The OmniXtend specification and protocol will be updated. The architectural simulation models will be extended, a reference register-transfer level (RTL) implementation will be developed, and a verification workbench will be established.

RISC-V and Gen-Z fabric

Gen-Z is an open-system, fabric-based architecture designed to interconnect processors, memory devices, and accelerators. Gen-Z supports a wide range of applications by enabling resource provisioning and sharing, so that system configurations can adapt as application needs for different resources change. Gen-Z was developed to provide increased performance, higher bandwidth, lower latencies, software efficiency, power optimization, and improved security. Some of the features of the Gen-Z fabric include:

- The memory and memory controllers are abstracted in Gen-Z, supporting the deployment of a wide range of memory media types. Multiple generations of memory devices and new storage media technologies can be transparently supported.

- Various components such as FPGA accelerators, memory modules, nonvolatile memory modules, and GPUs can be taken offline and independently replaced and upgraded as needed.

- The Gen-Z fabric is reconfigurable and can accommodate varying resource requirements of existing and yet-to-be-developed memory-centric applications.

- Gen-Z fabric management is secure and robust, and compatible with existing management tools. It utilizes industry-standard protocols such as management component transport protocol (MCTP) to enable rapid deployment.

- Gen-Z supports a robust hardware-enforced zoning and security framework to protect the fabric from cyber threats.

- Interoperability enables Gen-Z to efficiently transport standard and customized communications between multiple components, enabling customers and vendors to innovate and deploy new capabilities and services rapidly.

- Gen-Z’s open specifications enable it to be integrated into any solution free of charge and with no constraints on re-use.

RISC-V’s large address space, secure privileged execution environment, and extensible ISA complement Gen-Z’s scalability, built-in security, hardware-enforced isolation, and robust, easily extensible functionality. In addition, Gen-Z opens up several innovation opportunities for processors and accelerators, including the Gen-Z Memory Management Unit (ZMMU), which supports memory at any scale transparently, and the PCIe® Enhanced Configuration Access Method (PECAM) and Logical PCIe Devices (LPD), which enable massive scale-out storage solutions without requiring additional interconnects and gateways to be inserted between the processors or accelerators and data.

Summary

As shown, the flexible computational architecture of RISC-V can be leveraged by designers of massively parallel computational storage solutions, HDDs and SSDs, and memory fabric architectures such as EBOF or JBOF to implement high-performance solutions. In addition, open-source fabric architectures are being optimized for RISC-V.

References

Accelerating Innovation Using RISC-V and Gen-Z, Gen-Z Consortium

CHIPS Alliance

High-Bandwidth Accelerator Access to Memory: Enabling Optimized Data Transfers with RISC-V, SiFive

Seagate, Western Digital outline progress on RISC-V designs, RISC-V International
